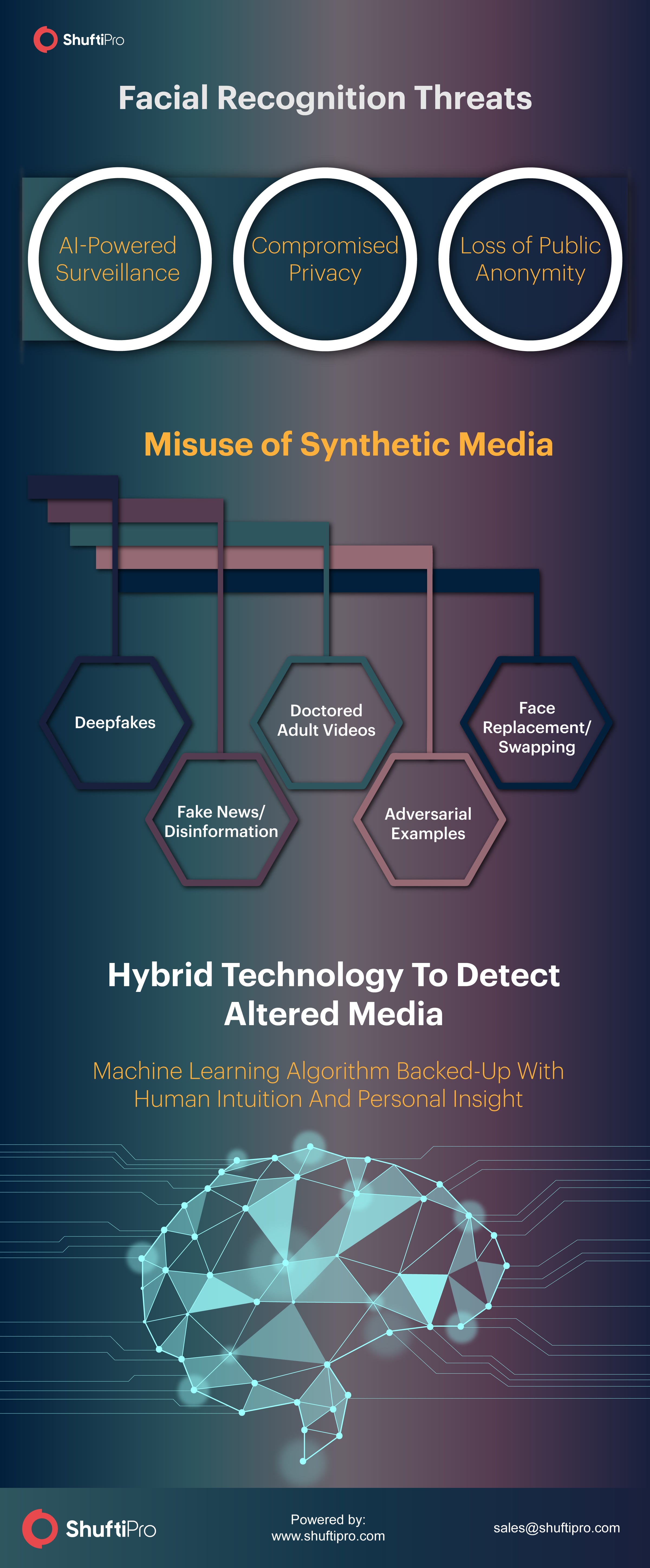

Facial Recognition: Worries About the Use of Synthetic Media

In 2019, 4.4 billion people were connected to the internet worldwide, a rise of 9% over the previous year, according to the Global Digital 2019 report. As the world shrinks to the size of a digital screen in your palm, the relevance of AI-backed technologies can hardly be overstated. Mobile applications took over marketplaces, cloud storage replaced libraries, and facial recognition systems became the new ID.

On the flip side, this has also exposed each one of us to a special kind of threat that is as intangible as its software of origin: the inexplicable loss of privacy.

AI-powered surveillance, in the form of digital imprints, is a worrying phenomenon that is fast taking center stage in technology conversations. Facial recognition is now closely followed by facial replacement systems that are capable of undermining the very basis of privacy and public anonymity. Synthetic media, in the form of digitally altered audio, video, and images, has affected many people in recent times. Deepfakes, the largest threat to online audiovisual content, are going viral, with more than 10,000 videos recorded to date.

As inescapable as facial technology seems, researchers have found ways to defeat it using adversarial patterns and de-identification software. However, the onus falls on the enablers of the technology, who must now outpace the rate at which perpetrators are learning to abuse facial recognition for their own interests.

Trending Facial Recognition Practices

Your face is your identity. Technically speaking, that has never been truer than it is today.

Social media platforms, healthcare providers, retail and marketing companies, and law enforcement agencies are among the leading users of facial recognition databases, which store countless images of individuals for various reasons. These images are retrieved from surveillance cameras embedded with the technology, and from digital profiles that can be accessed for security and identification purposes.

As a highly controversial technology, facial recognition is now being subjected to strict regulation. Facebook, the multi-billion dollar social media giant, has been penalized for its facial recognition practices several times by legal authorities. It has been accused under privacy laws of misusing public data, and its data collection policies have drawn disapproval.

Facebook’s widely used Tag Suggestions feature relies on biometric data (facial scanning) to detect users’ friends in a photo. The face template developed using this technology is stored and reused by the server several times, mostly without consent, intruding on the private affairs and interests of individual Facebook users. While users have the option to turn off face scanning at any time, the uncontrolled use of the feature exposes them to a wide range of associated threats.

Cautions in Facial Recognition Technology

As advanced as the technology may be, it has its limitations. Critics most often raise the accuracy of identification as a leading concern, pointing to the possibility of wrongly identifying suspects. This is especially true for people of color: the US government has found that even the best facial algorithms misidentify them five to ten times more often than white people.

For instance, facial recognition software, when fed a single photo of a suspect, can return up to 50 matching photos from the FBI database, leaving the final decision to human officials. In most cases, image sources are not properly vetted, further reducing the accuracy of the technology in use.
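To see why a single photo can come back with dozens of candidates, here is a minimal sketch of a one-to-many search. It is an illustration only: the record names, the two-number "face vectors", and the `top_candidates` helper are all made up for this example, and real systems compare much longer vectors produced by trained models.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two vectors: 1.0 means same direction, -1.0 opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def top_candidates(probe, gallery, k):
    """Rank every gallery record against the probe and keep the k most similar."""
    scored = [(name, cosine_similarity(probe, vec)) for name, vec in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]

# Hypothetical data: a probe photo and a tiny gallery of stored records.
probe = [1.0, 0.0]
gallery = {
    "record_A": [1.0, 0.1],
    "record_B": [0.9, 0.5],
    "record_C": [0.0, 1.0],
    "record_D": [-1.0, 0.2],
}

candidates = top_candidates(probe, gallery, k=2)
for name, similarity in candidates:
    print(f"{name}: similarity {similarity:.3f}")
```

Note that the search returns a ranked shortlist rather than a verdict. Every record gets a score, so there is always a "best" candidate, even when the right person is not in the database at all.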

De-identification Systems

Businesses are rapidly integrating facial recognition systems for identity authentication and customer onboarding. But while the technology itself is seeing widespread adoption, experts are also finding ways to trick it.

De-identification systems, as the name suggests, seek to mislead facial recognition software and trick it into wrongly identifying a subject. They do so by changing vital facial features in a still picture and feeding the flawed information to the system.

As a step forward, Facebook’s AI research lab, FAIR, claims to have achieved a new milestone by applying the same face replacement technology to live video. According to FAIR, this de-identification technology was developed to deter the rising abuse of facial surveillance.

Adversarial Examples and Deepfakes

Imagery designed to fool facial recognition, in the form of adversarial examples, can also fool computer vision systems more broadly. Wearable gear such as sunglasses can carry adversarial patterns that trick the software into identifying a face as someone else's, as researchers at Carnegie Mellon University found.

A group of engineers from KU Leuven in Belgium has attempted to fool AI algorithms built to recognize people, simply by using printed patterns. Printed patches on clothing can effectively make someone virtually invisible to surveillance cameras.

Currently, these experiments are limited to specific facial software and databases, but as adversarial networks advance, the technology and expertise will not be limited to a few hands. In the current regulatory scenario, it is hard to say who will win the race: the good guys who will use facial recognition systems to identify criminals, or the bad guys who will catch on to the trend of de-identification and use it to fool even the best of technology.

AI researchers of the Deepfake Research Team at Stanford University have delved deeper into the rising trend of synthetic media and found that existing techniques, such as erasing objects from videos, generating artificial voices, and mirroring body movements, are being used to create deepfakes.
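The idea behind an adversarial pattern can be sketched with a toy model. The example below is not the method used by the researchers mentioned above; it assumes a made-up linear "matcher" with invented weights, and applies a gradient-sign step (the idea behind the fast gradient sign method) to show how a small, bounded change to every input can flip a decision.

```python
def score(weights, bias, features):
    """Toy linear matcher: a positive score means 'this is the enrolled person'."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def sign(value):
    """Return 1, 0, or -1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def adversarial_nudge(weights, features, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model, the gradient of the score with respect to each input
    is simply that input's weight, so we step against the sign of the weight.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]

# Hypothetical model parameters and input features, for illustration only.
weights = [0.9, -0.4, 0.7, 0.2]
bias = -0.5
face = [0.8, 0.3, 0.6, 0.5]

disguised = adversarial_nudge(weights, face, epsilon=0.3)

print("original score: ", round(score(weights, bias, face), 2))
print("disguised score:", round(score(weights, bias, disguised), 2))
```

No single feature moves by more than `epsilon`, yet the score crosses from positive to negative because every small change pushes in the worst possible direction for the model. Attacks on real deep networks are far more involved, but they exploit the same weakness.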

This exposure to synthetic media will change the way we perceive news entirely. Using artificial intelligence to deceive audiences is now a commonly learned skill. Face swapping, digital superimposition of faces on different bodies, and mimicking the way people move and speak can have wide-ranging implications. Deepfake technology has been used in fake pornographic videos, political smear campaigns, and fake news scares, all of which have damaged reputations and social stability.

Humans Ace AI in Detecting Synthetic Media

The unprecedented scope of facial recognition has opened up a myriad of problems. Technology alone can’t win this war.

Why Machines Fail

Automated software can fail to detect a person entirely, or display improper results because of tweaked patterns in a deepfake video. Essentially, this happens because the way machines and software understand faces can be exploited.

The deep learning mechanisms that power facial recognition technology extract information from large databases and look for recurring patterns in order to learn to identify a person. This entails measuring scores of data points on a single face image, such as the distance between the pupils, to reach a conclusion.

Cybercriminals and fraudsters can exploit this weakness by blinding facial recognition software to their identity without having to wear a mask, thereby escaping any consequence whatsoever. Virtually anything that uses AI solutions to carry out tasks is now at risk, as robots designed to do a specific job can easily be misled into making the wrong decision. Self-driving cars, bank identification systems, medical AI vision systems, and the like are all at serious risk of being misused.
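As a rough illustration of what "measuring data points on a face" means, the sketch below turns a handful of landmark positions into a short list of numbers and compares two faces by how far apart those numbers are. The landmark names and pixel coordinates are invented for this example, and modern systems learn their own features from data rather than using hand-picked distances like these.

```python
import math

def distance(p, q):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def face_signature(landmarks):
    """Describe a face as landmark distances scaled by the inter-pupil distance.

    Dividing by the pupil gap makes the signature independent of image size.
    """
    pupil_gap = distance(landmarks["left_pupil"], landmarks["right_pupil"])
    pairs = [
        ("left_pupil", "nose_tip"),
        ("right_pupil", "nose_tip"),
        ("nose_tip", "mouth_center"),
    ]
    return [distance(landmarks[a], landmarks[b]) / pupil_gap for a, b in pairs]

def signature_gap(sig_a, sig_b):
    """Euclidean distance between two signatures: smaller means more alike."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(sig_a, sig_b)))

# Hypothetical landmark coordinates in pixels.
enrolled = {"left_pupil": (100, 120), "right_pupil": (160, 120),
            "nose_tip": (130, 160), "mouth_center": (130, 190)}
# The same face photographed at twice the resolution.
same_face_larger = {"left_pupil": (200, 240), "right_pupil": (320, 240),
                    "nose_tip": (260, 320), "mouth_center": (260, 380)}
# A different face with a shorter nose and a lower mouth.
other_face = {"left_pupil": (100, 120), "right_pupil": (160, 120),
              "nose_tip": (130, 150), "mouth_center": (130, 200)}

same_gap = signature_gap(face_signature(enrolled), face_signature(same_face_larger))
other_gap = signature_gap(face_signature(enrolled), face_signature(other_face))
print("gap to same face: ", round(same_gap, 3))
print("gap to other face:", round(other_gap, 3))
```

Because the whole decision rests on a few numbers, anything that shifts those numbers, whether a doctored image or a carefully designed pattern, can shift the decision too.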

Human Intelligence for Better Judgement

Currently, there is no tool available for accurate detection of deepfakes. Compared with algorithms, humans can more readily be prepared to detect altered content online and stop it from spreading. An AI arms race coupled with human expertise will determine which technological solutions can keep up with such malicious attempts. The latest detection techniques will, therefore, need to combine artificial and human intelligence.

By this measure, artificial intelligence reveals undeniable flaws that stem from the abstract analysis that it relies on. In comparison, human comprehension surpasses its digital counterpart and identifies more than just pixels on a face.

As a consequence, hybrid technologies offered by leading identification software tackle this issue with great success. Wherever artificially learned algorithms fail, humans can promptly identify a face and perform valid authentications.
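One common way to combine the two is to let the software decide only the clear-cut cases and hand everything else to a person. The sketch below shows that routing logic; the function name and both thresholds are assumptions chosen for illustration, not values taken from any particular product.

```python
def route_decision(match_score, accept_above=0.90, reject_below=0.40):
    """Decide who handles a face match based on the software's confidence.

    The thresholds are hypothetical; a real deployment would tune them
    against its own error rates.
    """
    if match_score >= accept_above:
        return "auto_accept"
    if match_score < reject_below:
        return "auto_reject"
    # The uncertain middle band is where automated matching is least reliable.
    return "human_review"

for example_score in (0.95, 0.60, 0.20):
    print(example_score, "->", route_decision(example_score))
```

Widening the middle band sends more cases to human reviewers, which costs time but reduces the number of decisions made on shaky automated evidence.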

In order to combat digital crimes and secure AI technologies, we will have to awaken the detective in us. Being able to tell a fake video from a real one will take real judgment and intuitive skills, but not without the right training. Currently, we are not equipped to judge audiovisual content, but we can learn how to detect doctored media and verify content based on source, consistency, confirmation, and metadata.

However, as privacy advocates and lawmakers alike have noted, the necessary safeguards are not built into these systems, and we have a long way to go before we can rely on machines for our safety.