The Deepfake Challenge: Strengthening Compliance in Remote Identity Verification

KYC onboarding used to involve bringing physical documents to a branch or office for manual verification by dedicated staff members. While the process was certainly error-prone, compliance officers could at least be sure that the person submitting the documents was physically present. Now, driven in large part by the COVID-19 pandemic, onboarding is largely completed remotely, and the use of deepfakes has become an existential threat.

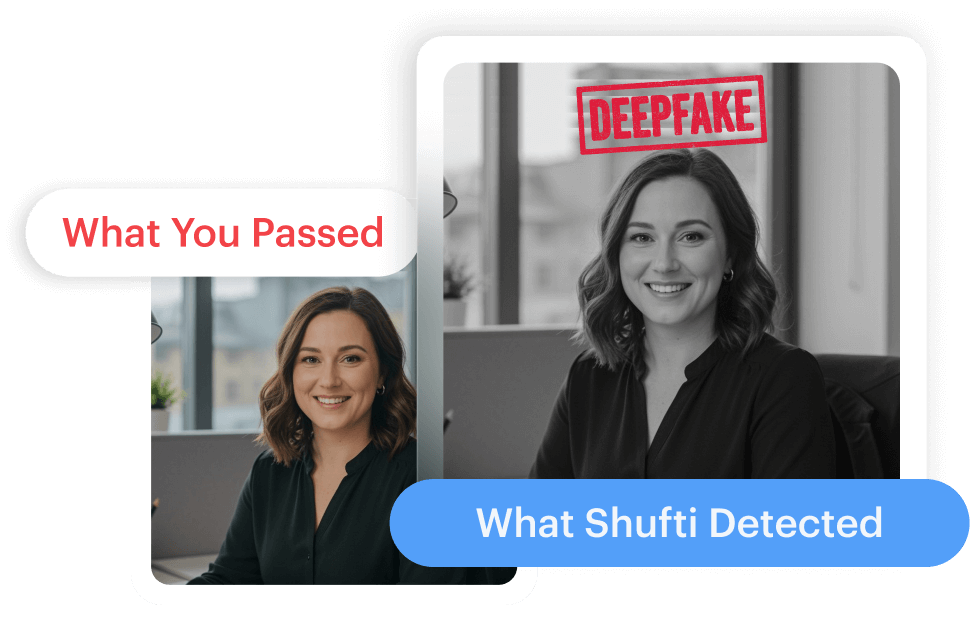

Deepfake technology has evolved rapidly from basic, easy-to-distinguish face swaps to highly realistic, multimodal simulations capable of passing traditional KYC systems. Fraudsters combine synthetic faces, voice clones, and behavioral cues in real time to replicate genuine user presence during onboarding and account recovery.

These developments go beyond fraud risks. They represent a direct challenge to the foundations of AML and KYC compliance. As the distinction between authentic and artificial erodes, regulated industries face increased exposure to onboarding prohibited users, enabling money laundering, and failing to meet regulatory expectations that have not kept pace with current deepfake advancements.

The Risks Posed by Deepfake-Enabled Fraud

Remote onboarding has become a primary target for fraud networks seeking to defeat compliance controls at scale. Deepfakes enable attackers to present synthetic identities that can pass both image and video-based KYC liveness checks, opening accounts that appear fully legitimate. These accounts can then be used to move illicit funds, layer transactions, and evade sanctions screening requirements.

The scale of these attacks is no longer theoretical. Biometric deepfakes now account for up to 40% of identity attacks, with new attempts occurring approximately every five minutes. Synthetic identities can be purchased, individually or in bulk, from vendors on online marketplaces for as little as $500. That makes it possible to launch hundreds of automated onboarding attempts with minimal technical skill, at a fraction of the potential payout. Criminal networks exploit these capabilities to identify weaknesses in compliance processes, targeting institutions that rely on static document checks or single-step liveness prompts designed for older threat models.

The threat also extends to account maintenance and recovery. Fraudsters use deepfake video and voice cloning to impersonate customers during high-risk interactions such as password resets, security challenges, or call-center verifications. Successful account takeover compromises personal data and facilitates unauthorized transactions, further increasing exposure to fraud losses and regulatory scrutiny.

These risks go beyond financial losses. They undermine the effectiveness of AML and KYC frameworks by enabling the onboarding of prohibited or high-risk users without detection, eroding the foundations of customer due diligence, and introducing potentially catastrophic systemic vulnerabilities.

The Compliance Gap

Many verification systems remain built on assumptions that fraud is static, manual, and easily detected through document checks or simple liveness prompts. These controls were developed to catch traditional forgeries and identity theft, not highly realistic, adaptive synthetic media that can respond in real time.

This disconnect has created a serious compliance gap. Financial institutions, gaming platforms, and insurers, among many others, have regulatory obligations to verify customer identities, prevent the onboarding of prohibited or high-risk individuals, and detect transactions consistent with money laundering. When synthetic identities pass these checks undetected, these obligations are not being met, increasing exposure to money laundering, sanctions breaches, and other forms of financial crime.

Regulatory bodies such as the Financial Action Task Force have recognized the development of synthetic identities as a significant threat, emphasizing the need for robust, risk-based verification processes. However, most regulatory frameworks remain principles-based and do not specify technical standards for detecting deepfakes. Institutions are therefore left to interpret the rules themselves and to implement controls that will withstand later regulatory scrutiny, even as fraud techniques evolve rapidly.

This gap represents more than a failure to keep up with fraud techniques; it reveals a fundamental weakness in the assumptions that have guided verification practices for years. Addressing it requires institutions to confront the limitations of their existing systems and commit to overhauling approaches that no longer reliably detect or adapt to sophisticated synthetic threats.

The Need for Adaptation

Deepfakes have introduced a level of risk that challenges the very foundations on which AML and KYC compliance are built. Synthetic media makes it possible to onboard prohibited users, move illicit funds, and evade sanctions screening without detection, undermining customer due diligence obligations across industries.

Relying on one-size-fits-all solutions and deepfake detection systems that take months to update could have disastrous consequences in the near future. Companies in regulated sectors need to work with providers, like Shufti, that have a proven track record in detecting deepfakes and synthetic identities, along with the technology to keep adapting as threats evolve.

How Shufti Fights Back Against Deepfakes

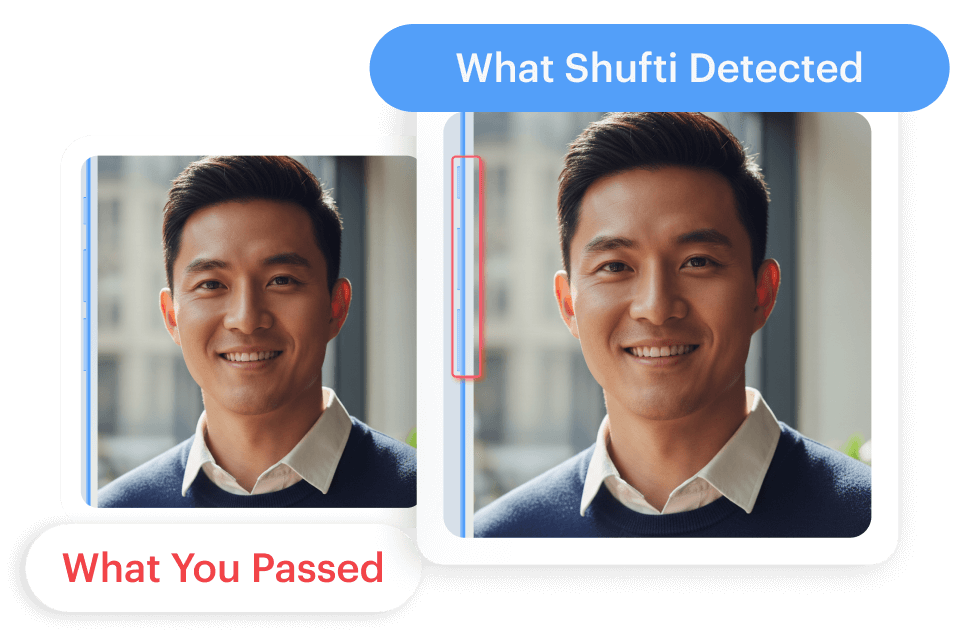

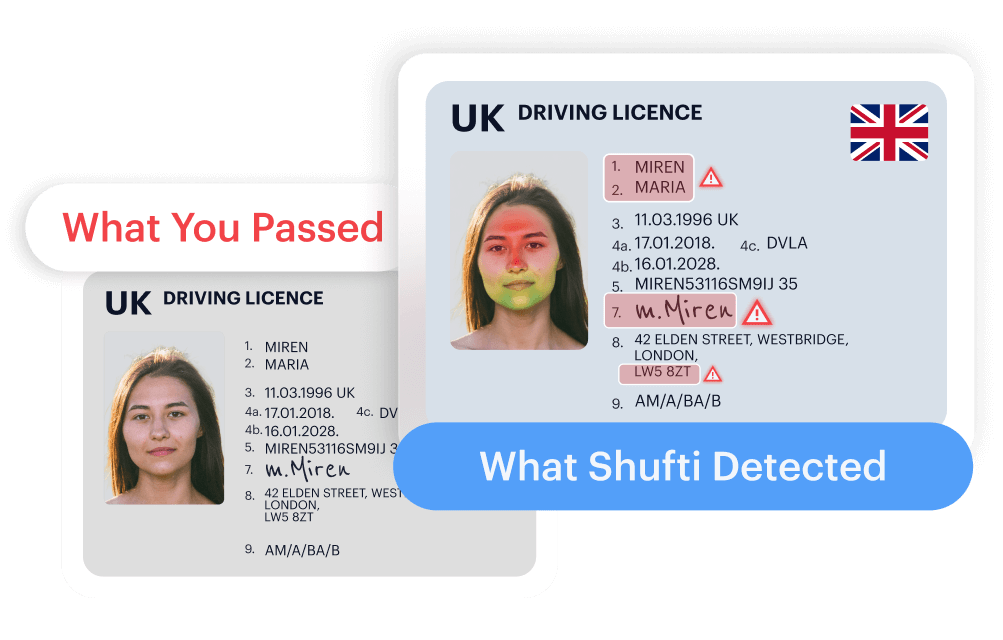

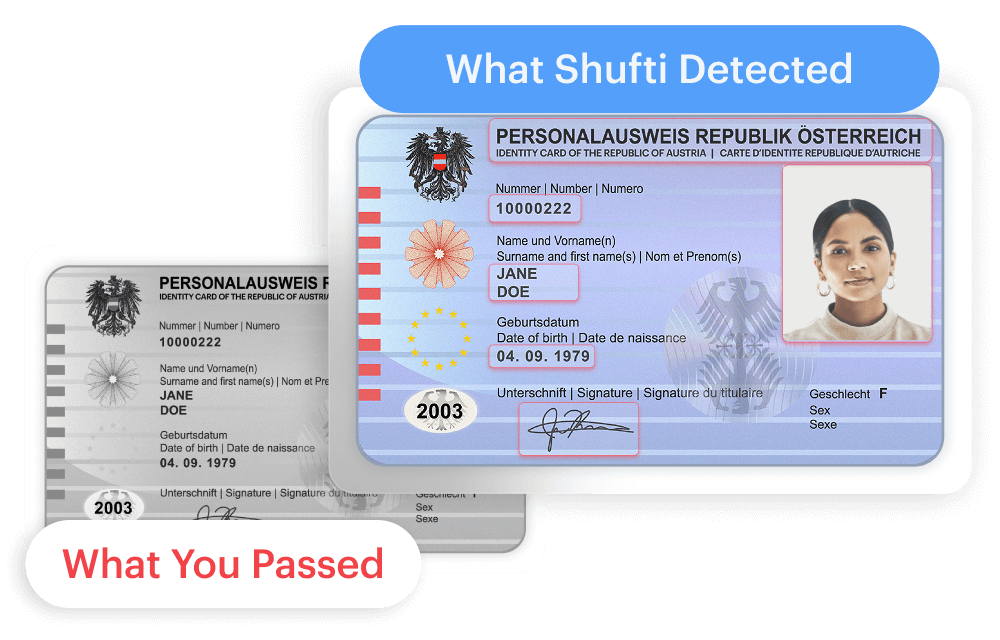

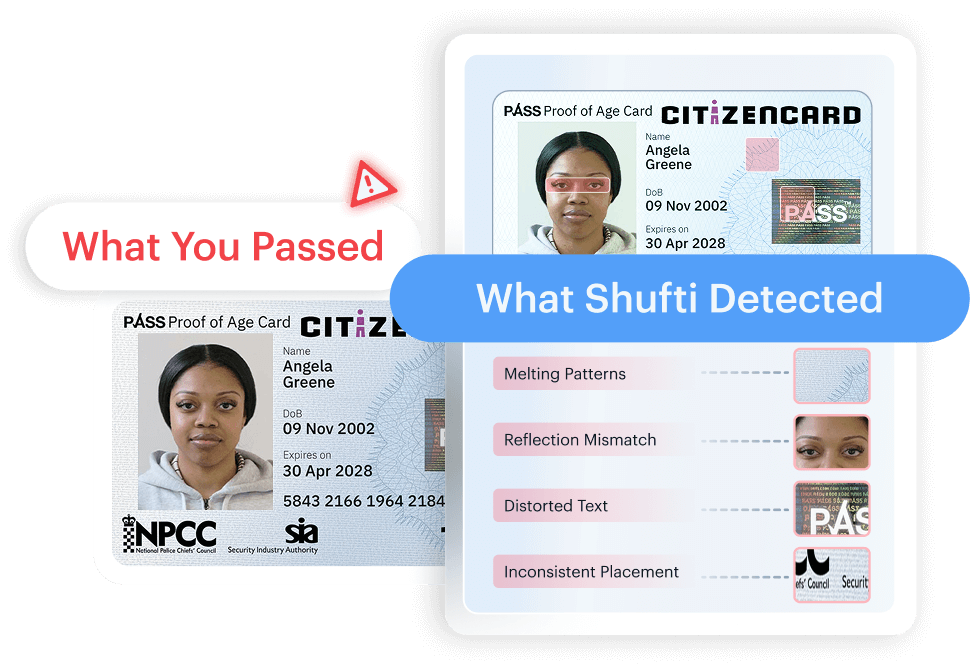

Deepfakes are rewriting the rules of digital fraud, and Shufti is built to stay ahead. Our layered defense combines advanced AI that detects subtle deepfake signals (like distorted edges and looped frames) with expert human review for high-risk cases.
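To make one of those signals concrete, the sketch below shows a simple way a looped-frame check could feed a human-review decision. It is an illustration only, not Shufti's implementation: the function names (`find_loop`, `route_session`), the `min_run` threshold, and the routing labels are all hypothetical. It also assumes looped frames are byte-for-byte identical, whereas real video is compressed and noisy, so a production system would need similarity matching rather than exact hashes.

```python
import hashlib
from typing import Optional, Sequence, Tuple


def frame_digest(frame: bytes) -> str:
    """Fingerprint a frame's raw bytes (assumption: looped frames are exact copies)."""
    return hashlib.sha256(frame).hexdigest()


def find_loop(frames: Sequence[bytes], min_run: int = 3) -> Optional[Tuple[int, int]]:
    """Look for a run of at least `min_run` consecutive frames that reappears later.

    Returns (first_start, repeat_start) for the first such run found,
    or None if no repeated run exists.
    """
    digests = [frame_digest(f) for f in frames]
    n = len(digests)
    # Try every possible offset ("lag") between an original run and its repeat.
    for lag in range(1, n):
        run = 0
        for i in range(n - lag):
            if digests[i] == digests[i + lag]:
                run += 1
                if run >= min_run:
                    start = i - run + 1
                    return (start, start + lag)
            else:
                run = 0
    return None


def route_session(frames: Sequence[bytes], min_run: int = 3) -> str:
    """Hypothetical routing: escalate sessions with looped frames to a human reviewer."""
    if find_loop(frames, min_run) is not None:
        return "human_review"
    return "automated_checks"


# Usage: five distinct frames, followed by a replay of frames 1..3.
unique_frames = [bytes([i]) for i in range(5)]
looped_frames = unique_frames + unique_frames[1:4]
print(route_session(unique_frames))
print(route_session(looped_frames))
```

A check like this would be only one layer among several. Exact repetition is easy for an attacker to avoid, which is why a signal of this kind is best paired with other detectors and with human review for high-risk cases.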

Drawing from threat data across 240+ countries and territories and 10,000+ document types, our system identifies evolving patterns and repeat fraud attempts. Unlike static systems, we roll out new detection layers monthly to counter emerging tactics.

Shufti helps you stay compliant and secure, with a defense that adapts as fast as the threat evolves.

Want to learn more about how Shufti helps companies proactively stay ahead of deepfake threats and regulatory changes? Download our report: Outsmarting the Deepfake Threat to Identity Trust

Explore Now