Facial Recognition: Burgeoning Threat to Privacy

The expanding use of facial recognition technology for ID verification, user authentication, and accessibility is finally coming under fire from privacy advocates worldwide. Proponents of digital privacy are raising concerns about user consent, data context, transparency in data collection, data security, and accountability. Adherence to strict principles of privacy, as well as free speech, calls for proper regulation aimed at the controlled use of facial recognition technology.

Facial scanning systems are used for a variety of purposes: facial detection, facial characterization, and facial recognition. As a major pillar of digital identity verification, facial authentication serves as a means of confirming an individual’s identity, and stores critical user data in the process. The technology sustains a trade-off: users get broader use of digital platforms, along with greater awareness of how their data is collected.

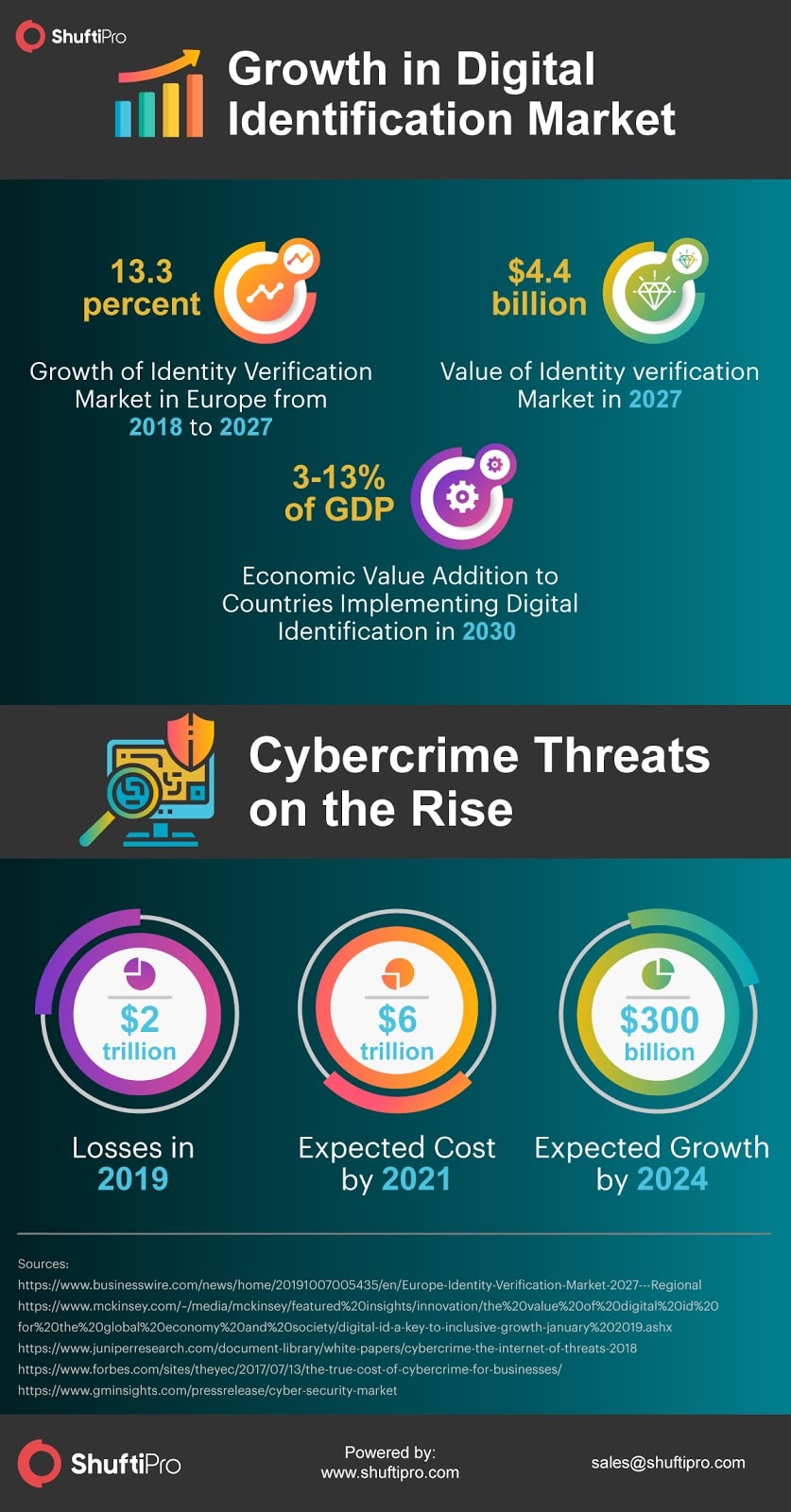

The Digital ID Market: A Snapshot

Digital identity verification is changing the way companies work. In Europe alone, the identity verification market is expected to grow at a rate of 13.3% from 2018 to 2027, reaching US$4.4 billion by the end of that period. The McKinsey Global Institute estimates that by 2030, digital identification could add value equivalent to 3 to 13 percent of GDP in countries that implement it.
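To put the European figures in perspective, here is a small back-of-the-envelope sketch. It assumes the 13.3% figure is a compound annual growth rate applied over the nine years from 2018 to 2027, which the source does not state explicitly, and it works backwards from the US$4.4 billion end value to an implied starting market size. The function name is illustrative only.

```python
def implied_start_value(end_value: float, annual_rate: float, years: int) -> float:
    """Work backwards from an end value, assuming compound annual growth."""
    return end_value / (1 + annual_rate) ** years


# Assumption: 13.3% is a compound annual rate over 2018-2027 (9 years).
end_value_busd = 4.4  # US$ billion in 2027, as quoted in the article
start_value_busd = implied_start_value(end_value_busd, 0.133, 2027 - 2018)

print(f"Implied 2018 market size: US${start_value_busd:.2f} billion")
```

If the 13.3% is instead a total growth figure over the whole period, the implied starting value would be very different, so the result should be read as an illustration of the arithmetic rather than a market estimate.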

At the same time, cybersecurity threats are on the rise, indicating a glaring need for enhanced security solutions for enterprises. According to Juniper, cybercrime cost $2 trillion in losses in 2019 alone. Forbes predicts this amount will triple by 2021 as more and more people find ways to mask their identities and engage in illicit activities online.

As a direct consequence, the cybersecurity market is also expected to grow into a $300 billion industry, as projected in a press release by Global Market Insights.

As technological advancement accelerates, this figure will probably grow in proportion to the growing threats in cyberspace, both for individuals and enterprises.

Facial Recognition Data Risks

Formidable forces tug at the digital user from both ends of the digital spectrum. Biometric data, while allowing consumers to access a wide range of digital services without much friction, also continues to pose serious risks that consumers may or may not be aware of.

Facial recognition data, if misused, can expose consumers to risks they are generally unaware of, for instance:

- Facial spoofs

- Diminished freedom of speech

- Misidentification

- Illegal profiling

Much has been said about the use of facial recognition technology in surveillance by law enforcement agencies. At airports, public events and even schools, facial profiling has led to serious invasions of privacy that are increasingly gaining public attention. While most users are happy to use services like face tagging and fingerprint scanning on their smartphones, privacy activists are springing into action as awareness and reporting of data breaches rise.

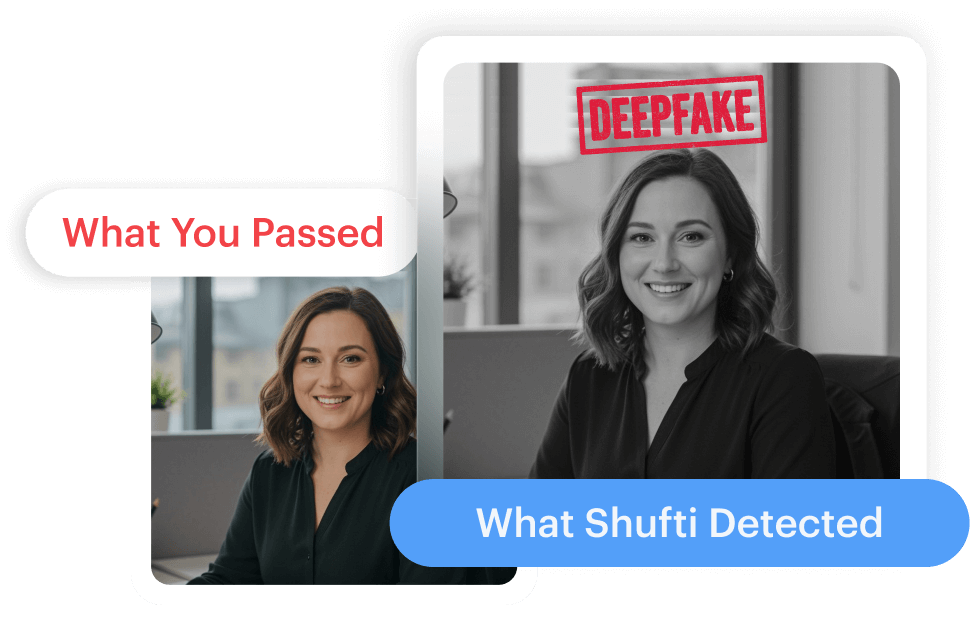

Let’s dig deeper into one of the most potent cybersecurity threats linked to facial recognition technology: deepfakes.

How Deepfakes Impact Cybersecurity

In the world of digital security, deepfakes pose a brand new threat to industries at large. At the time of writing, an estimated 14,678 deepfake videos were on the internet. As barriers to the use of AI are lowered, adversaries share the same access to advanced technological capabilities as regulators. High rates of phishing attacks are targeting financial institutions, service providers and digital businesses alike. The reputation of enterprises is at risk, as deepfakes are fully capable of altering video and audio without being detected.

This has profound security implications for identity verification processes based on biometrics, which will find it harder to identify the true presence of a customer.

With the pervasive use of evolving technology, cybercriminals will find it easier to access sophisticated tools, and nearly anyone will be able to create deepfakes of people and brands. This means higher rates of identity threats, cyber fraud, and smear campaigns against public personalities and reputable brands.

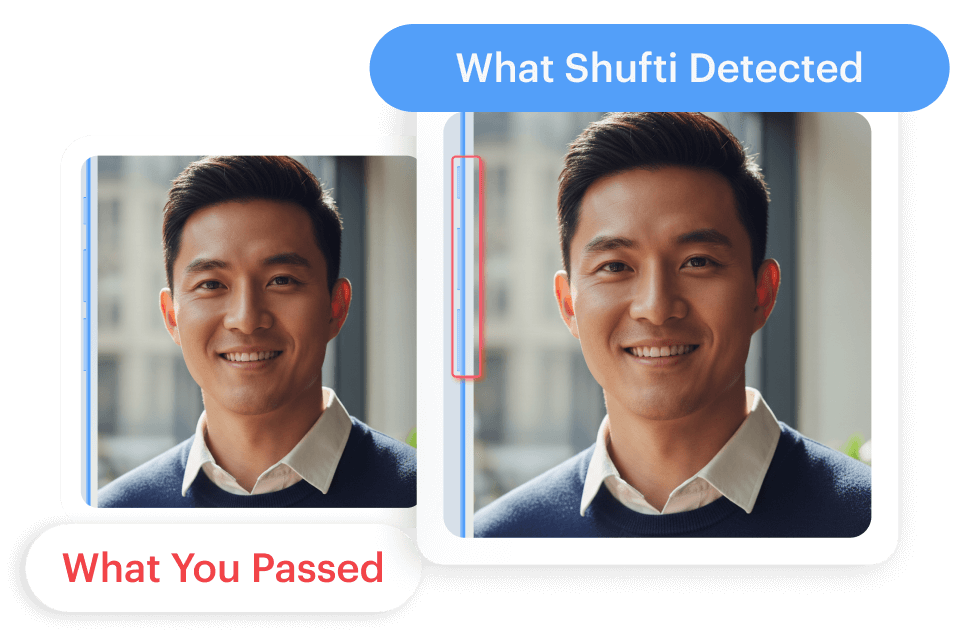

For facial identification software, this means false positives created by deepfake technology can help cybercriminals impersonate virtually anyone in the database. Cybersecurity experts are rushing to integrate better technological solutions, such as audio and video detection, in order to mitigate the impact of deepfake crimes. More subtle features of a person’s face will be recorded in order to detect impersonators.

However, it is impossible to turn a blind eye to the speed at which generative adversarial networks are making deepfakes harder to detect. According to experts, the underlying AI technology that supports the proliferation of such impersonation crimes is what will fuel more cyberattacks.

Blockchain technology might also help in authenticating videos. However, the success of this solution depends on validating the source of the material, without which any individual or enterprise is at high risk of being maligned.
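One way to picture this kind of video authentication is as fingerprint matching: the publisher records a cryptographic hash of the original video, and anyone can later check a copy against that record. The sketch below is only an illustration under stated assumptions. The `ledger` dictionary is a hypothetical stand-in for a blockchain or other tamper-evident record, and the function names and sample bytes are invented for the example. It also shows the limit noted above: the check proves a copy matches what was registered, not that the registered source was trustworthy.

```python
import hashlib

# Hypothetical stand-in for a tamper-evident ledger (e.g. a blockchain).
# Maps a video identifier to the SHA-256 digest recorded by the publisher.
ledger = {}


def video_fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of the raw video bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def register_video(video_id: str, data: bytes) -> None:
    """Record the fingerprint of the original video at publication time."""
    ledger[video_id] = video_fingerprint(data)


def is_authentic(video_id: str, data: bytes) -> bool:
    """Check whether a copy matches the fingerprint registered for video_id."""
    expected = ledger.get(video_id)
    return expected is not None and expected == video_fingerprint(data)


# Illustrative byte strings standing in for real video files.
original = b"original video bytes"
tampered = b"original video bytes with a swapped face"

register_video("press-briefing", original)
print(is_authentic("press-briefing", original))  # matches the registered digest
print(is_authentic("press-briefing", tampered))  # any alteration changes the digest
```

Because any change to the file changes its digest, an altered video fails the check, while a video that was never registered cannot be verified at all.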

Implications Across Users

Gartner warns enterprises about the use of biometric approaches to identity verification, as spoof attacks continue to riddle the digital security landscape. While popular celebrities can be exploited through misuse of their facial identity in pictures and videos, large corporations are also at high risk of being targeted.

Sensational announcements about the company or industry trends can lead to stock scares and other financial repercussions. Fake news and misinformation have the potential to cause meltdowns in political landscapes. Additionally, doctored videos on social media can cause an uproar among certain demographics, leading to social unrest.

Identity Verification Technology – A win-win approach

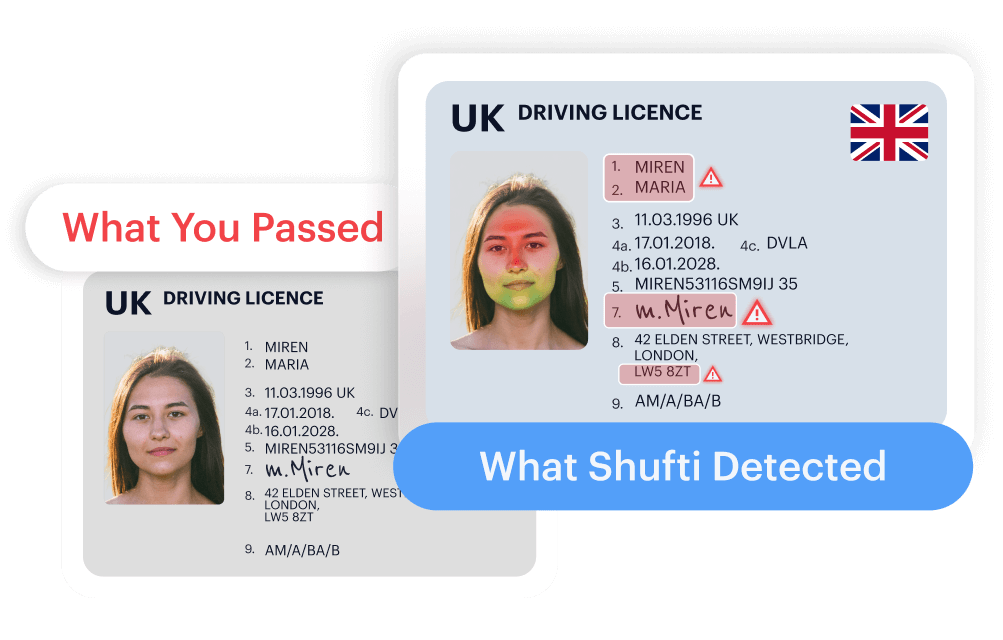

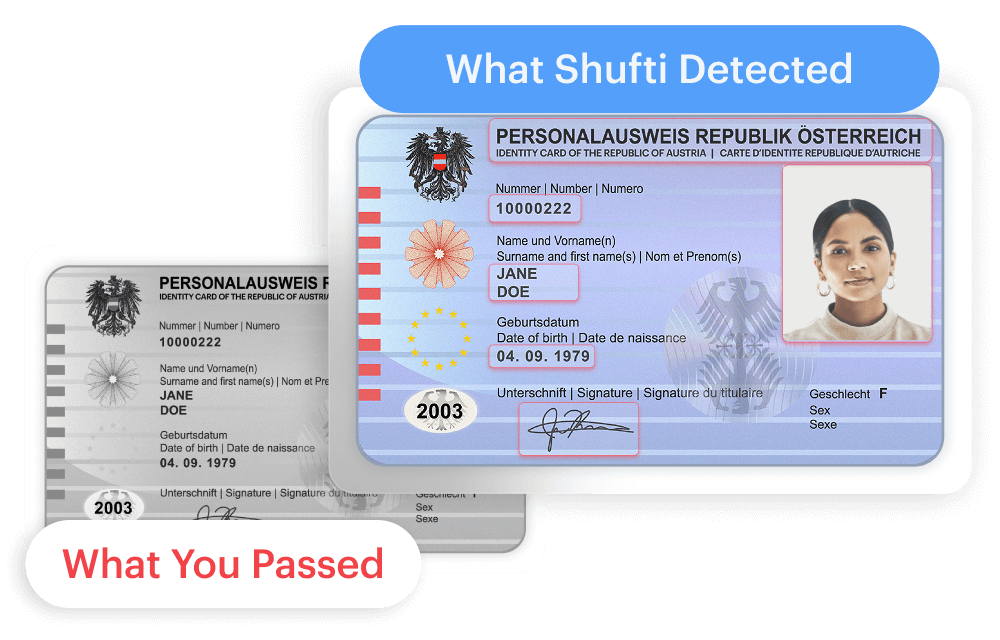

With more and more companies using digital onboarding solutions, the threat of deepfakes is real and must be effectively countered. Companies are no longer looking only for identity solutions that make the best use of customer biometrics. Instead, they now have an increasing interest in how the stored information is safeguarded against burgeoning cyber threats.

The first step in resolving digital impersonation crimes is to be fully aware of such possibilities. Enterprises and professionals need to be apprised of the rising misuse of digital verification software, and the likelihood of personal data being compromised.

Face swapping technologies must now be matched with face detection software that helps identify fake videos and misleading content. In addition, digital security solutions must be ramped up, especially those involving the use of sensitive client data.

Biometric authentication and liveness detection solutions

Liveness detection, as an added feature of facial recognition, provides an efficient answer to deepfakes as fraudulent attempts to bypass biometric identification with past photos or videos increase. The same technology behind deepfakes can also be employed to counter fraud and spoof attacks, ensuring that personal data is not compromised for cybercrime.

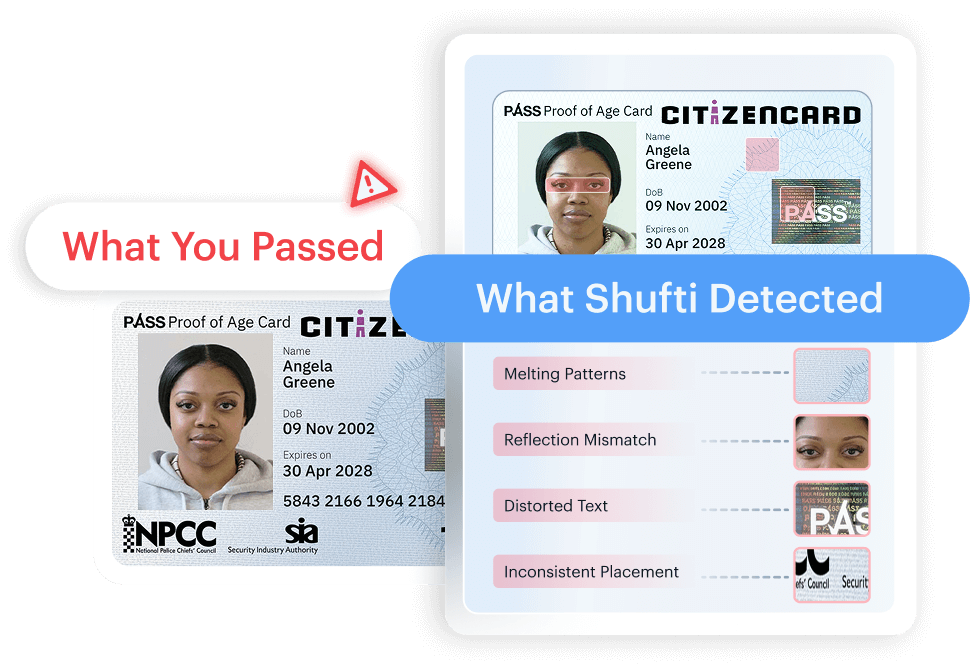

Differentiating between spoofs and real users becomes easier as additional layers of security are added to the verification process. Users are required to appear in front of a camera and capture a selfie or a live video.

Shufti performs biometric analysis to validate true customer presence, with markers that check for eyes, hair, age, and color texture differences. Coupled with microexpression analysis, 3D depth perception and human face attribute analysis, this ID verification process ensures maximum protection against digital impersonators.
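The idea of layering several liveness checks can be sketched in a few lines. This is not Shufti's implementation. The signal names, scores and thresholds below are hypothetical, chosen only to show how requiring every layer to pass makes a replayed photo or video harder to get through than a single check would.

```python
from dataclasses import dataclass


@dataclass
class LivenessSignals:
    """Hypothetical per-check scores in the range 0.0 to 1.0."""
    texture_score: float          # skin and color texture consistency
    depth_score: float            # evidence of real 3D depth
    microexpression_score: float  # small involuntary facial movements


# Assumed minimum score for each layer; real systems would tune these.
THRESHOLDS = {
    "texture_score": 0.6,
    "depth_score": 0.5,
    "microexpression_score": 0.4,
}


def is_live(signals: LivenessSignals, thresholds: dict = THRESHOLDS) -> bool:
    """Accept the capture only if every layered check meets its threshold."""
    return all(
        getattr(signals, name) >= minimum
        for name, minimum in thresholds.items()
    )


# Illustrative inputs: a live selfie versus a photo held up to the camera.
live_selfie = LivenessSignals(0.9, 0.8, 0.7)
replayed_photo = LivenessSignals(0.9, 0.1, 0.05)

print(is_live(live_selfie))
print(is_live(replayed_photo))
```

In this sketch the replayed photo scores well on texture but fails the depth and microexpression layers, which is why stacking independent checks helps against spoofs that defeat any one of them.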
