New Study by NIST Reveals Biases in Facial Recognition Technology

The National Institute of Standards and Technology (NIST) recently published a study of how race, age and sex affect the accuracy of facial recognition software. The results showed significant biases against people of color and women.

Patrick Grother, a NIST computer scientist and the report’s primary author, said in a statement:

“While it is usually incorrect to make statements across algorithms, we found empirical evidence for the existence of demographic differentials in the majority of the face recognition algorithms we studied.”

In practice, this means that most of the algorithms misidentified people of color more often than white people, and women more often than men.

JUST RELEASED! New report from @NIST provides first-of-its-kind insights into the accuracy of face recognition algorithms… and findings show a wide range in performance.

Read NISTIR 8280 here: https://t.co/U4n0FhKe6x #NISTFRVT pic.twitter.com/OsIcpn6LF8

— Cybersecurity @ NIST (@NISTcyber) December 19, 2019

The study is broad in scope. NIST evaluated 189 software algorithms from 99 developers, “a majority of the industry,” using federal government data sets that contain roughly 18 million images of more than 8 million people.

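The study evaluated how well those algorithms perform in both one-to-one matching, which checks whether a photo shows the same person as another photo (as when unlocking a phone or checking a passport), and one-to-many matching, which searches a photo against a database to find possible matches. Some algorithms were more accurate than others, and NIST carefully notes that “different algorithms perform differently.”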

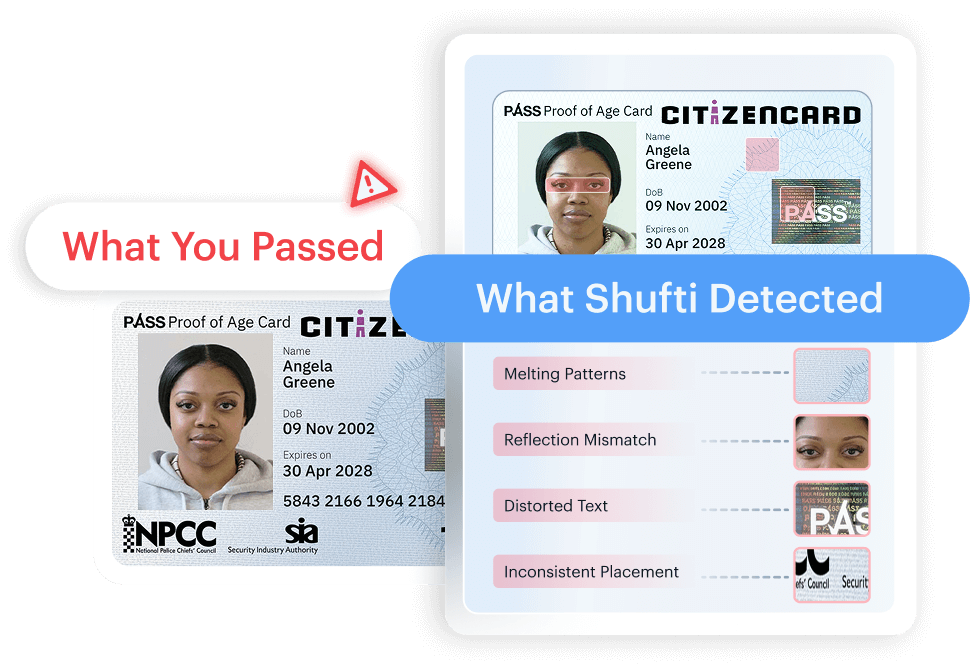

In one-to-one matching, the algorithms produced higher rates of false positives for Asian and African American faces than for Caucasian faces. In some instances the effect was dramatic: Asian and African American faces were misidentified up to 100 times more often than white faces.
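To make a figure like “100 times more often” concrete, one common way to express such a demographic differential is to compute the false match rate (the share of different-person comparisons a system wrongly accepts at a fixed score threshold) for each group and divide one rate by the other. The following is a minimal Python sketch under stated assumptions: the function names, the 0.5 threshold and all the scores are hypothetical and invented for illustration; they are not NIST’s data, code or methodology.

```python
from typing import Sequence


def false_match_rate(impostor_scores: Sequence[float], threshold: float) -> float:
    """Share of impostor (different-person) comparisons that score at or above
    the threshold, i.e. are wrongly accepted as a one-to-one match."""
    if len(impostor_scores) == 0:
        raise ValueError("need at least one impostor comparison")
    false_matches = sum(1 for score in impostor_scores if score >= threshold)
    return false_matches / len(impostor_scores)


def fmr_ratio(group_scores: Sequence[float],
              reference_scores: Sequence[float],
              threshold: float) -> float:
    """Hypothetical helper: how many times more often a group is falsely
    matched than a reference group, both measured at the same threshold."""
    reference_fmr = false_match_rate(reference_scores, threshold)
    if reference_fmr == 0:
        raise ValueError("reference group has no false matches at this threshold")
    return false_match_rate(group_scores, threshold) / reference_fmr


if __name__ == "__main__":
    # Hypothetical impostor scores, invented for illustration (not NIST data):
    # 10,000 different-person comparisons per group.
    THRESHOLD = 0.5  # hypothetical decision threshold
    reference = [0.9] + [0.2] * 9_999      # 1 false match
    group_a = [0.9] * 100 + [0.2] * 9_900  # 100 false matches
    ratio = fmr_ratio(group_a, reference, THRESHOLD)
    print(f"group A is falsely matched {ratio:.0f} times as often as the reference group")
```

With these made-up numbers, group A is wrongly matched 100 times in 10,000 comparisons and the reference group once, so the ratio comes out at about 100. Holding the threshold fixed matters: lowering it raises false matches for every group, so only a same-threshold comparison isolates the gap between groups.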

The study also found more false positives for women than for men, and for the very young and the very old than for middle-aged people. In the one-to-many scenario, African American women had higher rates of false positives.

The study also found that algorithms developed in Asian countries did not show the same dramatic gap in false positives between Asian and Caucasian faces in one-to-one matching. According to NIST, this suggests that the diversity of the training data, or the lack of it, affects how the resulting algorithm performs.

“These results are an encouraging sign that more diverse training data may produce more equitable outcomes, should it be possible for developers to use such data,” Grother said.
