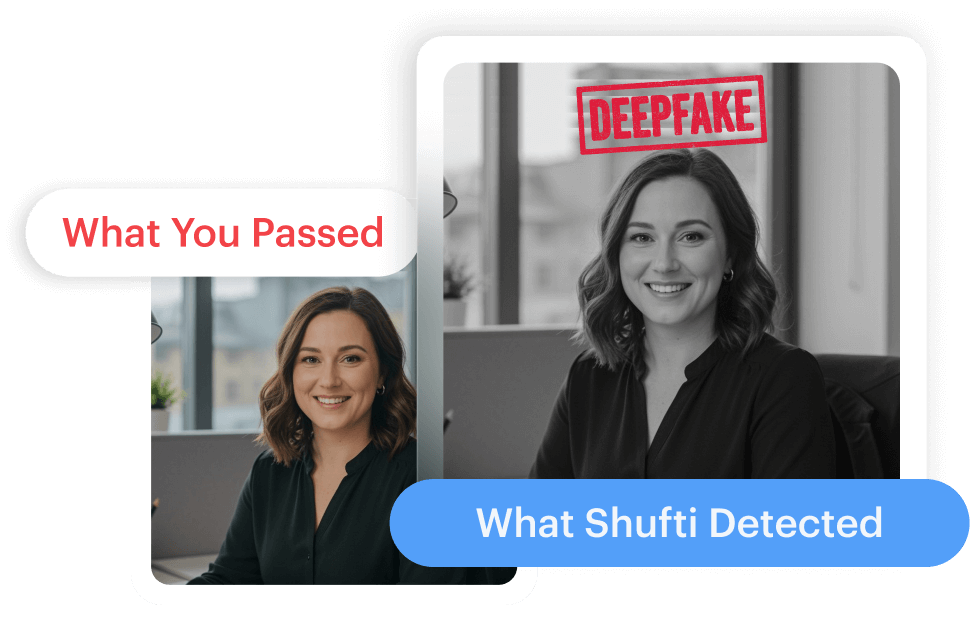

A Growing Threat: Deepfakes Are Reshaping Fraud at Scale

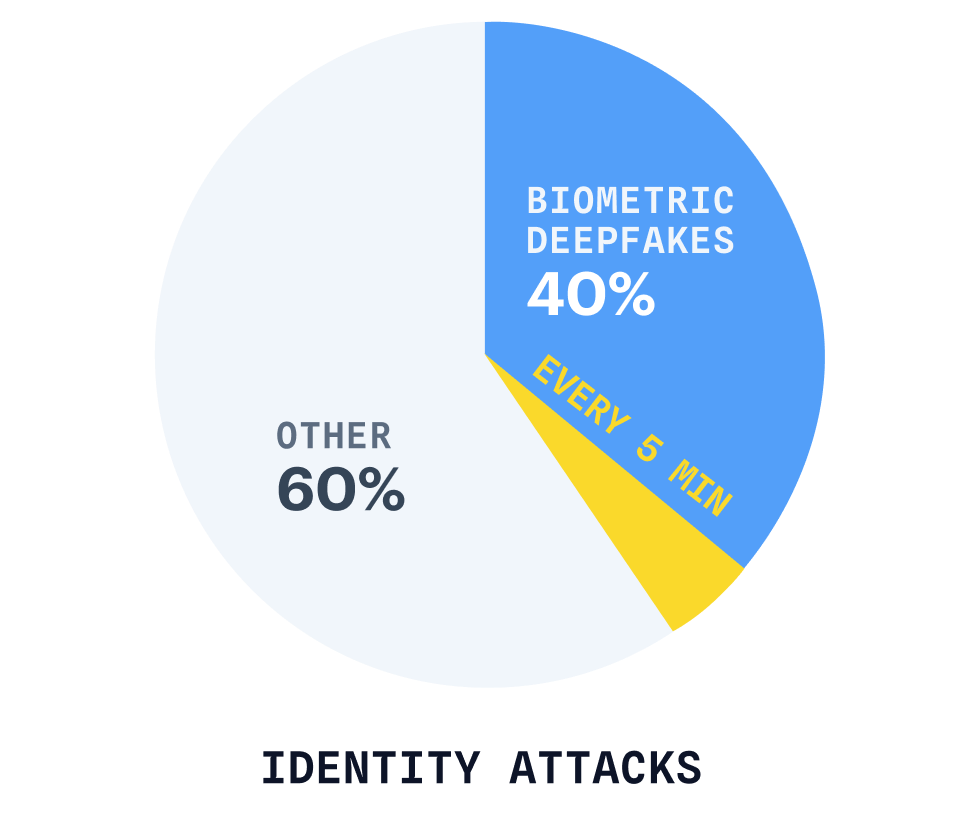

Deepfakes (realistic but fabricated images, videos, and voices) are being deployed in coordinated, often undetectable identity attacks. Biometric deepfakes now account for up to 40% of identity attacks, with a new attempt occurring every five minutes. Moreover, we’ve observed a 244% increase in account takeover (ATO) and identity fraud incidents driven by generative AI and deepfakes.

No industry is safe. Financial services firms, fintechs, gaming platforms, and insurers all face rising losses, mounting regulatory pressure, and eroding user trust. Traditional ID verification and facial recognition tools weren’t built for this level of deception and can lag behind evolving threats.

What You’ll Learn Inside the Report

The Evolution of Deepfake Fraud

Understand how the technology behind synthetic media evolved from grainy CGI to multimodal deception capable of mimicking voice, behavior, and emotion in real time. Learn about the tools, tactics, and fraud-as-a-service models powering today’s attacks.

Real-World Case Studies

Explore high-stakes fraud incidents, including a $25M transfer initiated via deepfake video impersonation, and North Korean operatives using AI to secure tech jobs under false identities. These are not hypothetical risks; they are live threats affecting global businesses right now.

Primary Attack Vectors

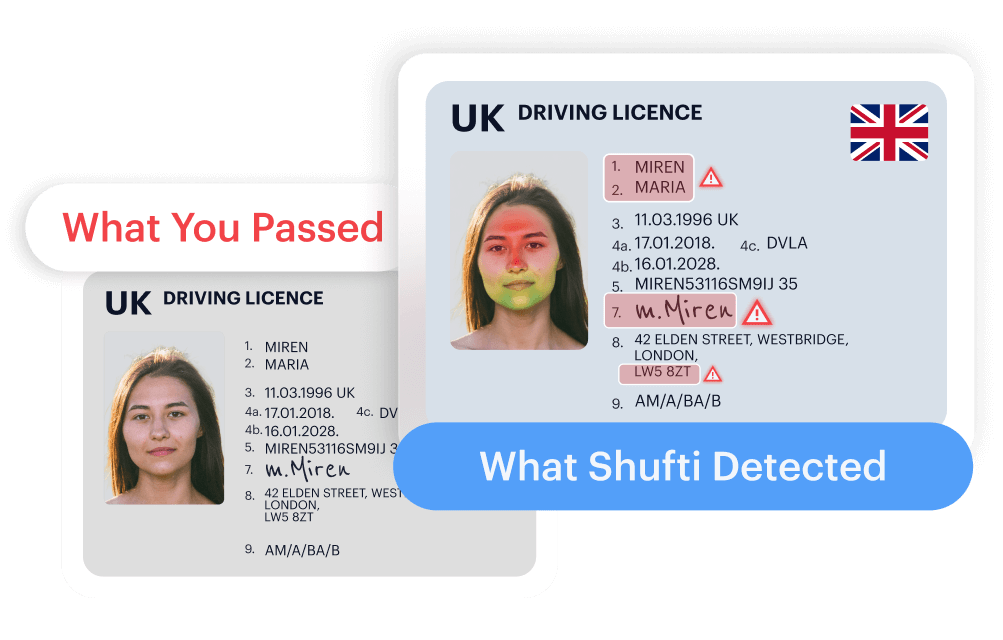

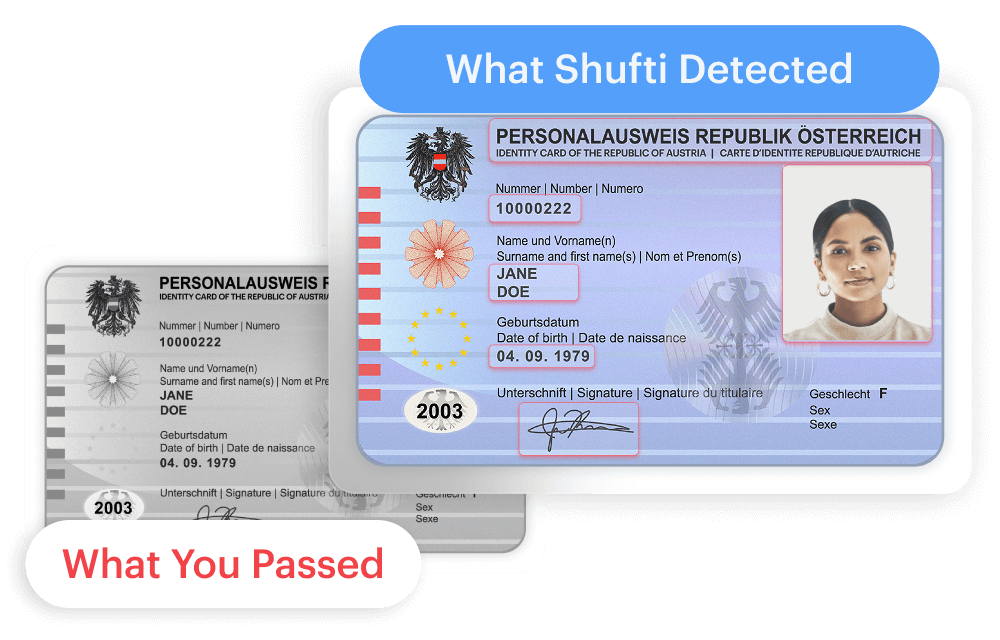

See how deepfakes are used to spoof KYC interviews, forge video and audio assets, hijack onboarding flows, and manipulate internal systems through executive impersonation or business email compromise (BEC) scams.

Industry Impact & Regulatory Gaps

Synthetic identities often underpin credit-related fraud. Discover why current regulations lag behind and why most legacy biometric systems aren’t equipped to flag these threats.

The Shufti Strategy: A Multi-Layered Defense Built for the Deepfake Age

Fraudsters are moving fast. Shufti moves faster.

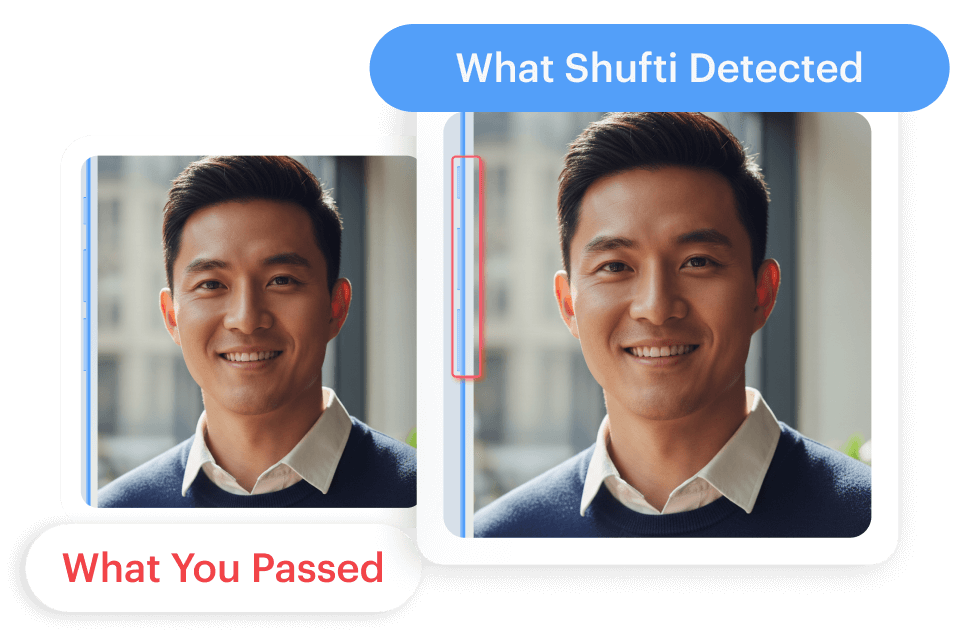

Our deepfake defense architecture is built around adaptive AI, behavioral biometrics, and proprietary liveness detection, all trained, tested, and deployed on our own tech stack.

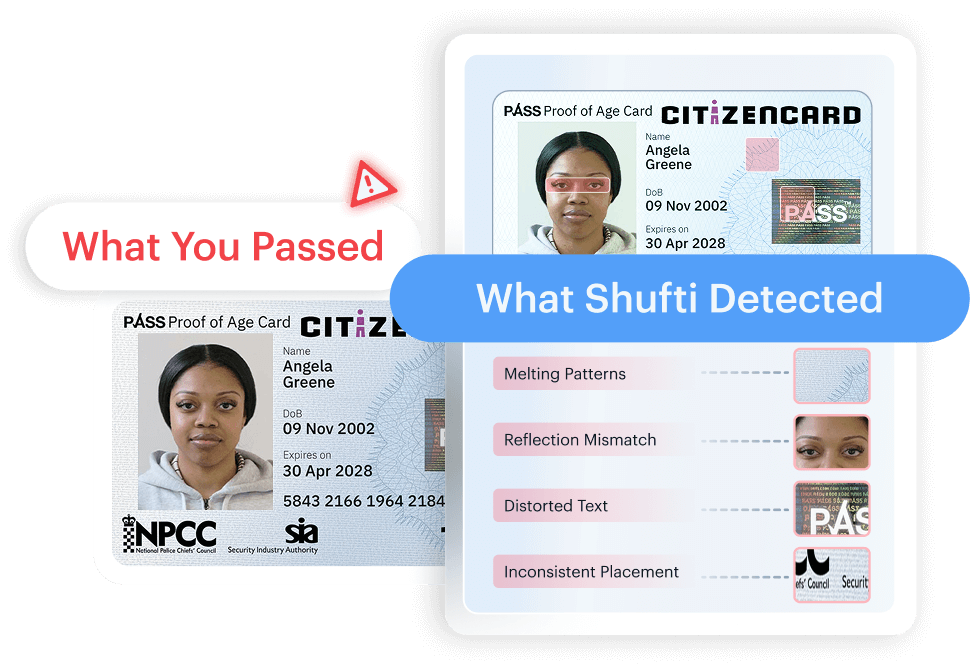

- Liveness Detection (Active + Passive): Identify imposters by analyzing light, depth, and reflection patterns, with or without user interaction.

- Behavioral Biometrics: Track micro-expressions, blinking, and pupil movement to expose synthetic media that “looks right” but doesn’t behave like a real human.

- Forensic Media Analysis: Scan files for signs of manipulation and check them against an extensive library.

- Fusion Models + Scoring Engine: Our AI models assess visual, audio, behavioral, and contextual inputs, calculating dynamic fraud scores based on your organization’s unique thresholds and risk profiles.

- Continuous Learning & Updates: Because Shufti owns the full AI pipeline, from data ingestion to deployment, we release new detection layers each month, updating defenses in real time to counter the latest deepfake advancements.
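The Fusion Models + Scoring Engine item describes combining several signals into one fraud score that is checked against organization-specific thresholds, without saying how. As a generic illustration only (not Shufti’s implementation or API), this sketch assumes each detector emits a fraud likelihood in [0, 1] and combines them with a weighted average, a common late-fusion technique. The modality weights, workflow names, and threshold values are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical per-workflow thresholds: a fused score at or above the
# threshold is flagged. Illustrative values only, not vendor defaults.
WORKFLOW_THRESHOLDS = {
    "onboarding": 0.5,
    "account_recovery": 0.4,
    "fraud_escalation": 0.3,
}

# Hypothetical, non-negative weights for each input modality.
DEFAULT_WEIGHTS = {
    "visual": 0.35,
    "audio": 0.25,
    "behavioral": 0.25,
    "contextual": 0.15,
}


@dataclass
class Decision:
    score: float
    flagged: bool


def fuse_scores(signals: dict[str, float],
                weights: dict[str, float] = DEFAULT_WEIGHTS) -> float:
    """Late fusion: weighted average over the modalities actually present.

    Each signal is assumed to be a fraud likelihood in [0, 1]. Missing
    modalities (e.g. no audio in a selfie check) are simply absent, so
    the remaining weights are effectively renormalized.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for name, score in signals.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"{name} score must be in [0, 1], got {score}")
        weight = weights.get(name, 0.0)  # unknown modalities get no weight
        weighted_sum += weight * score
        total_weight += weight
    if total_weight == 0.0:
        raise ValueError("no weighted signals supplied")
    return weighted_sum / total_weight


def decide(signals: dict[str, float], workflow: str) -> Decision:
    """Fuse the signals and compare against the workflow's threshold."""
    score = fuse_scores(signals)
    return Decision(score=score, flagged=score >= WORKFLOW_THRESHOLDS[workflow])


if __name__ == "__main__":
    # A selfie-only check: no audio signal was collected.
    signals = {"visual": 0.6, "behavioral": 0.4, "contextual": 0.2}
    for workflow in WORKFLOW_THRESHOLDS:
        print(workflow, decide(signals, workflow))
```

With these made-up numbers the fused score is 0.34 / 0.75 ≈ 0.453, which passes the looser onboarding threshold but is flagged for account recovery. A weighted average keeps the score easy to interpret and lets a missing modality drop out cleanly; a production system may instead learn the fusion with a trained model.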

What Sets Shufti Apart

No Third-Party Dependencies

We own our full tech stack, enabling faster innovation, greater transparency, and tighter security control.

Trained on Real + Synthetic Data

Our adversarial datasets mimic live attack scenarios, helping us detect even novel deepfake techniques before they’re weaponized at scale.

Customizable Risk Thresholds

Tailor verification rigor to your workflows, whether it’s onboarding, account recovery, or fraud escalation.

Privacy by Design

Every layer is built with regulatory compliance and ethical AI use in mind.

The Time to Act Is Now

Deepfake fraud is no longer emerging. It’s here, and it’s growing fast.

If your identity systems rely on static liveness checks or rule-based ID verification, you’re already exposed. The question isn’t whether deepfake-resistant defenses are needed; it’s how fast you can implement them.

This report is your executive-level guide to understanding, assessing, and mitigating the deepfake threat. Whether you lead risk strategy, IT, product security, or fraud operations, you’ll gain the insights needed to modernize your approach.

Download the full report to learn how to spot, and stay ahead of, AI-driven identity fraud.

Get your copy
