UK Online Safety Act (OSA)

The online world has opened up incredible opportunities, but it also brings risks, especially for children. To reduce these risks, the UK Parliament passed the Online Safety Act 2023 (OSA). The Act makes platforms legally responsible for keeping their users safe, gives the regulator oversight of their compliance efforts, and requires greater transparency and more control for adult users.

If your online service has users in the UK, this law may apply to you, whether you’re based in London or anywhere else in the world. This means global platforms cannot treat online safety as a UK-specific issue. Here’s what you need to know about the UK Online Safety Act.

What is the Online Safety Act?

The Online Safety Act 2023 is a UK law designed to make online platforms safer for users and to hold platforms legally responsible for protecting users from harm. The strongest protections in the Act are for children: platforms must prevent young users from seeing harmful or age-inappropriate material.

- It applies to user-to-user services (such as social media networks and messaging apps), search services (such as Google and Bing), and services that publish pornographic content.

- The Act requires these services to reduce risks, protect children from harmful content, and remove illegal content, including content relating to the sale of illegal drugs and weapons.

- The law is enforced by Ofcom, the UK’s independent communications regulator. Ofcom publishes detailed codes of practice setting out measures platforms can follow to meet their duties.

As of July 2025, in-scope services, whether based in the UK or abroad, are required to comply with the Act’s core duties, including its child safety duties. The OSA represents a shift in regulatory approach: online safety is no longer a voluntary commitment but a statutory duty backed by significant penalties.

Which online services fall under the OSA?

The OSA applies to both UK-based and international services. Your platform is likely in scope if it meets any of the following criteria:

- It allows users to create and share content or interact with each other through features such as comments or chats.

- It functions as a search engine, such as Google.

- It publishes pornographic content, which requires highly effective age assurance. Services that children are likely to use also have duties covering other harmful material, such as content encouraging self-harm and bullying.

- It has a significant number of UK users, targets the UK as a market, or presents a material risk of significant harm to UK users, even if the provider is based overseas.

In short, if your service can reach UK users and expose them to harm, the Online Safety Act expects you to act.

What does it mean to comply with the OSA?

Under the OSA, platforms must assess the risks that illegal content and content harmful to children pose on their services and take proportionate measures to address them. These steps are not mere tick-boxes; they are the foundation for designing a safe and secure platform. They are intended to help services identify risks early and apply proportionate safety measures that stop harmful material from spreading. Examples include age checks and safer design choices, such as built-in tools that let users control what appears in their feeds. Platforms must also keep records of these safety measures and how they work, so they can demonstrate compliance when Ofcom requests it.

Core Compliance Duties Under the OSA

The Act places the following core duties on in-scope platforms.

1. Risk Assessments

All services within the scope of the Act must carry out risk assessments for illegal content on their platforms. These assessments are the starting point for building a safe platform and must take into account the platform’s design, its functionalities, and the algorithms that recommend content to users. Services must also carry out a children’s access assessment to determine whether children are likely to use the service; if they are, the provider must complete a children’s risk assessment as well. These assessments are not one-time requirements: they must be kept up to date as the platform changes.

2. Preventing and Removing Illegal Content

Platforms must act proactively to stop illegal content from spreading. They are not only required to remove such content but must also put proportionate systems in place to prevent users from encountering it in the first place. They should design safeguards that reduce risk, moderate content on an ongoing basis, and use automated tools where appropriate. Illegal material must be removed quickly once the platform becomes aware of it. This includes child sexual abuse material, content inciting violence, intimate image abuse, and the sale of illegal drugs or weapons.

3. Protecting Minors from Online Harms

The OSA makes a clear distinction between Primary Priority Content, which platforms must prevent children from encountering at all, and Priority Content, from which children must be protected in an age-appropriate way. Primary Priority Content includes pornography and content that promotes self-harm, suicide, or eating disorders. Priority Content, such as bullying, violent material, and dangerous online challenges, must be managed so that children have only age-appropriate experiences online.

4. Age Assurance and Verification

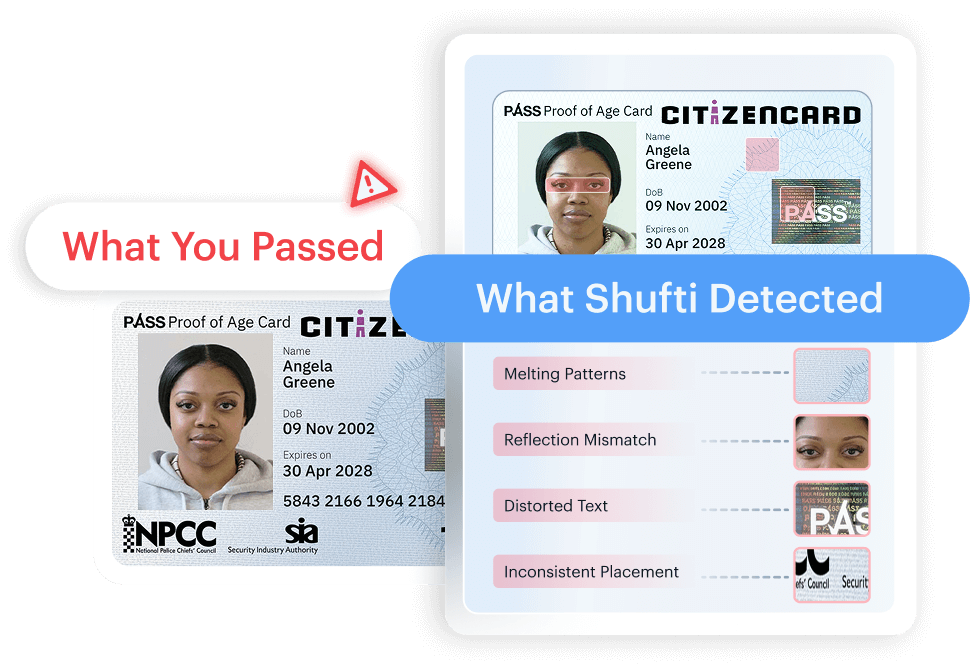

The Act requires all services that publish pornography to use highly effective age assurance to check whether their users are children. Other services that children may access must also use reliable age assurance where it is needed to protect children from harmful content. Platforms cannot rely on self-declaration; instead, they should use methods such as document checks, age estimation, or third-party verification.

5. Transparency and Control

Services in scope of the OSA must set out clearly, in their terms of service, what content is permitted on their platform and what is restricted or removed. Platforms must have a clear process showing how their moderation works, and they must let users report problems and complain when things go wrong. The Act also requires Category 1 services (the largest user-to-user platforms, such as major social media networks) to give adult users tools to control the kinds of content they see in their feeds and recommendations.

6. Record-Keeping and Ensuring Compliance

The OSA gives Ofcom the power to demand information from platforms to check their compliance. Platforms must therefore keep records of their risk assessments and of the safety measures they use. These record-keeping and reporting duties allow Ofcom to assess independently whether platforms are complying with the Act. Ofcom can also require platforms to report, for example, how quickly illegal content is removed, how user reports and complaints are resolved, and how effective their safety systems are in practice.

Consequences of Non-Compliance

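Non-compliance can result in heavy fines or other sanctions. Ofcom can impose fines of up to £18 million or 10% of a provider’s qualifying worldwide revenue, whichever is greater. In serious cases, Ofcom can also ask the courts to order business disruption measures, such as requiring payment providers, advertisers, or internet service providers to withdraw their services, which can effectively block a platform in the UK. Senior managers can also face criminal liability, for example if they fail to ensure their company complies with Ofcom’s information requests.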
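To make the penalty ceiling concrete, the short Python sketch below computes the maximum possible fine for a given revenue figure. It is purely illustrative: the function name `max_osa_penalty` is hypothetical, it assumes you already know your qualifying worldwide revenue in pounds (how that figure is defined is set by the Act and Ofcom, not by this code), and it returns only the statutory upper limit, not the fine Ofcom would actually impose.

```python
# Illustrative sketch only: computes the statutory *ceiling* on an OSA fine.
# Assumption: the input is already the provider's "qualifying worldwide revenue"
# in GBP; the rules for calculating that figure are not modelled here.

FIXED_CAP_GBP = 18_000_000  # £18 million
REVENUE_SHARE = 0.10        # 10% of qualifying worldwide revenue


def max_osa_penalty(qualifying_worldwide_revenue_gbp: float) -> float:
    """Hypothetical helper: return the greater of £18m or 10% of revenue."""
    if qualifying_worldwide_revenue_gbp < 0:
        raise ValueError("revenue cannot be negative")
    # "Whichever is greater": take the larger of the two limits.
    return max(FIXED_CAP_GBP, REVENUE_SHARE * qualifying_worldwide_revenue_gbp)


if __name__ == "__main__":
    for revenue in (50_000_000, 1_000_000_000):
        print(f"Revenue £{revenue:,}: maximum fine £{max_osa_penalty(revenue):,.0f}")
```

For a provider with £50 million in revenue, 10% is £5 million, so the £18 million figure sets the ceiling; for £1 billion in revenue, the 10% figure (£100 million) is higher and applies instead.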

Final Thoughts

The UK’s Online Safety Act marks a significant change in online regulation. It places the burden of responsibility on platforms rather than on individual users, requiring them to design safer services, protect children, and demonstrate compliance through documented risk assessments, safety measures, and performance data. The internet may never be risk-free, but under the OSA, platforms are obligated to build services with users’ safety at their core.
