Are Deepfakes Illegal? Laws, Ethics & Regulatory Landscape

- 01 Regulatory Pressure Due to Emerging Deepfake Laws & Policies

- 02 Operational Pain Points and Risks for Businesses & Institutions

- 03 Strategic Solutions for Combating Deepfake Threats

- 04 Are Deepfakes Illegal? The Reality

- 05 Securely Onboard Your Clients and Protect Your Revenue From Deepfake-Enabled Fraud Losses

Deepfake tools are now accessible to almost anyone, and convincing synthetic media can be created with minimal effort. High-quality synthetic media is no longer restricted to specialized environments; it is available to a far broader audience.

Financial services is one sector where identity spoofing threatens verification processes; social media is another, where fabricated content spreads rapidly. Political systems and the entertainment industry face manipulation threats as well. This raises a significant question: are deepfakes illegal outright, or does their legality depend on circumstances such as intent and use?

Regulatory Pressure Due to Emerging Deepfake Laws & Policies

Deepfake misuse is a growing concern for regulatory authorities. Even though deepfakes themselves are generally not criminalized, authorities commonly treat their misuse as fraud, defamation, identity theft, impersonation, or privacy invasion.

This evolution reflects growing recognition that synthetic media can have far-reaching implications for financial systems, personal rights, and public trust. Creating deepfakes is rarely banned as a technology; however, using them to harass, deceive, or impersonate others is unlawful in most jurisdictions. Consequently, legality depends less on how a deepfake is made than on the intent behind it, how it is shared, and the damage it can cause.

United States: Fragmented but Expanding Enforcement

The United States maintains a decentralized approach, with no single federal statute governing deepfakes. However, state-level enforcement continues to expand through targeted legislation. States such as Texas and California have made it a criminal offense to create deepfakes to influence elections, while federal legislation such as the TAKE IT DOWN Act, which is already in force, targets non-consensual explicit content. So are deepfakes illegal in the US? The answer remains situational, but enforcement patterns show growing regulatory scrutiny and penalties.

Europe: Consent-Driven Regulatory Frameworks

European regulators enforce stringent data protection and transparency standards on synthetic media. The EU AI Act requires clear labeling of AI-generated content, and under the GDPR, using personal data in a deepfake without the individual’s authorization is treated as a breach. Several countries also impose direct criminal penalties. This reinforces the view that deepfakes can infringe on identity and privacy rights.

Asia: Aggressive Enforcement Models

Some of the strictest controls are found in Asian jurisdictions. China requires proper labeling and prohibits the dissemination of AI-generated false information. South Korea penalizes generating, viewing, or possessing explicit deepfake images. Across most of the region, enforcement aligns with existing cybercrime and defamation laws.

Emerging Global Direction

Deepfakes are increasingly used for digital identity theft and synthetic fraud, prompting regulatory action worldwide. In Denmark, authorities are moving toward a comprehensive legal framework to address illegal uses. The trend is clear: harmful uses of deepfakes are moving toward criminalization, even in countries that have not yet adopted specific bans.

Operational Pain Points and Risks for Businesses & Institutions

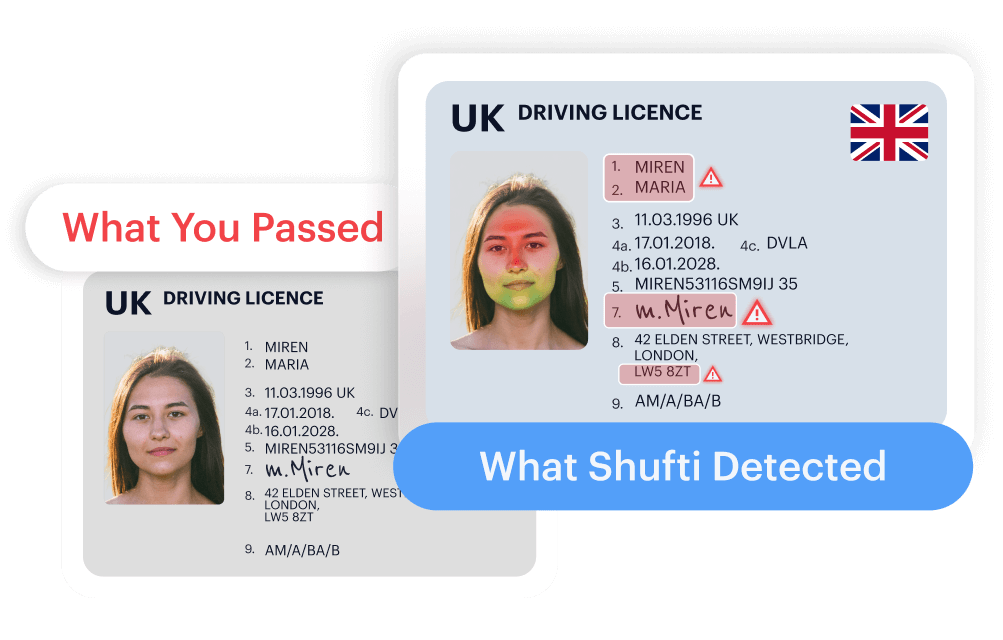

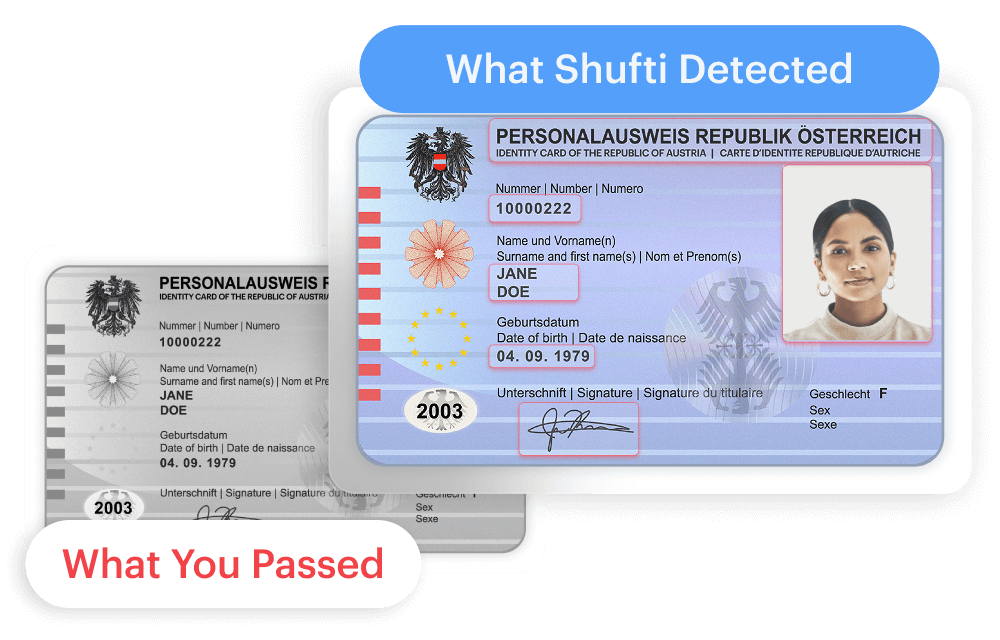

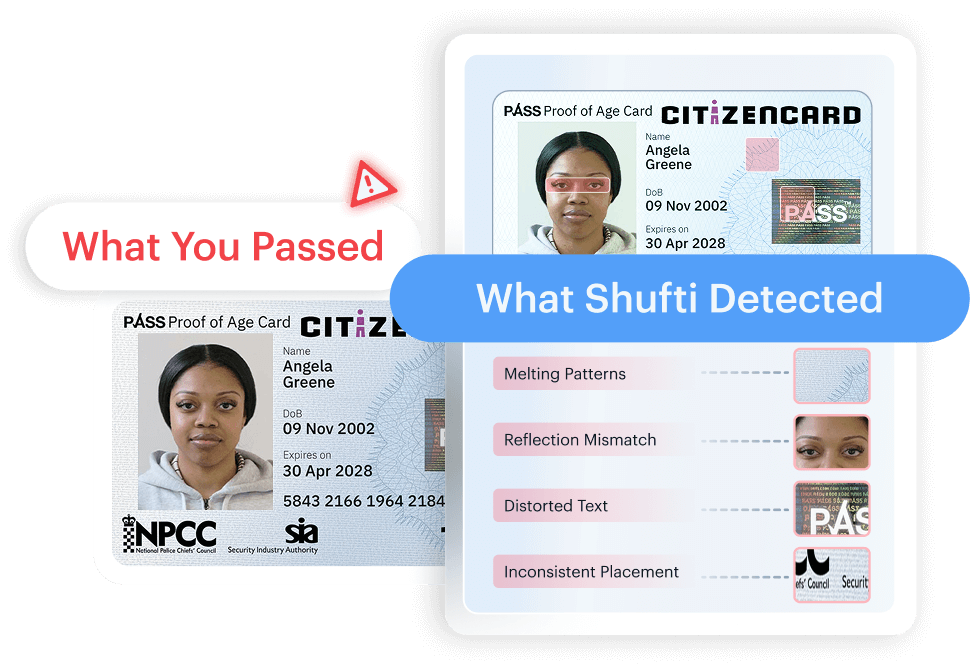

Conventional KYC defenses are increasingly vulnerable to deepfake-based fraud attempts, especially defenses that depend on checking static images or video. Fraudsters combine synthetic media with stolen personal information to build convincing synthetic identities. This complicates detection and substantially raises financial risk.

As regulatory standards become stricter, compliance teams struggle to harmonize their approaches across jurisdictions. Audits are becoming more demanding, and the risk of enforcement action has increased as supervisory authorities demand clear evidence of controls against AI-driven impersonation.

Deepfake-enabled impersonation can be exploited to bypass KYC checks or facilitate AML-related fraud, rapidly spreading false identities and undermining institutional trust. Meanwhile, legacy verification methods are commonly unable to identify manipulated biometric data, which reveals significant security gaps.

This situation raises an ongoing ethical dilemma: can deepfakes ever be justified in a commercial setting?

Issues like lack of consent, false narratives, and identity misuse are driving a decline in trust across digital platforms.

The financial consequences are serious: they extend beyond the direct costs of fraud to investigations, remedial measures, and monitoring programs.

Strategic Solutions for Combating Deepfake Threats

Companies can no longer afford to rely on conventional verification methods alone; they need a multi-layered, intelligence-based approach. The following are some of the strategic solutions businesses can implement to counter deepfake threats:

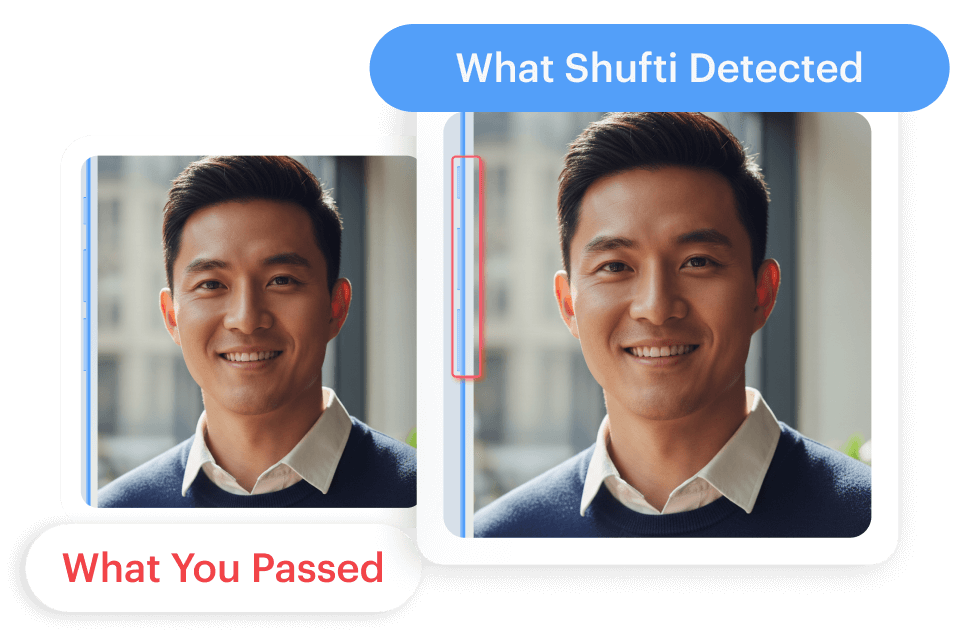

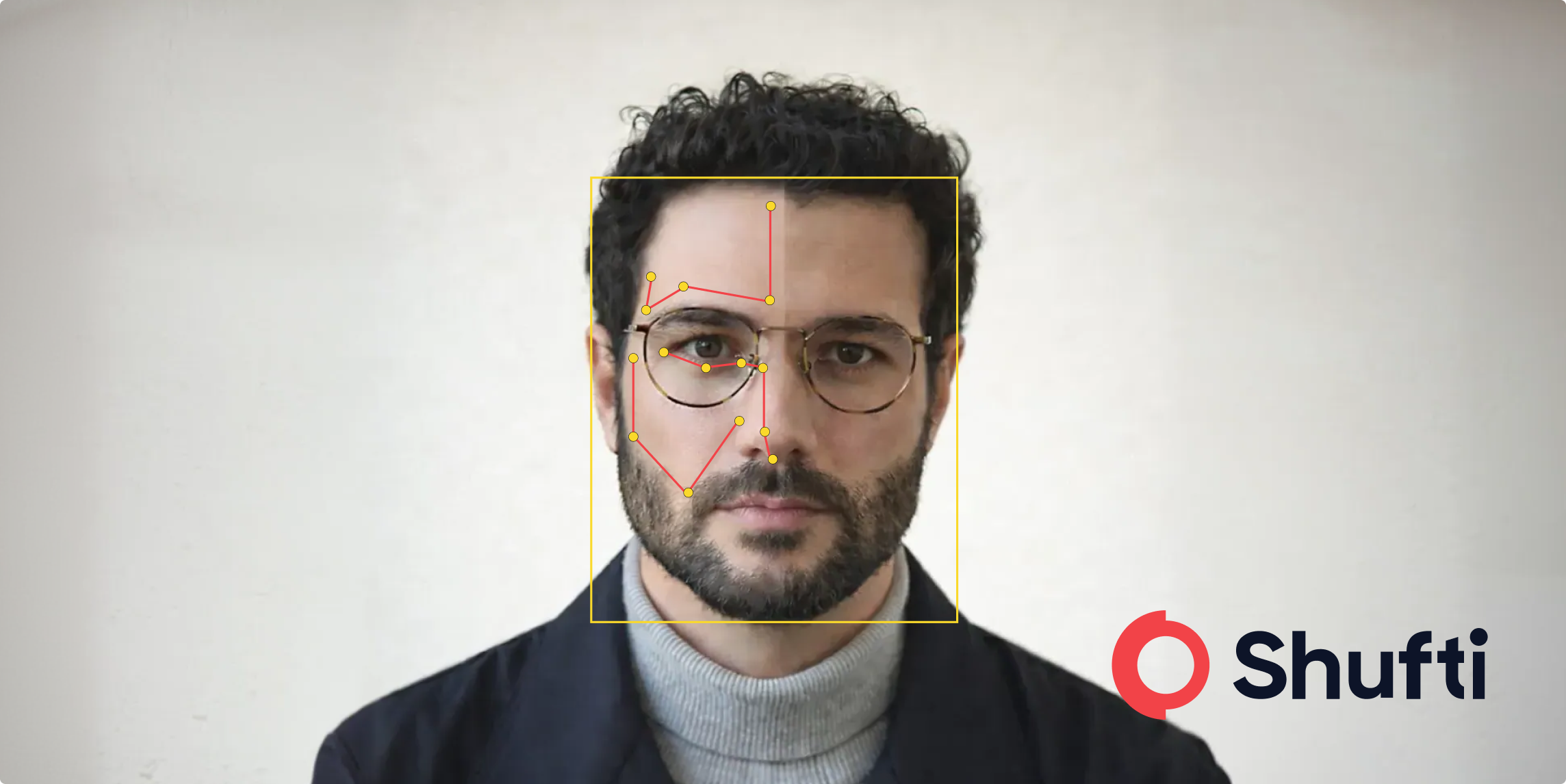

- Advanced Identity Verification: High-assurance identity verification involves biometric and liveness checks, which help confirm that an individual is real and present. Multi-step verification workflows further reduce exposure to synthetic identities.

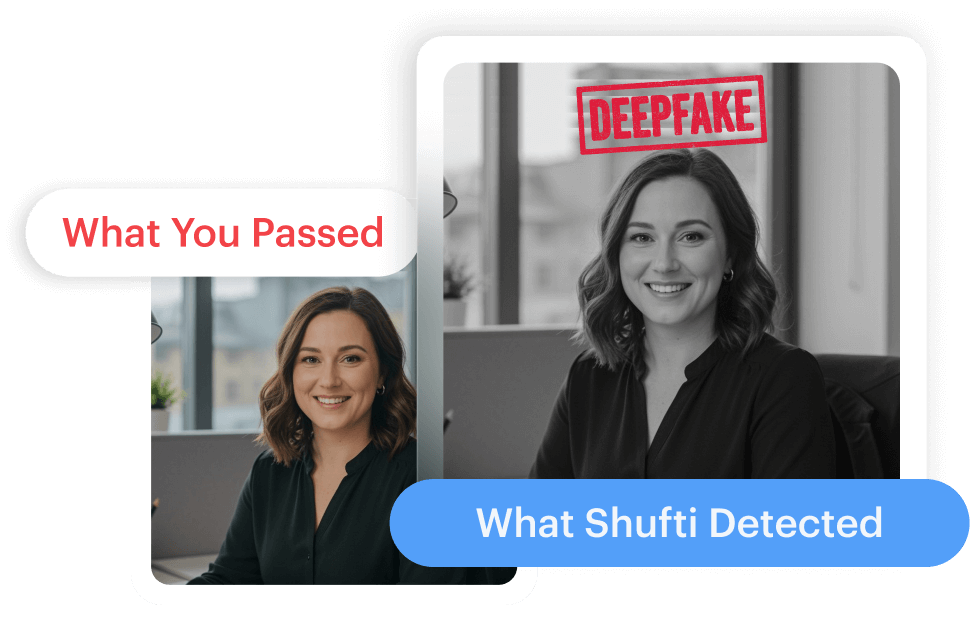

- AI-Powered Deepfake Detection: Machine learning models can identify digital artifacts, unnatural lighting, pixel-level anomalies, irregular blinking, and lip-sync errors. These anomalies are often invisible to the human eye.

- Regulatory Alignment: Building compliance-ready verification procedures, detailed audit trails, and reporting systems reduces regulatory risk and demonstrates due diligence to supervisory authorities.

- Risk-Based and Cross-Channel Protection: Tiered verification models prioritise high-risk transactions and secure the onboarding, authentication, and transaction channels, preventing compromise at multiple access points.

- Human-AI Hybrid Models: Combining automated detection with expert manual review balances efficiency with judgment, giving complicated or unclear cases a thorough evaluation. An automated check backed by human review is more effective in preventing deepfake-driven fraud than either alone.
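To make the risk-based and hybrid ideas above more concrete, the following is a minimal Python sketch of how verification signals might be routed to an outcome. Everything in it is an assumption for illustration: the `VerificationSignals` fields, the `route` and `audit_entry` functions, and the threshold values are hypothetical and do not describe any particular vendor's product or detector. Real thresholds would need tuning against labelled data.

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    STEP_UP = "step_up"              # ask for an additional verification step
    MANUAL_REVIEW = "manual_review"  # hand the case to a human reviewer
    REJECT = "reject"


@dataclass(frozen=True)
class VerificationSignals:
    # All fields are hypothetical inputs from upstream checks.
    liveness_passed: bool        # outcome of a liveness check
    deepfake_score: float        # 0.0 = likely genuine, 1.0 = likely synthetic
    high_risk_transaction: bool  # e.g. onboarding or a large transfer


# Illustrative thresholds only (assumed values, not recommendations).
REJECT_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.50
STEP_UP_THRESHOLD = 0.20


def route(signals: VerificationSignals) -> Decision:
    """Map verification signals to a tiered decision."""
    if not 0.0 <= signals.deepfake_score <= 1.0:
        raise ValueError("deepfake_score must be between 0.0 and 1.0")
    # Strong evidence of synthetic media: reject automatically.
    if signals.deepfake_score >= REJECT_THRESHOLD:
        return Decision.REJECT
    # Failed liveness or an ambiguous score: escalate to a human.
    if not signals.liveness_passed or signals.deepfake_score >= REVIEW_THRESHOLD:
        return Decision.MANUAL_REVIEW
    # High-risk activity or a mildly elevated score: add a verification step.
    if signals.high_risk_transaction or signals.deepfake_score >= STEP_UP_THRESHOLD:
        return Decision.STEP_UP
    return Decision.APPROVE


def audit_entry(signals: VerificationSignals, decision: Decision) -> dict:
    """Build a simple audit-trail record for the decision."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "signals": asdict(signals),
        "decision": decision.value,
    }


if __name__ == "__main__":
    case = VerificationSignals(
        liveness_passed=True, deepfake_score=0.6, high_risk_transaction=False
    )
    print(audit_entry(case, route(case)))
```

In this sketch, only clear-cut cases are decided automatically, ambiguous ones go to a reviewer, and every decision produces a record that could feed an audit trail. The ordering of the checks is a design choice: rejection and escalation are evaluated before step-up so that a high-risk flag never downgrades a suspicious case.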

Are Deepfakes Illegal? The Reality

Deepfakes exist in a regulatory grey zone. While the technology itself is largely legal, its misuse, especially in fraud, identity theft, political deception, harassment, and non-consensual imagery, is increasingly subject to strict legal penalties. Authorities usually enforce these penalties through existing laws that cover fraud, identity theft, and privacy violations rather than through deepfake-specific statutes.

As jurisdictions around the world become more consistent in their approach to regulation and enforcement, deepfakes are being treated less as a technological innovation and more as a legally restricted category of digital risk.

The consequences are also substantial for businesses: an organization that does not establish strong detection and verification strategies is exposed to regulatory fines, fraud losses, and loss of customer confidence.

Robust identity verification practices are therefore essential to combat deepfakes and safeguard operations and brand reputation. They help sustain confidence in online interactions across all communication and transaction channels.

Securely Onboard Your Clients and Protect Your Revenue From Deepfake-Enabled Fraud Losses

Financial institutions and digital platforms are facing a new compliance dilemma as deepfake-driven impersonation exposes gaps in identity verification and attracts regulatory scrutiny.

Shufti supports organizations with AI-powered identity verification, 3D liveness detection, and multi-layered fraud prevention controls that help reduce exposure to synthetic identity fraud while strengthening KYC and AML programs.

Request a demo today to explore how Shufti can support secure onboarding and deepfake-resistant verification across global markets.
