The Looming Threat of Deepfake Apps for the Financial Industry

Deepfakes gained prominence in 2017, when an anonymous Reddit user used open-source machine learning tools, including Google's TensorFlow, to create face-swapped fake videos. Because deepfake apps can produce convincingly realistic fake media, the threat they pose has now reached the financial industry.

In the first reported incident of deepfake audio being used to commit a financial crime, the CEO of a UK-based energy firm was tricked into transferring €220,000 to a scammer, believing the request came from the CEO of the parent company. The scammer used an AI voice-modifying app to create deepfake audio that impersonated the parent company's CEO and persuaded the UK executive to transfer the cash.

What Are Deepfakes?

Deepfakes are synthetic media created with artificial intelligence: pictures, videos, or audio recordings that are manipulated or generated to make people believe they are real. The sheer volume of fake news in circulation shows how easily people can be convinced by fabricated content, and deepfakes make such fabrications even more convincing.

Why are Deepfakes Such a Threat?

While apps such as Wombo AI may seem to be doing nothing more than making people smile, cybersecurity experts predict a rise in AI-driven deepfake scams. Whether by posing as government officials or by creating false identities to offer illegal services online, fraudsters are constantly looking for ways to steal confidential data for financial gain.

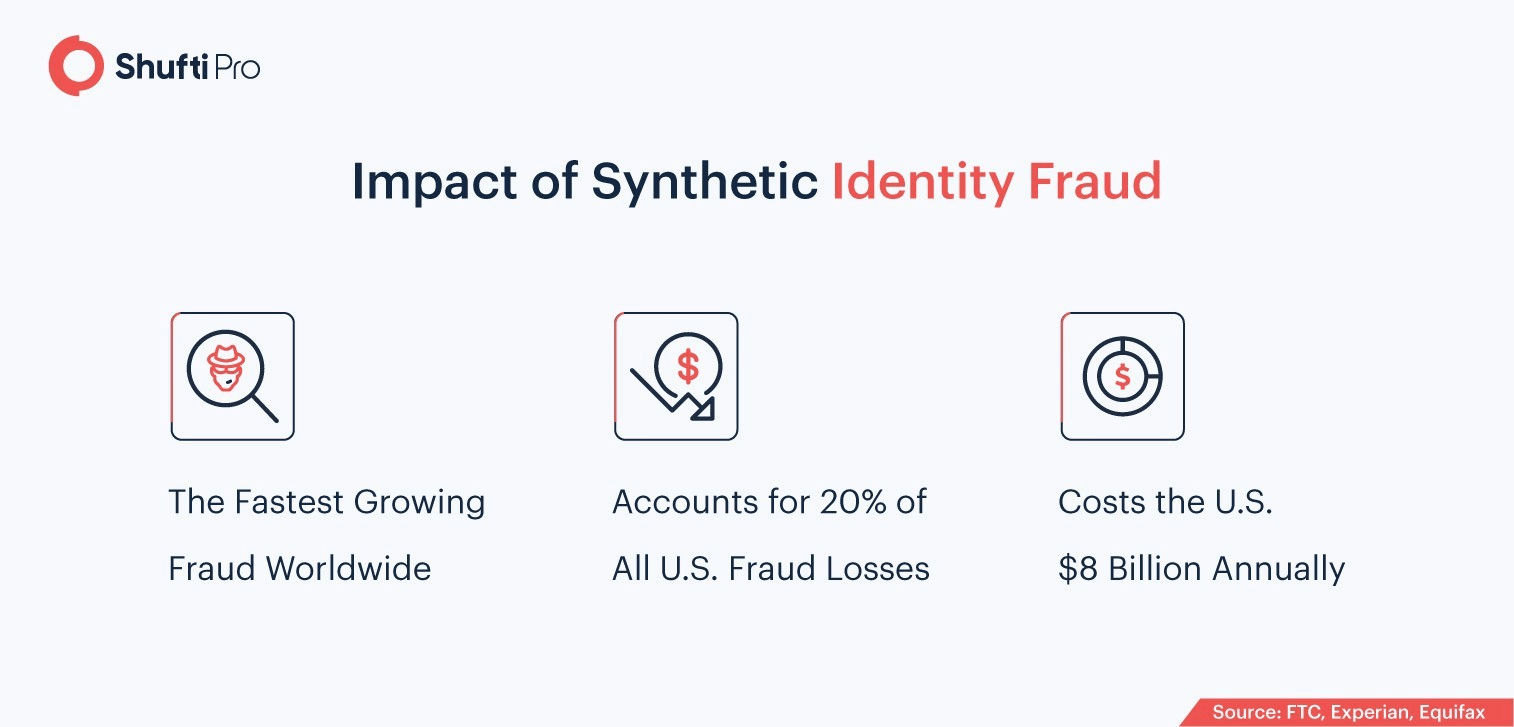

To make matters worse, fraudsters have added synthetic identity fraud to their arsenal.

Deepfakes for Committing Synthetic Identity Fraud

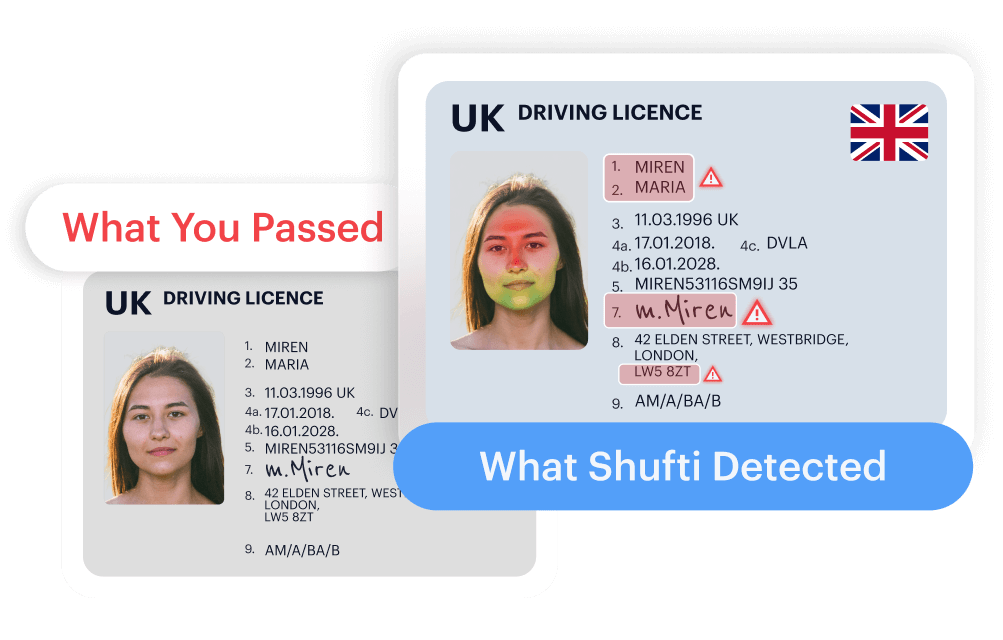

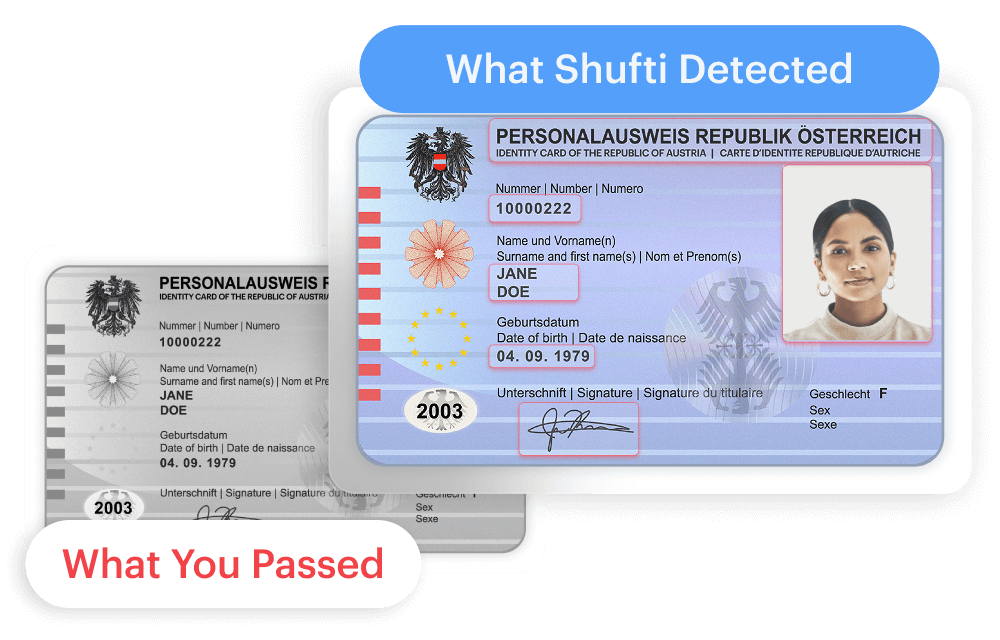

Synthetic identities are fabricated for the purpose of committing fraud, particularly in the banking and finance sector. Synthetic identity fraud involves creating a fake identity by combining false information with the genuine personal information of real individuals. This genuine data is typically obtained by hacking company databases, through phishing websites, or by buying it on the dark web.

To commit deepfake fraud, scammers use synthetic identities to create deepfaked pictures, audio, and videos. This leads to identity theft, account takeovers, and numerous online scams and hoaxes. Given that synthetic identity fraud is the fastest-growing financial crime, it is only a matter of time until deepfakes are used in high-profile crimes.

Misinformation and Defamation

Currently, a major concern about deepfakes is their ability to spread misinformation. Here is why this is a serious threat. Suppose a scammer steals recorded calls from a company's database and uses them to impersonate the CFO. The scammer can then issue an urgent directive instructing employees to send customer account details, announce company-wide layoffs, or damage the company's reputation by leaking confidential data. As a result, customers may leave, the stock price may crash, and the brand's reputation may be tarnished, all because of a deepfake.

Employing a Multi-layered Defense Mechanism

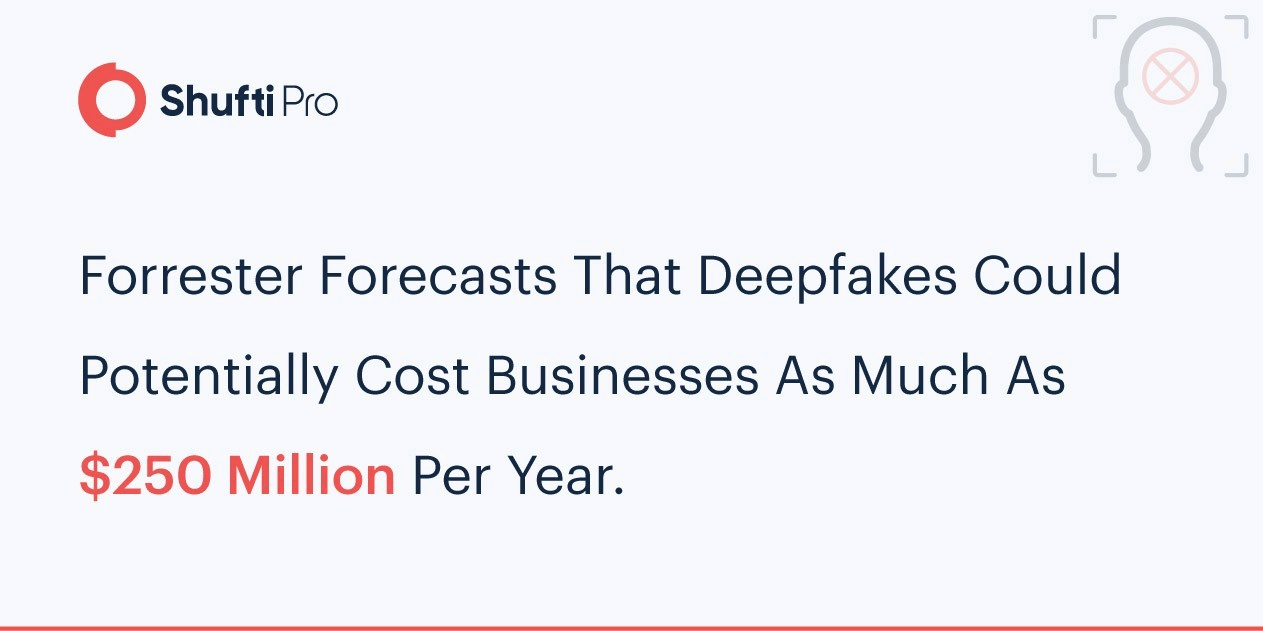

The rise of deepfake technology is enabling fraudsters to generate fake but realistic videos and pair them with synthetic identities to commit fraud. A recent report by University College London ranked deepfakes as the most serious AI-enabled crime threat, highlighting that deepfakes are no longer used just for entertainment.

As deepfake-related scams emerge, it has become crucial for financial institutions such as banks, stock exchanges, insurance companies, and FinTechs to take precautionary measures. Because two-factor authentication and knowledge-based authentication (KBA) are not sufficient on their own to detect spoofing attempts, a multi-layered approach to detecting deepfakes may include:

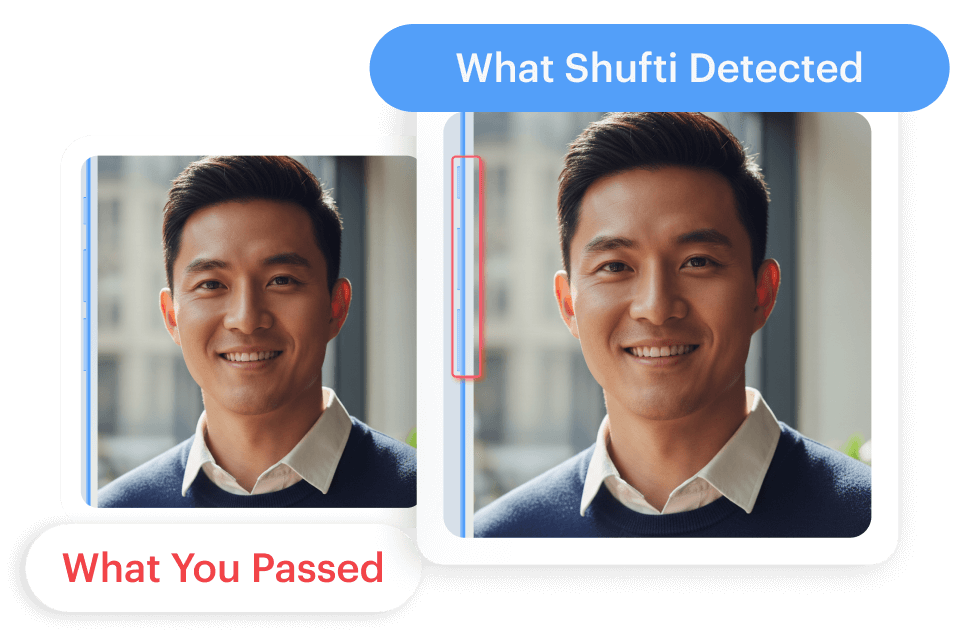

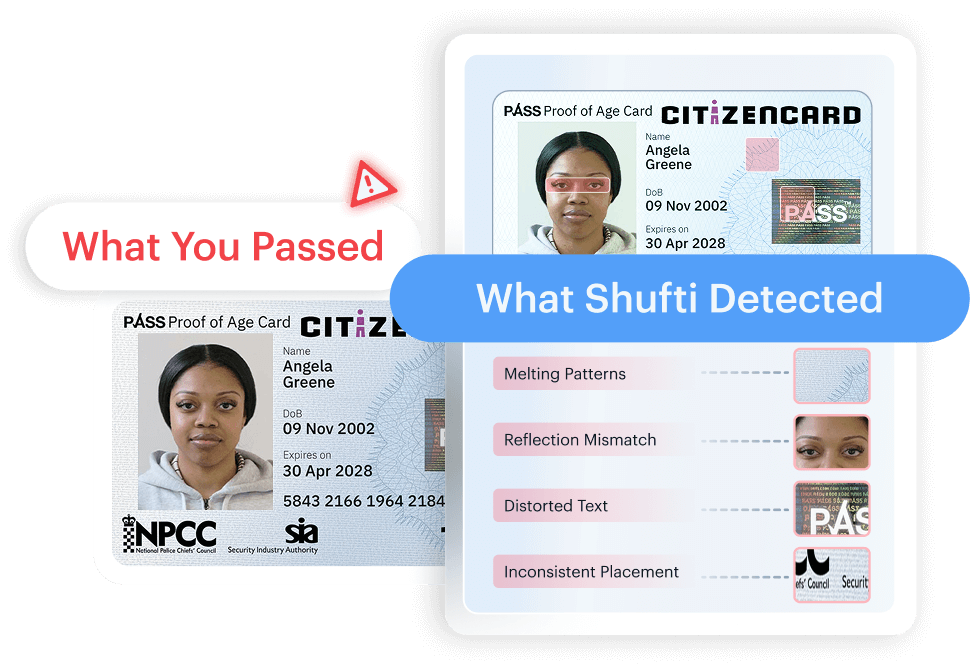

- Robust identity verification software, to verify customer identities using official ID documents

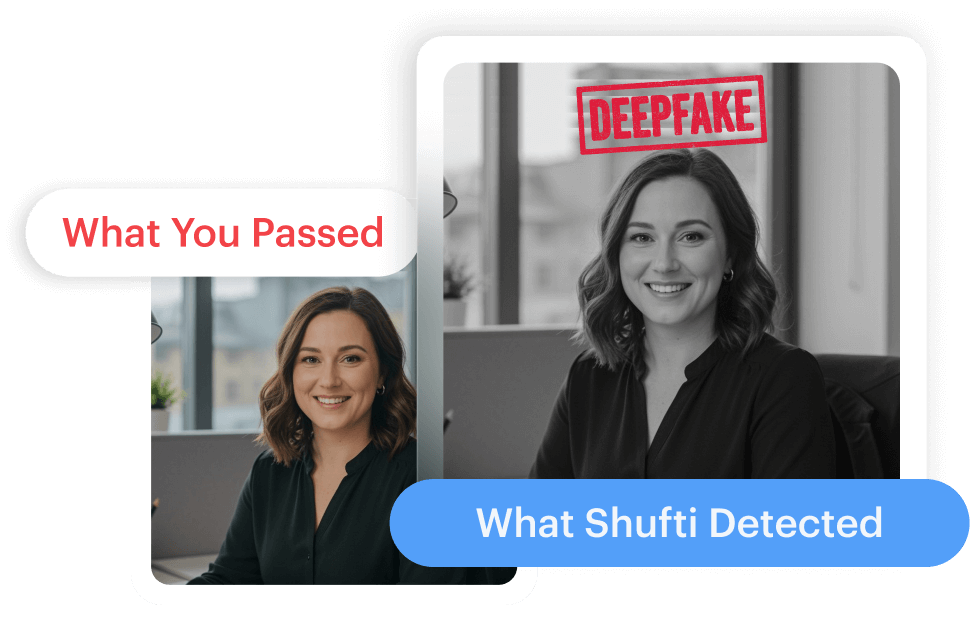

- Biometric facial authentication, to detect deepfake videos and images through features such as liveness detection, 3-D depth perception, and selfie biometrics

- Video-based KYC (Know Your Customer), in which a trained agent can spot deepfakes during a real-time interview

- Cybersecurity training of employees to raise awareness and promote vigilance
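To illustrate why layering helps, here is a minimal, hypothetical sketch in Python of how an onboarding flow might combine the automated checks listed above. It assumes each layer returns a simple pass/fail result and that any failure is escalated to a human reviewer, such as a video-KYC agent. The `CheckResult` type, the check functions, and the applicant fields are illustrative assumptions, not any vendor's API; a real system would call actual document-verification and liveness services instead of reading pre-computed flags.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CheckResult:
    """Outcome of one hypothetical verification layer."""
    layer: str
    passed: bool
    reason: str = ""


# Hypothetical layers. Real deployments would call ID-document, liveness,
# and face-matching services; these stubs only read pre-computed flags.
def document_check(applicant: Dict[str, bool]) -> CheckResult:
    ok = applicant.get("id_document_valid", False)
    return CheckResult("id_document", ok, "" if ok else "ID document failed")


def liveness_check(applicant: Dict[str, bool]) -> CheckResult:
    ok = applicant.get("liveness_passed", False)
    return CheckResult("liveness", ok, "" if ok else "possible spoof or deepfake")


def face_match_check(applicant: Dict[str, bool]) -> CheckResult:
    ok = applicant.get("selfie_matches_id", False)
    return CheckResult("face_match", ok, "" if ok else "selfie does not match ID")


LAYERS: List[Callable[[Dict[str, bool]], CheckResult]] = [
    document_check,
    liveness_check,
    face_match_check,
]


def verify(applicant: Dict[str, bool]) -> str:
    """Approve only if every layer passes; otherwise escalate to a human
    reviewer with the names of the layers that failed."""
    results = [layer(applicant) for layer in LAYERS]
    failures = [r for r in results if not r.passed]
    if not failures:
        return "approved"
    return "escalate: " + ", ".join(r.layer for r in failures)


if __name__ == "__main__":
    # A convincing selfie with a valid ID still fails the liveness layer.
    print(verify({
        "id_document_valid": True,
        "liveness_passed": False,
        "selfie_matches_id": True,
    }))  # prints "escalate: liveness"
```

Requiring every layer to pass means a deepfake that fools one check, such as a convincing selfie, is still flagged if it fails another; routing failures to a human reviewer reflects the video-KYC layer rather than rejecting applicants outright.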

Final Thoughts

Scams that leverage deepfake technology pose a new challenge for financial institutions. Traditional security mechanisms designed to keep impostors at bay are not sufficient to detect fake audio or manipulated videos. However, AI-powered identity verification technology that uses biometrics can help spot scammers in real time, often within seconds.
