AI Agent Identity Verification for Secure and Trusted Operations

How can organizations trust AI agents to handle sensitive tasks without risk?

Over 82% of enterprises have already deployed AI agents, yet 44% lack an appropriate security policy, which leaves many of these systems exposed to fraud, misuse, and impersonation. AI agents are not mere automation; they make decisions, access data, and communicate with users.

This is why establishing each agent's digital identity matters. By assigning every agent a unique identity, secure credentials, controlled permissions, and audit trails, organizations can ensure that their AI agents operate safely and comply with standards. Identity verification is not merely a technical procedure; it is the foundation of trust in any AI-based workflow.

The Digital Persona of AI Agents

As AI systems spread across digital services, it becomes increasingly important to understand and govern how they operate. AI agents can now interact with systems, data, and users with a degree of autonomy that was previously reserved for human operators. To ensure trust, accountability, and secure operations, these agents should be treated as distinct digital entities rather than anonymous pieces of software.

An AI agent's digital persona embodies this structured identity and usually consists of:

- A unique digital identity for each AI agent.

- Authentication credentials that prove the agent is who it claims to be.

- Defined permissions that restrict what the agent can do.

- Audit trails and activity logs that record the agent's decisions and actions for accountability.

- Security mechanisms that safeguard the agent against impersonation or abuse.

Together, these elements help AI agents act in a secure, accountable, and transparent way in complex online spaces.

What is Identity Verification for AI Agents?

AI agent identity verification differs from traditional KYC. Instead of verifying a human, it confirms a machine's identity using cryptographic proof, defined access rights, and traceable activity. In practice, this process helps organizations know precisely which agent is acting, what it is authorized to do, and who is accountable for it. With an explicit, verifiable identity for each agent, teams can reduce risk, retain control, and ensure that automated systems act in a secure, transparent, and compliant manner.

AI Agent Identity and Its Role in Industry Workflows

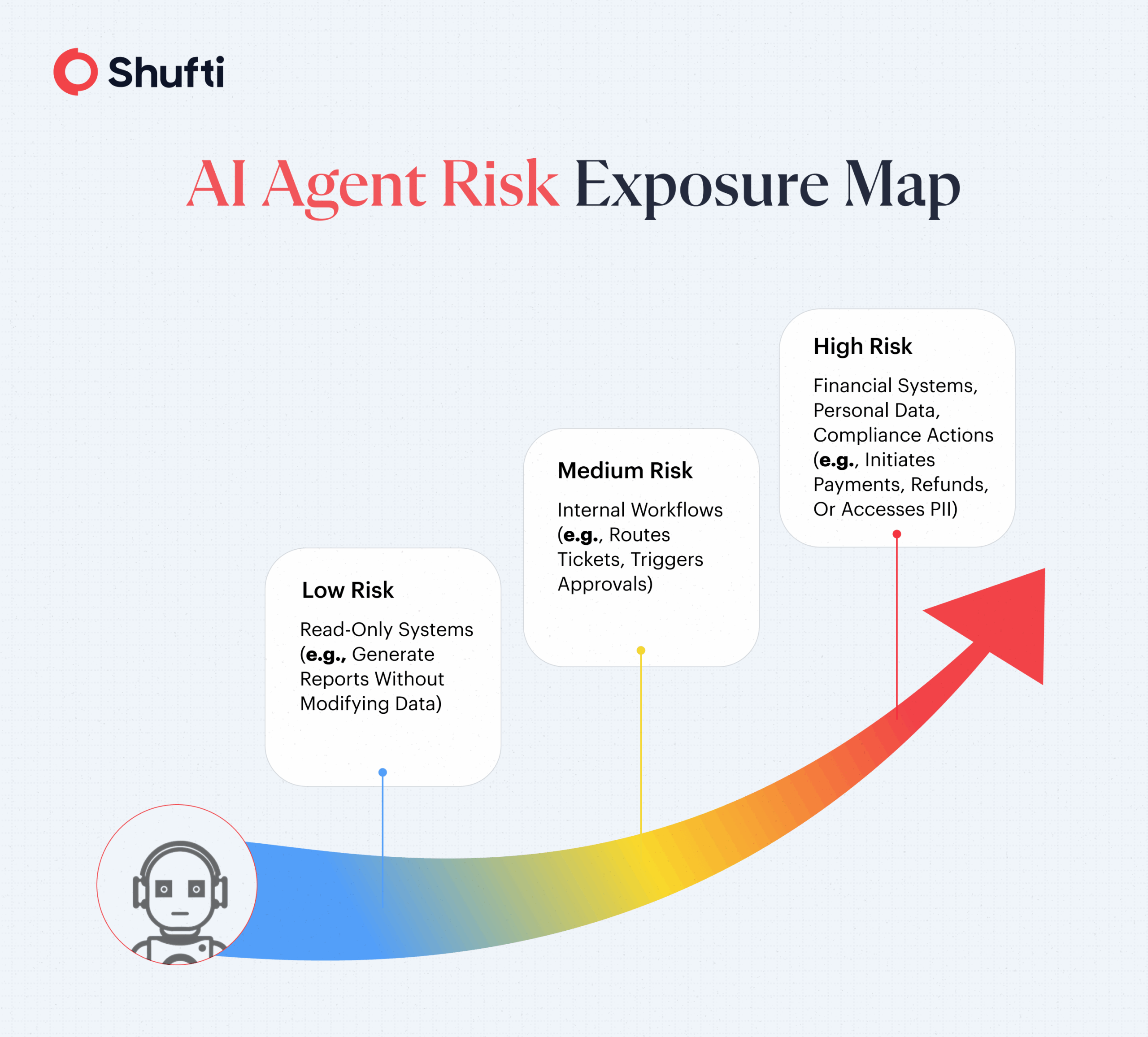

The need to verify AI agent identity grows as agents take on more responsibility on digital platforms. When autonomous systems operate without adequate validation, the risks extend beyond technical malfunctions to trust, security, and accountability. The practical examples below show how identity verification enables AI agents to work safely and deliver measurable business value.

In financial services, AI agents commonly handle transactions. Authenticating an agent's credentials before it makes a payment, issues a refund, or updates an account ensures that only authorized systems can conduct financial transactions. As a result, organizations can reduce fraud and financial losses while processing transactions faster without compromising security.

The same applies to customer support, where AI agents frequently handle sensitive customer data. Identity verification ensures that only certified support agents can access or modify personal information. This protects user privacy, supports regulatory compliance, and improves the accuracy and consistency of responses.

Beyond finance and support, automated content moderation is another essential use case. Certified AI agents can label or remove content according to platform policies, and clear authentication makes every moderation decision traceable to a trusted source. Together, these examples show how identity verification improves speed, accuracy, and accountability in AI-driven operations.

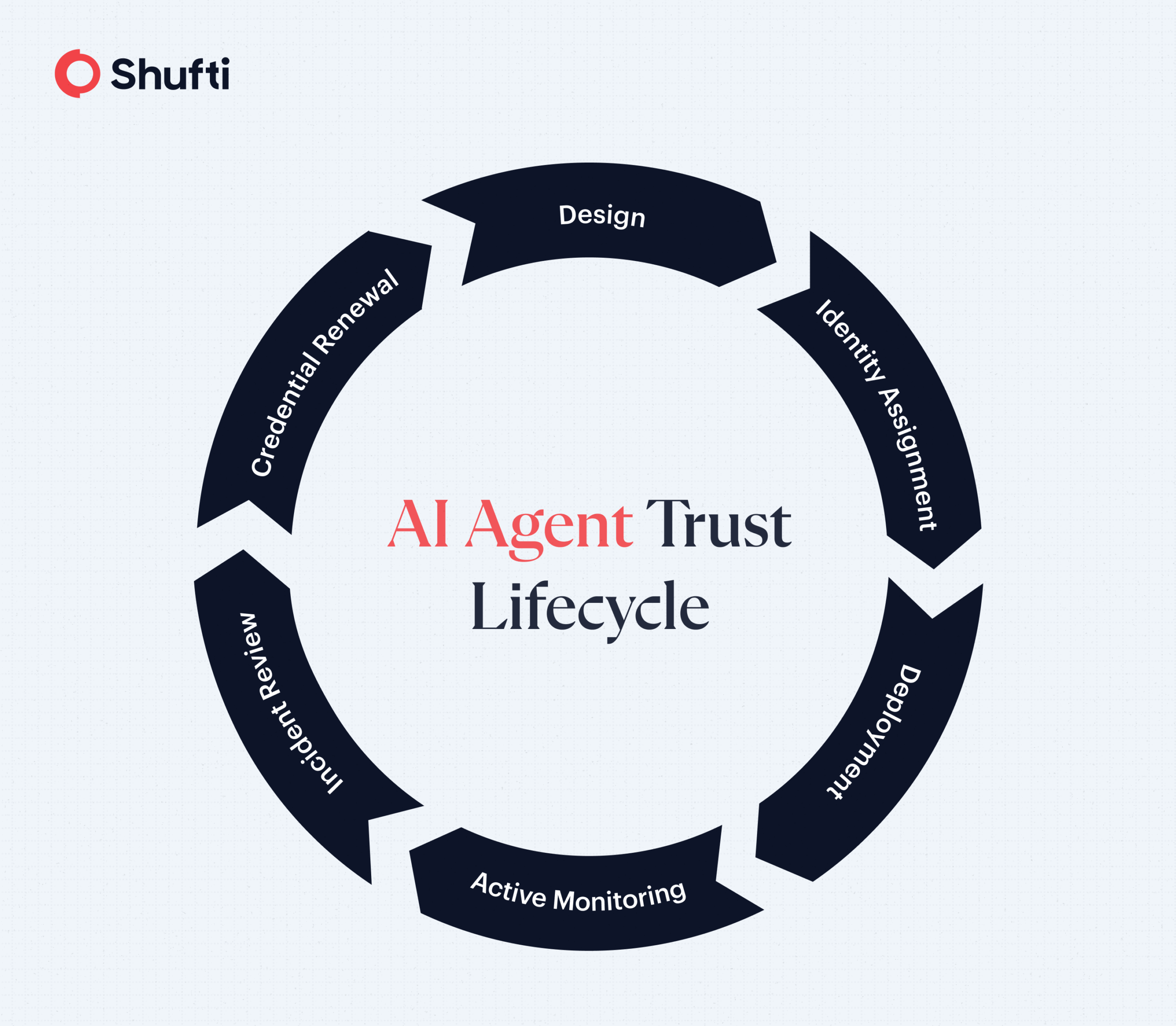

How AI Agent Identity Verification Works

AI agent identity verification is a structured process that keeps unauthorized actors out of digital systems. Instead of documents or biometrics, it relies on secure digital proof and ongoing validation.

Step 1: Issuing a Digital Identity

The first step is assigning the AI agent a unique digital identity. This identity distinguishes the agent from all others and forms the basis of trust, traceability, and accountability across systems.
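As a rough illustration of this step, the sketch below issues each agent a random UUID, records who owns it and what role it plays, and generates a per-agent secret for signing later requests. The `AgentIdentity` and `IdentityRegistry` names are hypothetical, and the in-memory dictionary stands in for whatever trusted identity store a real deployment would use.

```python
from __future__ import annotations

import secrets
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical record describing one AI agent's digital identity."""
    agent_id: str        # globally unique identifier for this agent
    owner: str           # team or person accountable for the agent
    role: str            # e.g. "support" or "payments"
    issued_at: datetime  # when the identity was created (UTC)


class IdentityRegistry:
    """In-memory stand-in for a trusted identity store (assumption)."""

    def __init__(self) -> None:
        self._identities: dict[str, AgentIdentity] = {}
        self._secrets: dict[str, bytes] = {}

    def issue(self, owner: str, role: str) -> tuple[AgentIdentity, bytes]:
        # A random UUID makes collisions between agents practically impossible.
        identity = AgentIdentity(
            agent_id=str(uuid.uuid4()),
            owner=owner,
            role=role,
            issued_at=datetime.now(timezone.utc),
        )
        # Per-agent secret the agent will later use to sign its requests.
        secret = secrets.token_bytes(32)
        self._identities[identity.agent_id] = identity
        self._secrets[identity.agent_id] = secret
        return identity, secret

    def lookup(self, agent_id: str) -> AgentIdentity | None:
        return self._identities.get(agent_id)


registry = IdentityRegistry()
support_bot, support_key = registry.issue(owner="support-team", role="support")
payment_bot, payment_key = registry.issue(owner="finance-team", role="payments")
```

Recording an owner alongside the identifier is one way to make "where the accountability resides" explicit from the moment the agent is created.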

Step 2: Presenting Secure Credentials

When the agent tries to communicate with a system, it presents cryptographic credentials such as digital certificates or secure tokens, or proves possession of a private key by signing its request. These credentials serve as evidence that the agent is authentic and that its credentials have not been altered or forged.
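A minimal sketch of the agent side, assuming a shared-secret HMAC-SHA256 signature as a simpler stand-in for certificate or private-key signatures. The credential format (`agent_id`, `timestamp`, `body`, `signature`) and the `sign_request` helper are hypothetical; the point is that any change to the request changes the signature.

```python
import hashlib
import hmac
import json
import time
from typing import Optional


def sign_request(agent_id: str, secret: bytes, body: dict,
                 timestamp: Optional[int] = None) -> dict:
    """Build the credential an agent attaches to an outgoing request (hypothetical format)."""
    ts = int(time.time()) if timestamp is None else timestamp
    # Canonical JSON so the signer and the verifier hash exactly the same bytes.
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    message = f"{agent_id}.{ts}.{payload}".encode()
    signature = hmac.new(secret, message, hashlib.sha256).hexdigest()
    return {"agent_id": agent_id, "timestamp": ts, "body": body, "signature": signature}


secret = b"demo-secret-for-illustration-only"
req = sign_request("agent-123", secret, {"action": "refund", "amount": 40},
                   timestamp=1_700_000_000)
# Changing a single field (here the amount) yields a completely different signature.
tampered = sign_request("agent-123", secret, {"action": "refund", "amount": 4000},
                        timestamp=1_700_000_000)
```

Binding the timestamp into the signed message also lets the receiving system reject old, replayed requests.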

Step 3: Credential Validation

The system checks the presented credentials against trusted records, and the agent may proceed only after successful validation. If verification fails, the system denies access and blocks the unauthorized action.
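The verifying side can be sketched as follows, again assuming an HMAC shared-secret scheme rather than full PKI. `TRUSTED_SECRETS` is a hypothetical trusted record, and the five-minute freshness window is an illustrative assumption. Unknown agents, stale timestamps, and mismatched signatures are all denied.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical trusted record: agent_id -> secret issued at registration.
TRUSTED_SECRETS = {"agent-123": b"demo-secret-for-illustration-only"}
MAX_CLOCK_SKEW_SECONDS = 300  # assumption: reject requests more than 5 minutes off


def _expected_signature(secret: bytes, agent_id: str, ts: int, body: dict) -> str:
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    message = f"{agent_id}.{ts}.{payload}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def validate_credential(request: dict, now: Optional[int] = None) -> bool:
    """Return True only for a fresh, untampered request from a known agent."""
    now = int(time.time()) if now is None else now
    secret = TRUSTED_SECRETS.get(request.get("agent_id"))
    if secret is None:
        return False  # unknown agent: deny
    ts = request.get("timestamp")
    if not isinstance(ts, int) or abs(now - ts) > MAX_CLOCK_SKEW_SECONDS:
        return False  # stale or malformed timestamp: deny (limits replay)
    expected = _expected_signature(secret, request["agent_id"], ts, request.get("body", {}))
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected.encode(), str(request.get("signature", "")).encode())


now = 1_700_000_000
request = {"agent_id": "agent-123", "timestamp": now, "body": {"action": "refund", "amount": 40}}
request["signature"] = _expected_signature(TRUSTED_SECRETS["agent-123"], "agent-123", now, request["body"])

accepted = validate_credential(request, now=now)
forged = validate_credential({**request, "body": {"action": "refund", "amount": 4000}}, now=now)
stale = validate_credential(request, now=now + 3600)
```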

Step 4: Permission and Context Checks

Once the agent is authenticated, the system determines what it is allowed to do in the current context. For example, an AI support bot may be granted access to user accounts, while an autonomous payment bot may be permitted to issue refunds within defined limits.
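One way to express these checks is a deny-by-default role policy combined with a context rule, sketched below. The role names, action names, and the 500-unit refund ceiling are hypothetical values chosen to mirror the support-bot and payment-bot example.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical role policy: actions each role may perform; anything absent is denied.
ROLE_PERMISSIONS = {
    "support": {"read_account"},
    "payments": {"read_account", "issue_refund"},
}
# Hypothetical context limit: maximum refund per transaction.
REFUND_LIMIT = 500.0


@dataclass
class Decision:
    allowed: bool
    reason: str


def authorize(role: str, action: str, amount: Optional[float] = None) -> Decision:
    """Check role permissions first, then action-specific context rules."""
    allowed_actions = ROLE_PERMISSIONS.get(role, set())
    if action not in allowed_actions:
        return Decision(False, f"role '{role}' may not perform '{action}'")
    if action == "issue_refund":
        if amount is None or amount <= 0:
            return Decision(False, "refund amount must be positive")
        if amount > REFUND_LIMIT:
            return Decision(False, f"refund {amount} exceeds limit {REFUND_LIMIT}")
    return Decision(True, "ok")


support_read = authorize("support", "read_account")
support_refund = authorize("support", "issue_refund", 50.0)
small_refund = authorize("payments", "issue_refund", 120.0)
large_refund = authorize("payments", "issue_refund", 900.0)
```

Returning a reason alongside the decision makes denials easy to record in the audit trail described in the next step.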

Step 5: Continuous Logging and Monitoring

Every action the AI agent takes is logged. These logs form a clear audit trail that lets organisations track decisions and activity and demonstrate compliance. Continuous monitoring also helps detect abnormal behavior early and establish accountability.
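A simple way to make such an audit trail tamper-evident is hash chaining, where each entry includes the hash of the one before it. The sketch below is a hypothetical in-memory `AuditLog`, not a description of any particular product; a production log would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log in which each entry commits to the previous one (hash chain)."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list = []

    def append(self, agent_id: str, action: str, details: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "agent_id": agent_id,
            "action": action,
            "details": details,
            "at": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash every field except the hash itself, serialized canonically.
        body = {k: v for k, v in record.items() if k != "hash"}
        encoded = json.dumps(body, sort_keys=True, separators=(",", ":")).encode()
        return hashlib.sha256(encoded).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = self.GENESIS
        for record in self.entries:
            if record["prev_hash"] != prev_hash or record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True


log = AuditLog()
log.append("agent-123", "issue_refund", {"order": "A-1001", "amount": 40})
log.append("agent-123", "read_account", {"customer": "C-77"})
intact = log.verify()
log.entries[0]["details"]["amount"] = 4000  # simulate someone rewriting history
tampered_detected = not log.verify()
```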

AI Agent Security and Authentication Best Practices

Authentication and security controls determine how safely AI agents can operate in digital systems. When these controls are weak or absent, even well-designed agents can become entry points for fraud, data misuse, or unauthorized access.

AI agent authentication frequently relies on public key infrastructure (PKI), in which cryptographic key pairs are used to verify authenticity, or on token-based authentication, in which access can be limited and revoked. In higher-risk environments, multi-factor agent authentication adds protection by requiring an agent to pass more than one independent form of verification before it is permitted to operate.
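The token-based approach can be sketched with short-lived, scoped, revocable bearer tokens. `TokenService`, the 15-minute lifetime, and the scope strings below are hypothetical; the sketch only shows why tokens make access easy to limit and revoke.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class TokenRecord:
    agent_id: str
    scopes: frozenset
    expires_at: float
    revoked: bool = False


class TokenService:
    """Hypothetical issuer of short-lived, scoped, revocable agent tokens."""

    def __init__(self, ttl_seconds: int = 900) -> None:
        self.ttl_seconds = ttl_seconds
        self._tokens: dict = {}

    def issue(self, agent_id: str, scopes: set, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        token = secrets.token_urlsafe(32)  # opaque, unguessable bearer token
        self._tokens[token] = TokenRecord(agent_id, frozenset(scopes), now + self.ttl_seconds)
        return token

    def revoke(self, token: str) -> None:
        record = self._tokens.get(token)
        if record is not None:
            record.revoked = True

    def check(self, token: str, scope: str, now: Optional[float] = None) -> Optional[str]:
        """Return the agent_id if the token is valid for this scope, else None."""
        now = time.time() if now is None else now
        record = self._tokens.get(token)
        if record is None or record.revoked or now >= record.expires_at:
            return None  # unknown, revoked, or expired
        if scope not in record.scopes:
            return None  # token does not cover this action
        return record.agent_id


service = TokenService(ttl_seconds=900)
t0 = 1_700_000_000.0
token = service.issue("agent-123", {"refunds:create"}, now=t0)
ok = service.check(token, "refunds:create", now=t0 + 60)
wrong_scope = service.check(token, "accounts:delete", now=t0 + 60)
expired = service.check(token, "refunds:create", now=t0 + 901)
service.revoke(token)
after_revoke = service.check(token, "refunds:create", now=t0 + 60)
```

Short lifetimes limit the damage from a leaked token, and explicit revocation lets a team cut off a misbehaving agent immediately instead of waiting for expiry.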

Good security practice starts with ensuring that only verified AI agents can access sensitive systems. Permissions should map closely to each agent's role, and actions should be limited to what is strictly necessary. All interactions should also be recorded and auditable to ensure transparency and accountability.

These practices reduce real-world risk, especially in sectors such as fintech and e-commerce. For example, a verified payment agent initiates refunds only within specified limits, and audit records ensure that every refund can be traced. The process stays fast and automated without sacrificing security or trust.

Why Skipping AI Agent Digital Identity Verification Creates Risk

A company that uses AI agents without identity verification faces risks beyond technical failures. Attackers may spoof or misuse unverified agents to access sensitive systems, causing data breaches or fraudulent activity.

In financial services, this could mean an attacker using a payment bot to bypass the approval process and request a refund or transfer, resulting in financial and reputational losses.

In customer service, unauthenticated AI agents may cause privacy violations and regulatory non-compliance.

Operational efficiency also suffers, as human teams must step in to correct mistakes or investigate rogue activity.

The lack of verification also undermines accountability, rendering audit trails ineffective and decisions difficult to trace. Over time, this erodes trust among users, partners, and regulators, limiting the scalability and usefulness of AI-driven systems.

How Shufti Supports Secure Automated Workflows

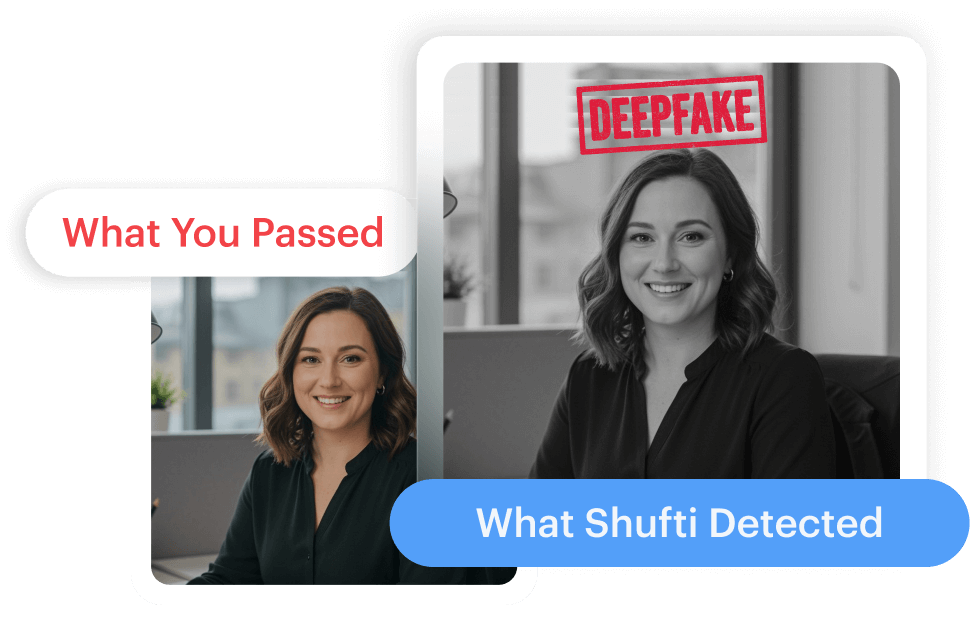

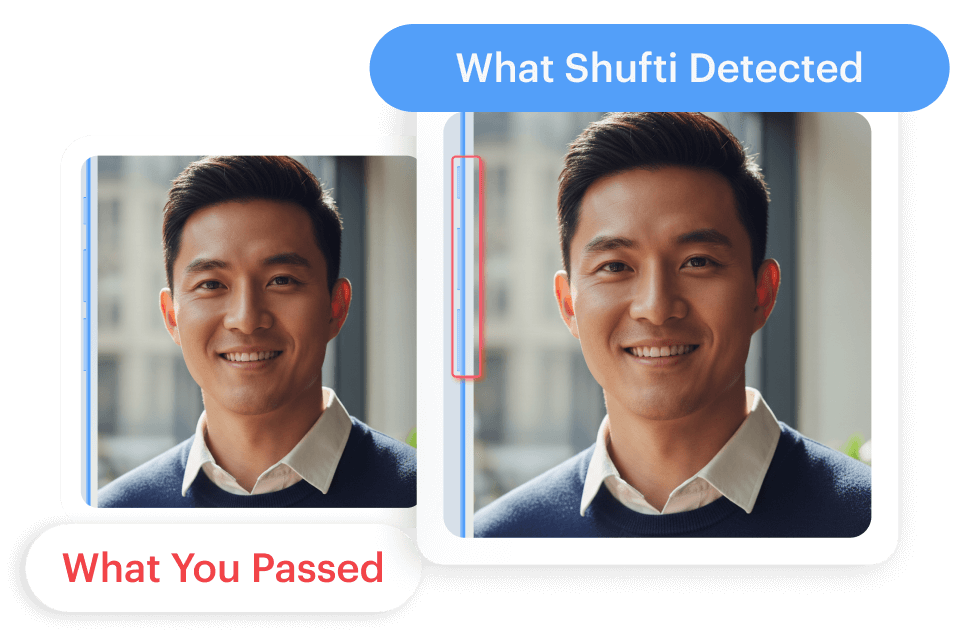

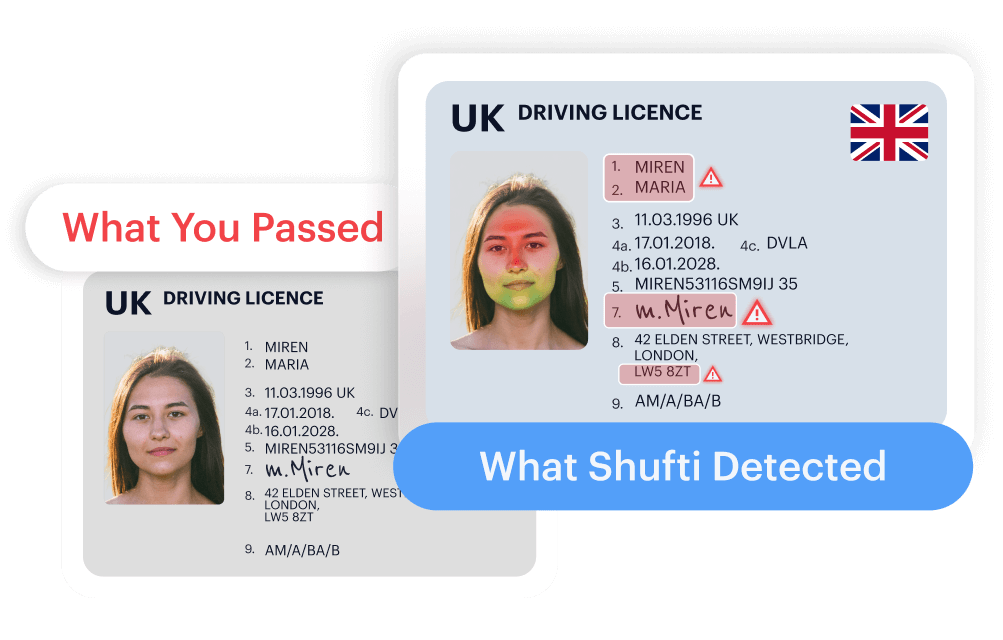

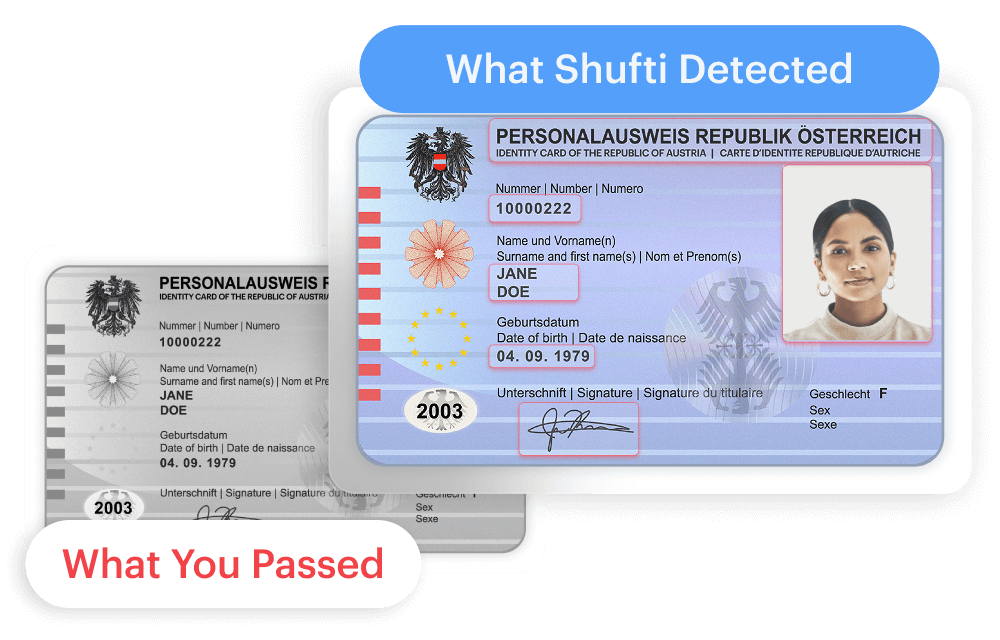

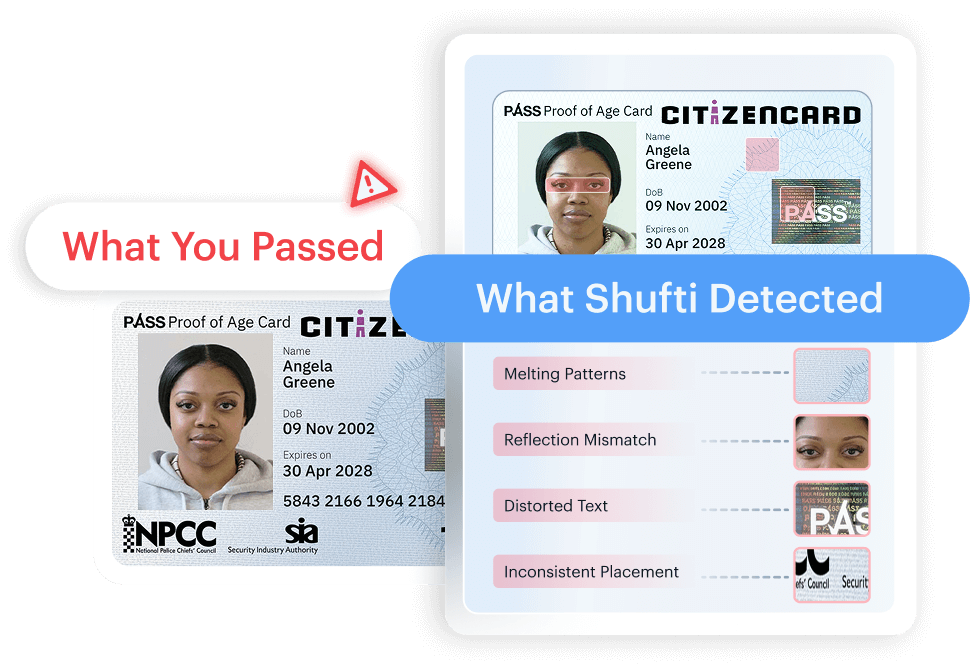

AI agents that can access systems and take actions create a new control gap when accountability and permissions are unclear. Shufti supports digital trust and compliance workflows with identity verification, authentication, and audit-ready controls that help organisations reduce fraud exposure and maintain oversight as automation scales.

Shufti maintains its own core infrastructure for identity verification and authentication, built in-house from scratch. The platform uses these capabilities to deliver solutions tailored to specific use cases and industry needs.

Request a demo to explore how Shufti can support trusted automated workflows.
