EU Pushes 16+ Age Limit on Social Media as Lawmakers Target Digital Addiction and Risky Platform Design

The European Parliament has issued a strong call to ban social media access for children under the age of 16 unless their parents explicitly approve it. The move signals rising urgency around protecting young people from excessive social media use, which can develop into an addiction to these platforms.

Members of the European Parliament (MEPs) passed the non-binding resolution by a large majority, increasing pressure on the European Commission to propose tougher legislation. The push reflects growing alarm across Europe about the mental health impact of unregulated social media exposure on minors.

The Commission is already reviewing Australia’s new social media minimum age framework, which requires platforms to prevent under-16s from holding accounts from 10 December 2025 and imposes heavy penalties for non-compliance.

In a speech earlier this year, Commission President Ursula von der Leyen warned of “algorithms that prey on children’s vulnerabilities” and highlighted how parents feel overwhelmed by “the tsunami of big tech flooding their homes.”

A new expert group on child digital safety is expected to be formed by the end of the year.

The momentum for implementing age verification laws is accelerating across Europe. A report from a French expert, commissioned by President Emmanuel Macron, suggests that smartphone use should be limited until age 13 and access to platforms like TikTok and Instagram should be blocked until age 18.

The resolution, led by Danish MEP Christel Schaldemose, states that society, not just parents, needs to protect children from the addictive design of online platforms.

This framing positions child online safety as a shared responsibility across regulators, platforms, and wider society rather than a question of individual parenting alone. The resolution also recommends disabling features such as infinite scrolling, autoplay videos, push notifications, and reward-based engagement for minors by default.

MEPs pointed out that many of the design features that encourage addiction are central to how social media companies make money. Studies cited in earlier drafts indicated that one in four young people shows problematic smartphone use that resembles addiction.

Despite significant support among MEPs, the proposal still faced pushback in the European Parliament. Eurosceptic MEPs contended that decisions about children’s online access should remain in the hands of member states. At the same time, tensions with the U.S. were rising as the White House urged the EU to loosen its digital regulations, a request that European legislators firmly resisted.

The Digital Services Act already addresses online harms to minors. However, MEPs say that clearer and more specific rules are needed, focused on protecting children from social media’s manipulative design and from new forms of digital exploitation.

The Global Push for Children’s Safety on Social Media

As mentioned above, Australia has begun implementing regulations for children’s safety online, and many countries are now following this path. Australia’s legislation requires platforms to take reasonable steps to prevent children under 16 from holding social media accounts.

France has also enacted Law No. 2024-449 (the SREN law) regulating the digital space, which requires age verification for access to adult content. In addition, several U.S. states, including Utah, Texas, and Arkansas, have passed or proposed age verification laws governing social media accounts for minors.

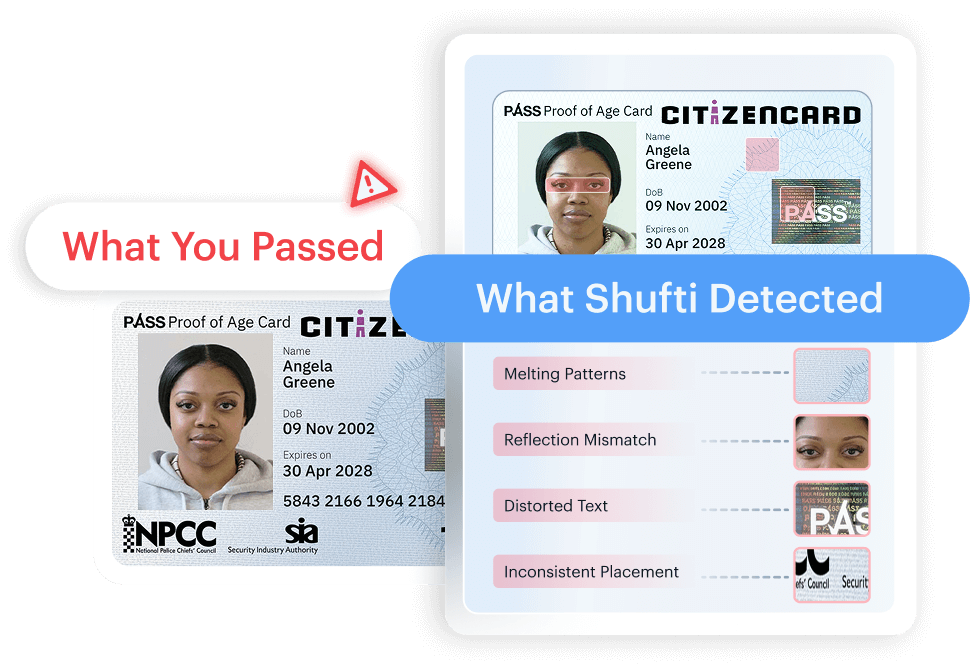

Moreover, the UK’s Online Safety Act 2023 requires tech companies to implement age checks to protect underage users. Together, these efforts reflect a global drive to protect children online and to keep harmful content from reaching them on digital platforms.

These laws collectively push digital platforms towards more robust and privacy-preserving age-assurance frameworks. Therefore, financial institutions and digital businesses need to review how identity verification, consent management, and parental controls are integrated into their onboarding and user-journey design.
