Uncover Your KYC Blind Spots and Deepfakes with Shufti on AWS Marketplace

- 01 The Rise of Deepfake Threats in 2026

- 02 How Deepfakes Create Risks for Leadership & Senior Management?

- 03 What Happens When KYC Blind Spots Go Public?

- 04 Why Your Existing Vendor Can’t Spot Its Own Weaknesses?

- 05 So, What Is The Ideal Solution For These Challenges?

- 06 Inside A Quiet KYC Stress Test On AWS Marketplace

- 07 Four AWS AMIs Built for Today’s IDV Blind Spots

- 08 Audit Historic Onboarding & Your KYC Vendor Before Next Audit

Most institutions now accept that synthetic identities are not only attacking the front door. They already sit inside the existing customer base.

A 2023 Wakefield Research survey of 500 fraud and risk professionals in US financial services and fintech found that around three-quarters believe their organisation has already approved accounts for synthetic customers, and 87 percent say their company has extended credit to those fake customers. Another industry study found that US lenders issued $3.3 billion in credit to synthetic accounts in 2024.

“The pressing question for financial service providers is how many millions have already been disbursed to synthetic identities that will never repay, and how many additional millions they will lose in the coming months if those profiles remain undetected.”

The Rise of Deepfake Threats in 2026

Deepfakes made headlines throughout 2025, and 2026 is set to be even more challenging.

In an interview on July 22, 2025, OpenAI CEO Sam Altman voiced concern that some financial institutions still lack solutions that can stop AI from bypassing authentication, warning that the world may be on the verge of a “fraud crisis”.

A 2024 FinCEN report found that false records (altered, forged, or counterfeit documentation or identification) were the second-most frequently reported identity-related fraud category in 423,000 SARs filed in 2021 alone, totaling $45 billion in suspicious activity. When onboarding new customers, receiving false identification information is one of the major issues financial institutions face today.

The challenge is set to grow: industry research shows a deepfake attempt roughly every 5 minutes in 2024, alongside a 244% year-over-year rise in digital document forgeries.

If your current KYC vendor is not encountering deepfakes, it doesn’t mean fraud has vanished; it is far more likely that the vendor is missing them and that synthetic accounts already sit in your customer base. The threat from deepfakes and synthetic identities has only intensified, because generative AI in 2026 is far more sophisticated and widely available than it was a couple of years ago.

How Deepfakes Create Risks for Leadership & Senior Management?

Deepfakes and altered or forged documents not only cause direct losses to businesses in financial services, telecommunications, and iGaming through financing, subscriber, and multi-accounting fraud; they also create a risk of legal action.

These synthetic accounts can be part of terrorism financing and sophisticated money laundering schemes that move criminal proceeds between accounts and jurisdictions without ever leaving a trail back to their real beneficiaries.

And it’s all happening at a time when the individual accountability of boards of directors, chief compliance officers (CCOs), and MLROs is rising under regimes like the UK’s SMCR, the EU’s AML Package, and the United States’ Bank Secrecy Act. Under these laws, authorities can now directly fine and imprison responsible individuals for any systemic failures and weaknesses that could be exploited for money laundering and terrorism financing through such institutions.

This creates an uncomfortable reality. Fraud and risk teams suspect there are synthetic or otherwise illegitimate accounts on the books. Executives know that current controls, which were designed for yesterday’s threats, can’t beat today’s generative AI, highly scalable bot farms, or AI-generated documents.

Therefore, the question is not whether blind spots exist. The question is how large they are inside the live customer base and how much loss they are causing the business. But publicly admitting such weaknesses carries its own risks.

What Happens When KYC Blind Spots Go Public?

When PayPal’s leadership disclosed in early 2022 that the company had identified and closed 4.5 million illegitimately created accounts, largely linked to incentive abuse, the market reaction was immediate. Reports at the time noted that the share price dropped by roughly 25 percent as investors absorbed the combined impact of downgraded growth targets and the revelation that millions of accounts on the platform were not genuine customers.

That example sits in the background of many board conversations. Admitting that synthetic or fake customers already exist in the portfolio feels safer inside internal committees than on earnings calls or in public risk disclosures. Hence, leadership teams face a dilemma. They need hard evidence of blind spots in historic onboarding and authentication, yet they do not want any testing process to trigger external scrutiny before there is a remediation plan.

Why Your Existing Vendor Can’t Spot Its Own Weaknesses?

The first instinct is often to turn back to the existing Know Your Customer or IDV stack. However, the same systems that allowed synthetic or manipulated identities in past years are unlikely to be the right tools to uncover those failures now.

If existing controls and vendors were effective against deepfakes, AI-created documents, and injection attacks, fraud would not have scaled to the levels that industry research now reports.

This is why many institutions talk about performing a portfolio-wide KYC refresh or a deep audit of past approvals, yet the project keeps slipping down the roadmap because it feels too heavy. In a typical evaluation:

- Finance teams want multi-year pricing comparisons.

- Technology teams plan integration work, sandboxes, and security reviews.

- Legal and privacy teams insist on bespoke contracts and data transfer assessments.

- Product teams worry about disruption to live customer journeys.

A single proof of concept can therefore take months, while synthetic identities and fraud built on AI-manipulated media keep evolving.

So, What Is The Ideal Solution For These Challenges?

The gap between the problem and available solutions is clear. Institutions need a way to stress test existing controls, historic decisions, and live customer inventories with newer AI-based detection, without triggering a year-long vendor replacement programme.

Such an approach would allow fraud, risk, and compliance leaders to answer very specific questions.

- How many approved accounts in a given period would fail a modern liveness, deepfake, or document originality check?

- Which segments of the portfolio carry the highest concentration of potentially synthetic identities?

- Why did existing KYC workflows miss those signals at the time?
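The first two questions come down to simple aggregation once an audit has produced a pass or fail result per account. The sketch below is illustrative only: the `(segment, passed)` input shape and the function name `failure_rate_by_segment` are assumptions made for this example, not part of any Shufti output format.

```python
from collections import Counter


def failure_rate_by_segment(results):
    """Rank portfolio segments by the share of accounts that failed a re-check.

    `results` is an iterable of (segment, passed) pairs, a hypothetical
    shape chosen for this sketch.
    """
    totals, failures = Counter(), Counter()
    for segment, passed in results:
        totals[segment] += 1
        if not passed:
            failures[segment] += 1
    # Failure rate per segment, highest concentration first.
    rates = {segment: failures[segment] / totals[segment] for segment in totals}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


audit_results = [
    ("web", True), ("web", False),
    ("branch", True), ("branch", True),
    ("app", False),
]
for segment, rate in failure_rate_by_segment(audit_results):
    print(f"{segment}: {rate:.0%} of re-checked accounts failed")
```

Ranking by rate rather than raw count keeps small but heavily affected segments visible next to large ones.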

An ideal approach would:

- Run on real historic records, not a small synthetic test set

- Operate inside your business’s own cloud environment, under existing security policies

- Avoid new contracts or data transfers for an initial evaluation

- Leave live onboarding flows untouched until the picture is clear

This is the context in which Shufti is rolling out four focused blind spot audit AMIs on AWS.

Inside A Quiet KYC Stress Test On AWS Marketplace

Shufti’s approach on AWS uses pre-configured Marketplace AMIs to turn heavy vendor evaluations into a quiet stress test of historic onboarding and existing customer inventories.

Instead of wiring APIs into live production journeys, the financial service provider launches one of Shufti’s pre-configured audit engines as an Amazon Machine Image inside its own AWS account and Virtual Private Cloud. The pattern mirrors other AMI-based marketplace products, while every step remains under the institution’s security, identity, and network policies.

Across all four blind spot AMIs, the model stays consistent.

- The engine runs entirely in the organisation’s cloud environment

- No personally identifiable information leaves that environment

- No new integration or custom coding is required for the audit

From an internal process perspective, this structure matters.

- Legal and procurement teams do not need bespoke contracts for an initial evaluation, since usage falls under the existing AWS Marketplace agreement

- Finance teams see consumption on the standard cloud invoice rather than a new vendor billing setup

- Technology teams treat the AMI as a tightly scoped workload inside the VPC rather than a full production integration project

The outcome is a contained, low-friction way for compliance, fraud, and technology stakeholders to run a portfolio-level stress test of past approvals. Modern liveness, deepfake, and document originality checks can be applied to historic records off-flow, without triggering a full vendor replacement cycle or drawing attention to the exercise outside the organisation.

Findings from the audit create a foundation for structured action rather than reactive crisis management. Results can be shared with fraud teams, model governance, internal audit, and the board to shape configuration changes with existing vendors, targeted remediation of high-risk segments, or additional checks in areas more vulnerable to fraud. Vendor strategy decisions then follow evidence rather than marketing claims.

Four AWS AMIs Built for Today’s IDV Blind Spots

For the initial AWS release, Shufti is focusing on four specific blind-spot audits. These AMIs target the areas where generative AI and modern fraud tactics are moving fastest.

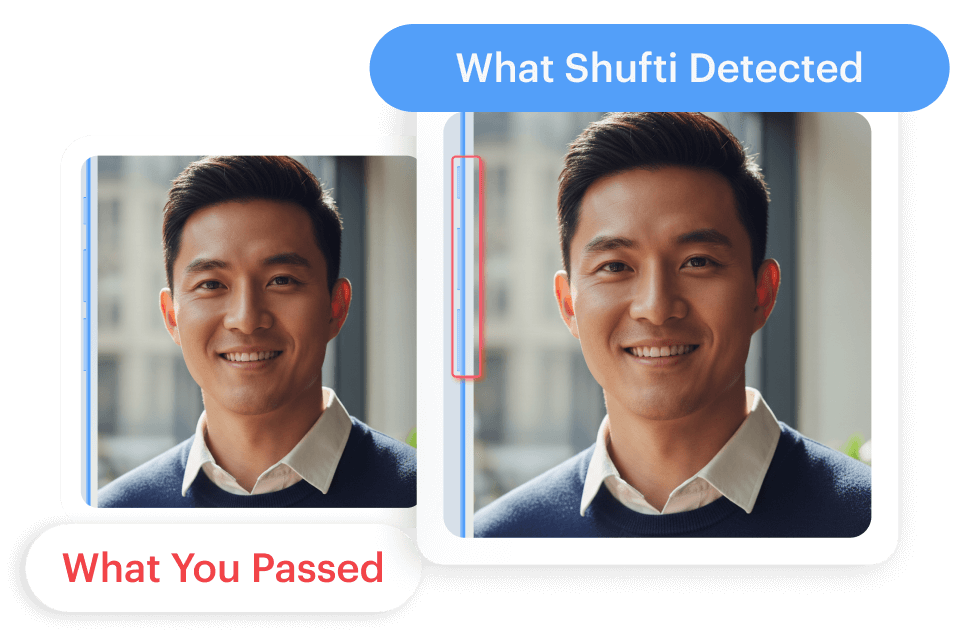

1. Liveness Detection AMI (Spoofed and Injected Sessions)

Shufti’s liveness blind-spot audit targets the gap between older selfie checks and modern spoofing. The dedicated AMI is built to answer one question: was a real, present human in front of the camera during that historic session?

Inside the organisation’s cloud, the engine:

- Re-checks previously approved liveness sessions.

- Finds replays, virtual camera feeds, injected streams, and AI-generated faces that legacy liveness missed.

- Prioritises journeys where liveness drives trust, such as account recovery, high-value payouts, remote onboarding, or new device binding.

Industry reports and Shufti’s own research show deepfake and injection-style attacks hitting remote onboarding with growing frequency, while 46 percent of financial institutions report deepfake-related fraud bypassing standard identity verification. This AMI gives a measurable view of how many sessions inside last year’s onboarding never involved a truly live participant.

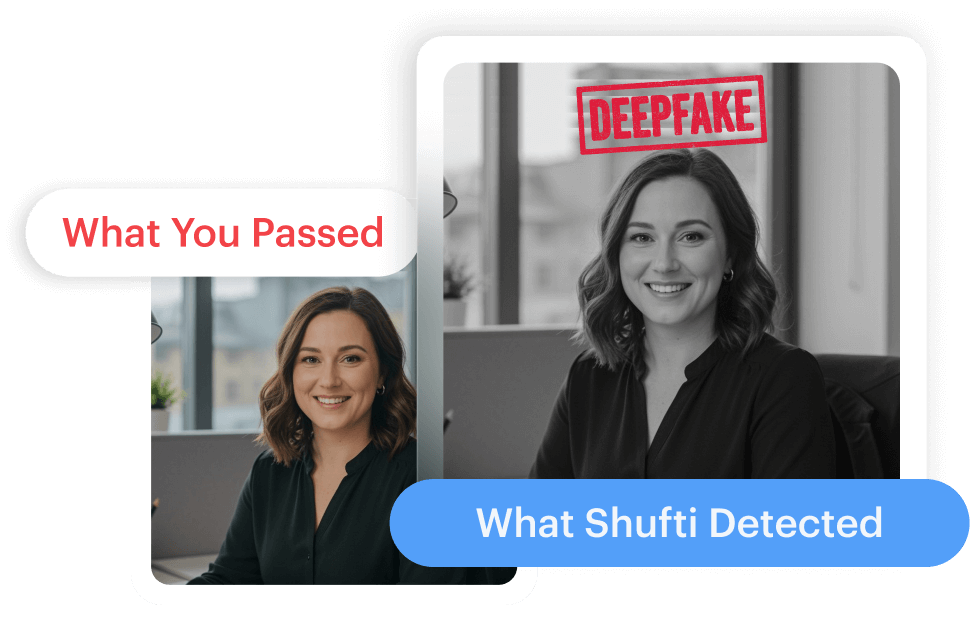

2. Deepfake Detection AMI (Manipulated Faces in KYC)

The deepfake blind-spot detection AMI addresses the growing volume of AI-generated and face-swapped images that pass basic ID checks. Shufti’s messaging on this product is clear. Deepfake fraud is exploding, and legacy ID checks that were never trained on AI faces are leaving gaps.

The audit engine:

- Re-scans previously approved faces from KYC and authentication flows.

- Detects deepfakes, face swaps, and other AI-generated faces that slipped past the existing stack.

- Operates entirely in the institution’s cloud, with customer images never leaving the environment.

Global estimates place account takeover fraud in the billions, while GenAI-driven fraud losses are forecast to grow sharply over the next few years. This AMI turns that trend into a local number that executives can see: how many accounts in the current base would fail a modern deepfake detector.

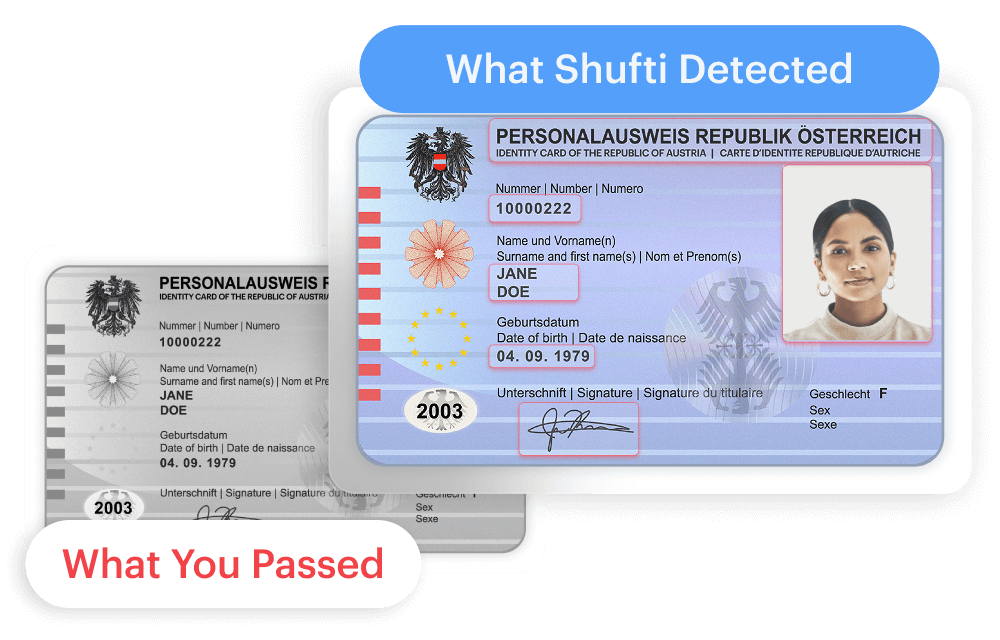

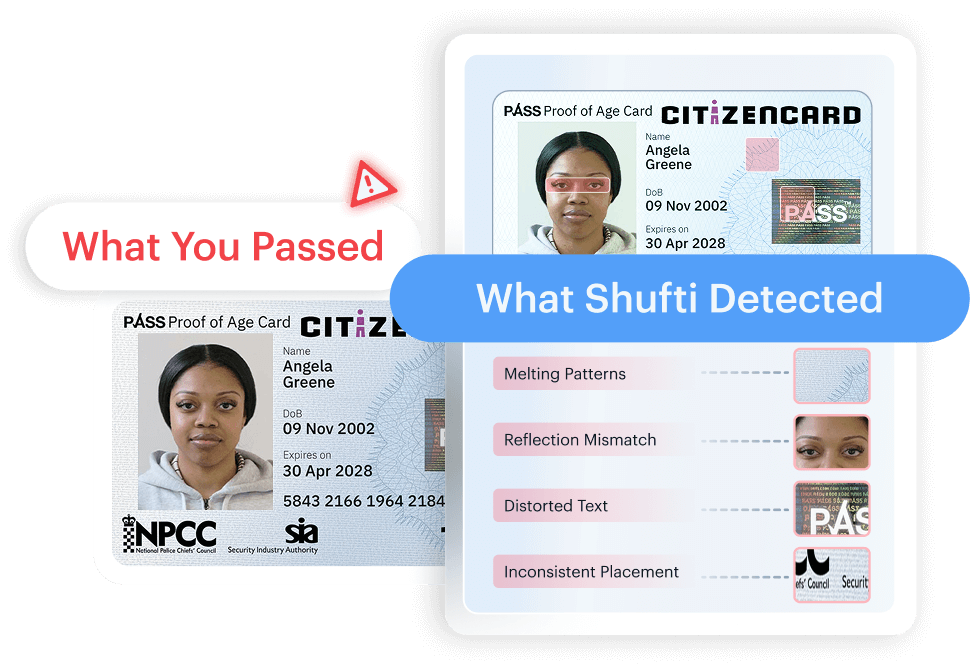

3. Document Deepfake AMI (AI-Generated IDs)

AI tools now generate IDs with pixel-perfect fonts, layouts, and hologram-style effects that pass template and OCR checks while remaining entirely synthetic. Industry studies show that synthetic identities built on such documents have already exposed lenders to billions in potential losses.

Shufti’s document deepfake audit AMI is designed for this specific problem. Inside the institution’s AWS account, the engine:

- Treats each ID as a forensic image rather than a text container.

- Analyses fonts, layout, emblems, and visual security marks to spot computer-drawn text, cloned seals, and missing features.

- Validates MRZ lines, barcodes, and other machine-readable regions against strict format and checksum logic, then cross-checks them with printed fields.
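The checksum part of the last step can be illustrated with the public ICAO Doc 9303 check-digit rule for machine-readable zones: each character is mapped to a number (digits keep their value, A to Z map to 10 to 35, the filler `<` is 0), multiplied by the repeating weights 7, 3, 1, and the sum is taken modulo 10. The sketch below is a minimal illustration of that public rule plus a naive comparison against a printed field; it is not Shufti’s implementation, and the helper names `mrz_check_digit` and `field_is_consistent` are hypothetical.

```python
import string

# ICAO 9303 character values: digits map to themselves, A-Z to 10-35, filler '<' to 0.
_VALUES = {ch: i for i, ch in enumerate(string.digits + string.ascii_uppercase)}
_VALUES["<"] = 0
_WEIGHTS = (7, 3, 1)


def mrz_check_digit(field: str) -> int:
    """Compute the ICAO 9303 check digit for one MRZ field.

    Raises KeyError if the field contains a character outside the MRZ alphabet.
    """
    return sum(_VALUES[ch] * _WEIGHTS[i % 3] for i, ch in enumerate(field)) % 10


def field_is_consistent(field: str, check_digit: str, printed_value: str) -> bool:
    """True when the checksum holds and the MRZ field matches the printed field.

    The printed value is normalised naively (upper-cased, spaces removed);
    real documents need format-specific normalisation.
    """
    checksum_ok = check_digit.isdigit() and mrz_check_digit(field) == int(check_digit)
    matches_print = field.rstrip("<") == printed_value.upper().replace(" ", "")
    return checksum_ok and matches_print


# Document number from the ICAO 9303 specimen passport; its check digit is 6.
print(mrz_check_digit("L898902C3"))
print(field_is_consistent("L898902C3", "6", "L898902C3"))
```

A document whose MRZ checksum is valid but whose printed number differs from the MRZ value is a typical sign that one of the two regions was edited.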

The outcome is a clear list of AI-generated or fabricated IDs that current identity verification systems treated as genuine, along with patterns by issuer, geography, or channel that inform policy and vendor changes.

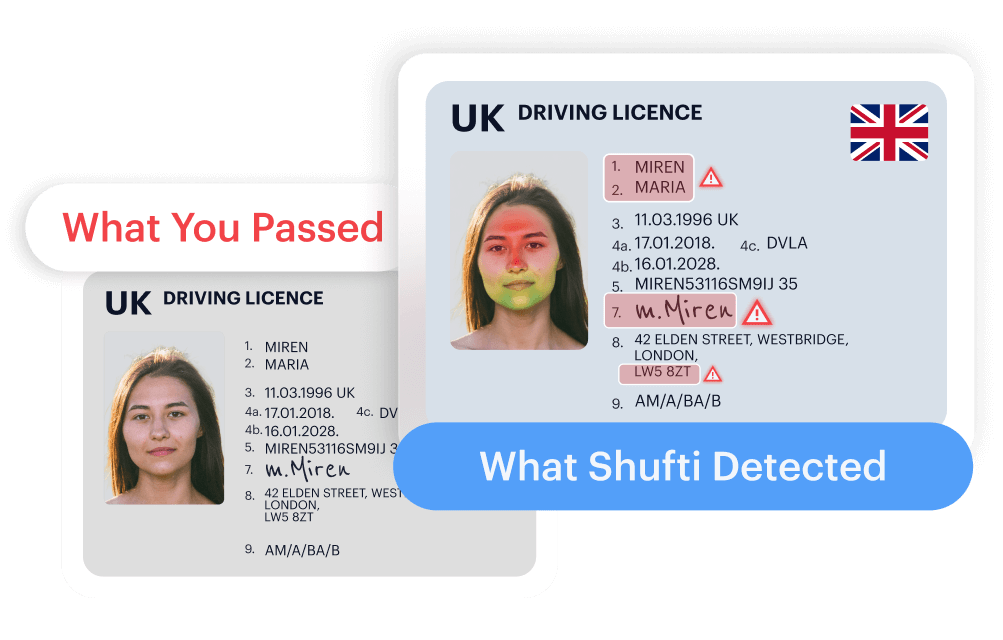

4. Document Originality AMI (Reuse and Edited Fields)

Fraud does not always rely on fully synthetic documents. In many cases, the same high-quality ID image is reused across multiple accounts while text fields change quietly, or faces and signatures are composited onto otherwise genuine backgrounds.

Building on the same document engine, the document originality blind-spot and tampering audit AMI helps institutions:

- Identify reused base images with different personal details.

- Detect blended portraits or pasted regions that alter identity data while preserving overall layout.

- Quantify where document-originality policies and current vendors allowed edited or reused IDs into production.
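One common way to approach the first item is to compare perceptual hashes of the document images and then look for near-identical images attached to different personal details. The sketch below assumes each record already carries a 64-bit perceptual hash in a hypothetical `image_hash` field; the record layout, the function names, and the distance threshold are all assumptions for illustration, and this is not a description of how Shufti’s engine works.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two integer hashes."""
    return bin(a ^ b).count("1")


def find_reused_documents(records, max_distance=4):
    """Group records whose image hashes are near-identical and flag groups
    that carry more than one distinct identity.

    Each record is a dict with the hypothetical keys
    account_id, image_hash (int), name, and dob.
    """
    groups = []  # each group collects records close to the group's first record
    for record in records:
        for group in groups:
            if hamming(record["image_hash"], group[0]["image_hash"]) <= max_distance:
                group.append(record)
                break
        else:
            groups.append([record])

    flagged = []
    for group in groups:
        identities = {(r["name"], r["dob"]) for r in group}
        # Same base image, different personal details: candidate reuse.
        if len(group) > 1 and len(identities) > 1:
            flagged.append([r["account_id"] for r in group])
    return flagged


records = [
    {"account_id": "A1", "image_hash": 0xFFFF0000FFFF0000, "name": "Ann Lee", "dob": "1990-01-01"},
    {"account_id": "A2", "image_hash": 0xFFFF0000FFFF0001, "name": "Bob Roy", "dob": "1985-05-05"},
    {"account_id": "A3", "image_hash": 0x0123456789ABCDEF, "name": "Cy Doe", "dob": "1970-07-07"},
]
print(find_reused_documents(records))
```

The greedy grouping is quadratic in the worst case, so a portfolio-scale run would need an index over the hashes; the sketch only shows the matching logic.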

Combined with the deepfake document audit, this AMI draws a line around the document blind spots that matter most for regulators and auditors.

Audit Historic Onboarding & Your KYC Vendor Before Next Audit

Boards, regulators, and internal audit functions increasingly suspect that synthetic identities, liveness spoofs, and AI-generated document fraud are already present in active customer portfolios. Financial service providers that can point to a documented blind-spot audit of the identity data in their existing customer base stand in a stronger position than those that rely on assumptions, especially if a later investigation uncovers fraud in past approvals.

Shufti’s AWS Marketplace AMIs are designed for exactly this kind of contained stress test. Institutions can deploy an audit engine inside their own cloud and expand usage through standard AWS billing once value is proven. No bespoke contracts, no new integration into live journeys, and no external data transfers are needed for an initial evaluation.

Prevent millions of dollars in fraud losses and bad debt from extending credit to fake users. Request a tailored AWS deployment demo to identify synthetic customers already in your portfolio.
