What Are Deepfakes? A Comprehensive Guide to Deepfake Detection

- 01 What Is a Deepfake? Understanding AI-Generated Videos, Images, and Audio

- 02 How Deepfakes Work: From GANs to Diffusion Models?

- 03 Evolution of Deepfake Technology

- 04 Deepfakes Risk And How They Reach Verification Systems

- 05 The Cost of Deepfake Fraud

- 06 How to Detect and Combat Deepfakes: Deepfake Detection Strategies?

- 07 Are Deepfakes Illegal? Legal and Regulatory Considerations

- 08 The Future of Deepfake Detection: Challenges and Opportunities

- 09 How Shufti Helps Combat Deepfake Threats?

Deepfakes are no longer a fringe internet trend or a social media novelty. They have become a serious online threat, directly affecting identity verification, fraud prevention, brand trust, and regulatory compliance.

Deepfakes are undermining the credibility of online interactions more broadly, whether through doctored political speeches or AI-generated videos designed to slip past onboarding checks. With advances in artificial intelligence, even trained teams struggle to distinguish between real and synthetic media.

This guide explains what deepfakes are, how they work, why they are dangerous, and how modern deepfake detection technologies help organizations protect their platforms, customers, and reputations.

What Is a Deepfake? Understanding AI-Generated Videos, Images, and Audio

A deepfake is synthetic media generated using artificial intelligence to create or manipulate realistic-looking videos, images, or audio that depict events or actions that did not occur. The name combines deep learning and fake, referring to how AI techniques are applied to create these fabrications.

In contrast to traditional photo or video editing, deepfakes are generated by machine learning models trained on large datasets of a person’s facial expressions, movements, or voice. Once trained, these models can convincingly mimic the appearance, speech patterns, and mannerisms of that individual.

Deepfakes commonly appear in three forms:

- Video deepfakes occur when someone’s face or behavior is digitally modified.

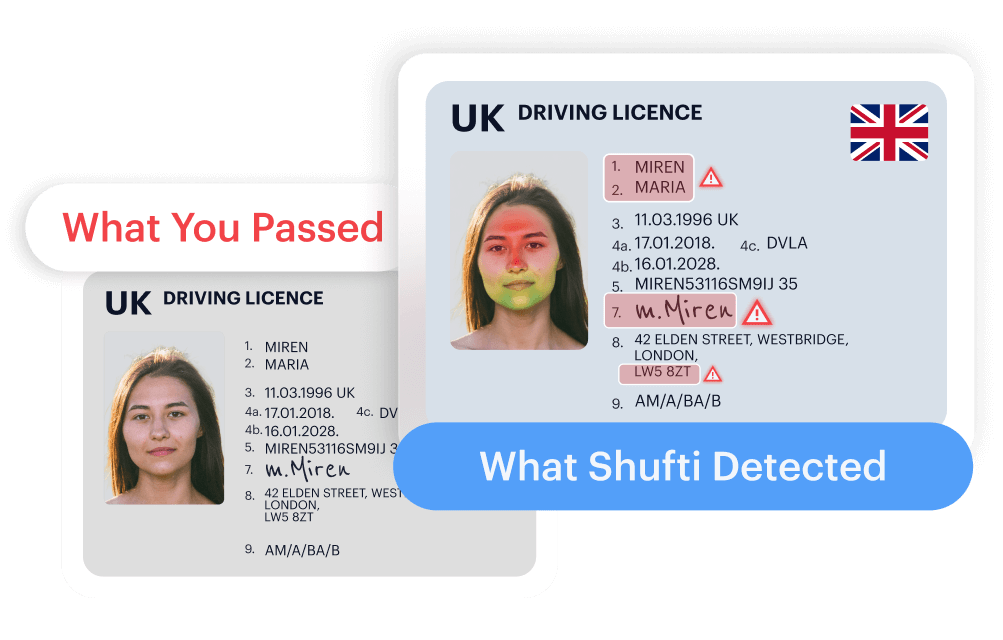

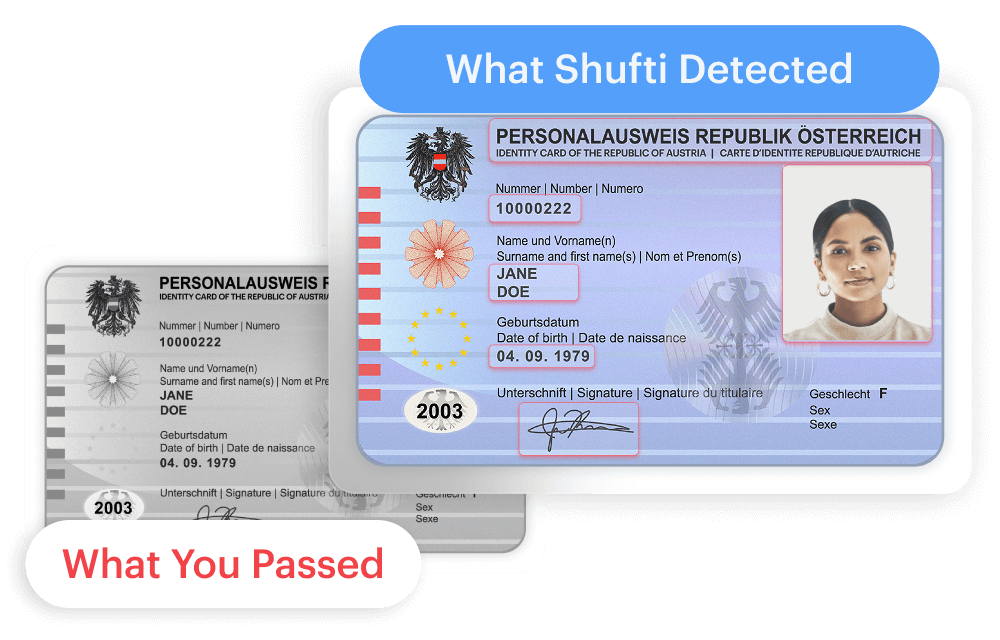

- Image deepfakes are frequently applied to counterfeiting profile photos or identity documents.

- Audio deepfakes, in which voices are copied to sound like that of the genuine person.

Modern deepfakes are especially dangerous for digital identity verification, as they spoof visual and biometric cues used by identity verification systems.

How Deepfakes Work: From GANs to Diffusion Models?

Deepfakes are created using complex deep learning models. In the past, most deepfakes were generated using Generative Adversarial Networks (GANs).

A Generative Adversarial Network (GAN) has two competing components: the Generator (G), which generates fake data, and the Discriminator (D), which distinguishes real data from the fake data generated by the Generator.
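The competition between the two components can be made concrete with their loss functions. The following is a minimal sketch, not a training loop: it assumes the Discriminator outputs a probability that a sample is real, and it uses the common binary cross-entropy formulation with the non-saturating Generator loss. The function names are illustrative and do not come from any particular library.

```python
import math
from typing import Sequence


def bce(prediction: float, target: float) -> float:
    """Binary cross-entropy for a single probability in (0, 1)."""
    eps = 1e-12  # clamp to avoid log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """D is rewarded for scoring real samples near 1 and fakes near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """G is rewarded when D scores its fakes near 1 (non-saturating form)."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# D's scores on a batch of real samples and a batch of generated samples.
confident_d = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
fooled_d = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
```

In this toy example `confident_d` is lower than `fooled_d`: the Discriminator's loss rises as the Generator's fakes become harder to tell apart, which is the pressure that drives both sides to improve.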

Recent deepfakes use advanced GAN-based techniques to track facial features frame by frame. In some cases, they can impersonate voices using limited audio samples and replicate subtle behaviors such as blinking and small facial expressions. This makes deepfakes a serious threat to KYC, remote onboarding, and biometric verification systems.

The industry is now shifting beyond GANs. Modern deepfakes often use Diffusion Models, especially Latent Diffusion Models. These models are trained by progressively adding noise to real data and learning to reverse that process; at generation time, they remove noise step by step to produce stable, photorealistic outputs. In some use cases, diffusion-based systems offer improved stability and scalability compared to earlier GAN-based approaches.
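The noise-adding half of a diffusion model is simple enough to sketch directly. The example below assumes a linear noise schedule with start and end values that are commonly used in the literature; both are assumptions here, not values from this guide. It operates on a toy list of pixel values rather than the compressed latent space a Latent Diffusion Model would use, and it omits the learned denoising network entirely.

```python
import math
import random


def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    signal = math.sqrt(alpha_bar)
    sigma = math.sqrt(1.0 - alpha_bar)
    return [signal * value + sigma * rng.gauss(0.0, 1.0) for value in x0]


rng = random.Random(0)
pixels = [0.2, 0.5, 0.9]  # toy "image" of three pixel values
alpha_bars = alpha_bar_schedule(1000)

slightly_noisy = add_noise(pixels, alpha_bars[10], rng)      # mostly signal
almost_pure_noise = add_noise(pixels, alpha_bars[-1], rng)   # mostly noise
```

Because `alpha_bars` shrinks toward zero, later steps keep almost none of the original signal. Generation runs the other way: a trained network starts from noise and estimates, step by step, what to remove.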

Evolution of Deepfake Technology

Deepfake technology has progressed rapidly over the past decade. What once required advanced technical skills and specialized software and hardware is now available through open-source tools and consumer-grade applications.

The first deepfakes were relatively easy to identify due to visual artifacts and unnatural motion. Today, AI can create media far more quickly and cheaply, and with greater realism. Social media platforms have accelerated this development by supplying both training data and channels for distributing synthetic content.

With rising accessibility, deepfake attacks are no longer limited to high-profile targets. Deepfake-enabled fraud now affects businesses, financial institutions, marketplaces, and digital platforms.

Deepfakes Risk And How They Reach Verification Systems

Although synthetic media has legitimate uses in entertainment and education, its abuse poses serious dangers. Misinformation, identity theft, financial fraud, and invasion of privacy are among the growing misuses of deepfakes.

Deepfake material that uses a person’s likeness without consent has become a pressing ethical and legal issue. At the organizational level, deepfakes undermine trust in online communication and expose systems to large-scale manipulation.

Political Deepfakes and Information Manipulation

Deepfakes can be used to manipulate public opinion, misrepresent facts, and disrupt or sabotage democratic processes. Fabricated speeches or videos can go viral online, sometimes faster than fact-checking systems can respond.

Even after a deepfake is exposed, the damage to public trust can be lasting, leaving people unsure which information to believe.

Deepfake Fraud and Identity-Based Attacks

Deepfakes are also becoming more common in impersonation-based digital identity fraud. AI-generated audio has already been used to impersonate executives and authorize fraudulent transactions. Video deepfakes are now being used to probe onboarding workflows and biometric verification systems. When deepfakes are combined with social engineering, basic security controls fail without sophisticated detection tools.

In the context of identity verification, deepfakes are typically used in two ways:

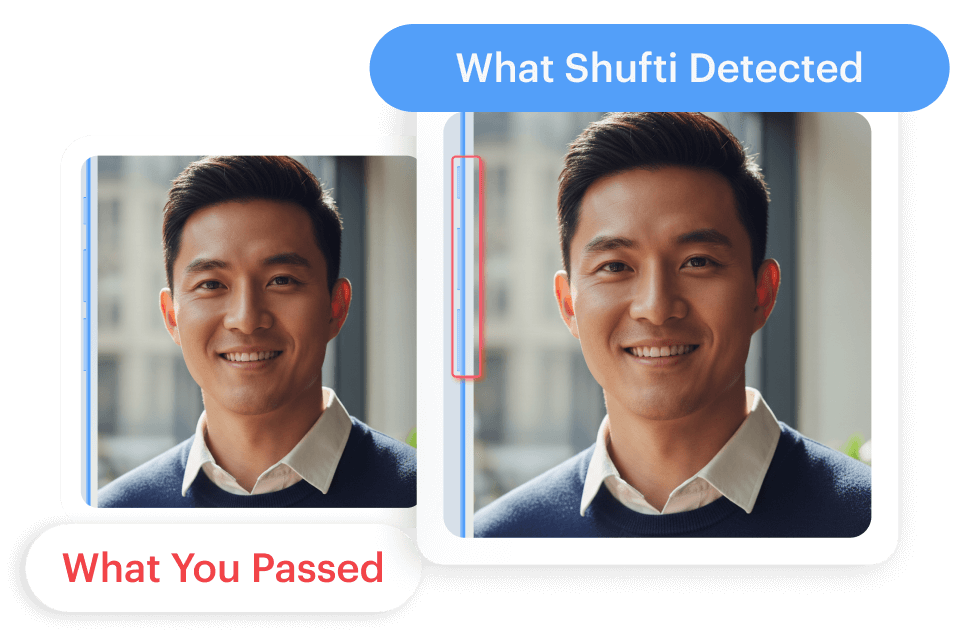

- Presentation attacks: a real-time spoof shown to the camera, such as a screen replay, mask, or printed face.

- Injection attacks: synthetic media fed directly into the verification stream, which can be repeated multiple times to bypass controls.
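Because injected media can be resubmitted many times, one narrow control is to notice when the exact same payload arrives again. The sketch below is a hypothetical illustration of that idea using a SHA-256 digest; the `ReplayGuard` class is an assumed name, not part of any product or library. It only catches byte-for-byte repeats, so re-encoded or slightly altered media would pass, and it is no substitute for liveness or injection detection.

```python
import hashlib


class ReplayGuard:
    """Remembers digests of submitted media and flags exact repeats."""

    def __init__(self):
        self._seen = set()

    def is_replay(self, media_bytes: bytes) -> bool:
        """Return True if these exact bytes were submitted before."""
        digest = hashlib.sha256(media_bytes).hexdigest()
        if digest in self._seen:
            return True
        self._seen.add(digest)
        return False


guard = ReplayGuard()
first_attempt = guard.is_replay(b"frame-data-from-session-1")
second_attempt = guard.is_replay(b"frame-data-from-session-1")
```

Here `first_attempt` is `False` and `second_attempt` is `True`, since the second submission carries identical bytes. In practice the digest store would need expiry and persistence, which this sketch leaves out.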

These attack vectors highlight why advanced detection and passive liveness technologies are essential for Know Your Customer (KYC) and biometric verification systems.

The Cost of Deepfake Fraud

Deepfake attacks impose both short-term and long-term costs on organizations.

Reputational Damage

A single persuasive deepfake is enough to cause lasting reputational damage to a person or organization. Fabricated videos or photos depicting improper conduct can trigger public backlash, regulatory scrutiny, and customer loss, even if the content is later disproved.

Financial and Operational Losses

Deepfake fraud causes direct financial losses through fraudulent wire transfers, compromised onboarding systems, and similar attacks. After an incident, organizations may also face regulatory fines, legal claims, and higher compliance costs.

When deepfakes slip through onboarding, costs rise through fraud losses, investigations, and remediation.

How to Detect and Combat Deepfakes: Deepfake Detection Strategies?

Deepfakes are difficult to detect, but several measures can reduce the risk.

Basic Indicators of Synthetic Media

Some deepfakes still exhibit subtle anomalies, including:

- Abnormal facial movements or blinking.

- Poor synchronization between audio and video.

- Inconsistent lighting, shadows, or skin tone.

These cues remain useful first indicators, but they are becoming less reliable as deepfake technology improves.
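As an illustration of how one of these cues could be turned into an automated check, the sketch below flags an unusual blink rate. It assumes a separate, hypothetical face-tracking step has already produced a per-frame eye-openness score between 0 and 1. The closed-eye threshold and the acceptable blinks-per-minute range are illustrative placeholders, not validated values.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count open-to-closed transitions in a per-frame openness series."""
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks


def blink_rate_is_suspicious(eye_openness, fps,
                             min_per_minute=4.0, max_per_minute=40.0):
    """Flag clips whose blink rate falls outside an assumed normal range."""
    minutes = len(eye_openness) / fps / 60.0
    if minutes == 0:
        return False  # empty clip: nothing to judge
    rate = count_blinks(eye_openness) / minutes
    return rate < min_per_minute or rate > max_per_minute


# A 30-second clip at 10 frames per second in which the eyes never close.
unblinking_clip = [0.3] * 300
flagged = blink_rate_is_suspicious(unblinking_clip, fps=10)
```

A heuristic like this is cheap to run, but modern generators reproduce blinking well, so it should be treated as one weak signal among many.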

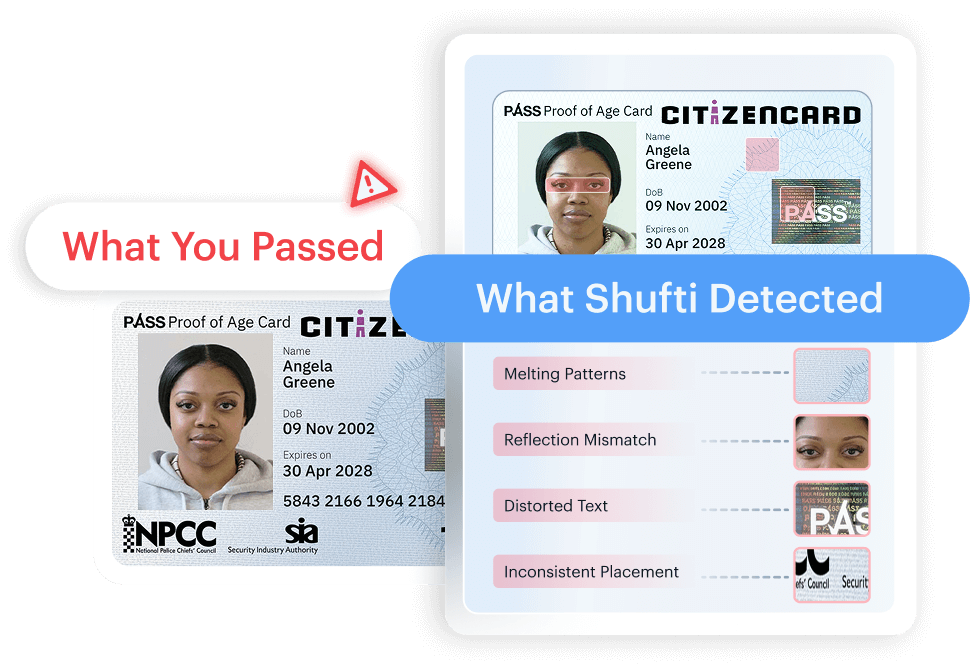

Advanced Deepfake Detection Technologies

Modern deepfake detection technologies rely on AI systems trained on large volumes of fake and authentic content. These systems examine patterns that are not visible to the human eye, such as pixel-level anomalies, temporal inconsistencies, and biometric irregularities.

Advanced detection methods include:

- AI-based deepfake detection algorithms

- Liveness detection with facial recognition

- Challenge-response checks to verify that the interaction is happening in real time

Liveness detection is especially useful in onboarding and authentication, as it significantly reduces the risk that the system is interacting with a recording or a fake video rather than a live individual.
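The challenge-response idea can be sketched as an unpredictable prompt with a short lifetime. The example below is a minimal illustration under stated assumptions: the prompt names in `ACTIONS` are hypothetical, and `observed_action` is assumed to come from a separate vision model that is not shown. Real systems would also bind the challenge to a session and protect it in transit.

```python
import secrets
import time

# Hypothetical prompts a user could be asked to perform on camera.
ACTIONS = ("turn_left", "turn_right", "blink_twice", "smile")


def issue_challenge(ttl_seconds=10.0, now=None):
    """Create a random, short-lived challenge for the current session."""
    issued_at = time.monotonic() if now is None else now
    return {
        "nonce": secrets.token_hex(16),
        "action": secrets.choice(ACTIONS),
        "expires_at": issued_at + ttl_seconds,
    }


def verify_response(challenge, nonce, observed_action, now=None):
    """Accept only a timely response to this exact challenge."""
    current = time.monotonic() if now is None else now
    if current > challenge["expires_at"]:
        return False  # too slow: could be pre-recorded or rendered offline
    if not secrets.compare_digest(nonce, challenge["nonce"]):
        return False  # response belongs to a different challenge
    return observed_action == challenge["action"]


challenge = issue_challenge(ttl_seconds=10.0, now=100.0)
on_time = verify_response(challenge, challenge["nonce"], challenge["action"], now=105.0)
too_late = verify_response(challenge, challenge["nonce"], challenge["action"], now=111.0)
```

Here `on_time` is `True` and `too_late` is `False`. The random action and the expiry together make it harder to answer with a clip prepared in advance.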

Are Deepfakes Illegal? Legal and Regulatory Considerations

The legality of deepfakes depends mainly on their purpose and how they are used. While synthetic media can be legal for entertainment or satire, using it for malicious purposes such as fraud, impersonation, defamation, or identity theft is increasingly addressed by existing laws.

Regulators around the world are working on clearer rules to prevent the misuse of deepfakes, especially related to financial crime, consumer protection, and data privacy. However, enforcing these rules can be tricky because of differences in laws across countries and the global nature of online platforms.

In regulated industries, using deepfake detection is becoming a key part of compliance rather than just a technical upgrade.

The Future of Deepfake Detection: Challenges and Opportunities

Detecting deepfakes will become harder as AI-generated content improves. However, the same technologies that enable deepfakes are also enabling more effective detection systems.

Machine learning algorithms trained on a wide variety of data can continually learn new manipulation techniques, making automated detection far more efficient than human inspection.

Companies that invest early in deepfake detection research will be better positioned to preserve trust, protect users, and meet regulatory expectations in an increasingly synthetic online world.

How Shufti Helps Combat Deepfake Threats?

As synthetic media becomes harder to distinguish from real interactions, maintaining confidence in digital identities is becoming a regulatory and operational priority.

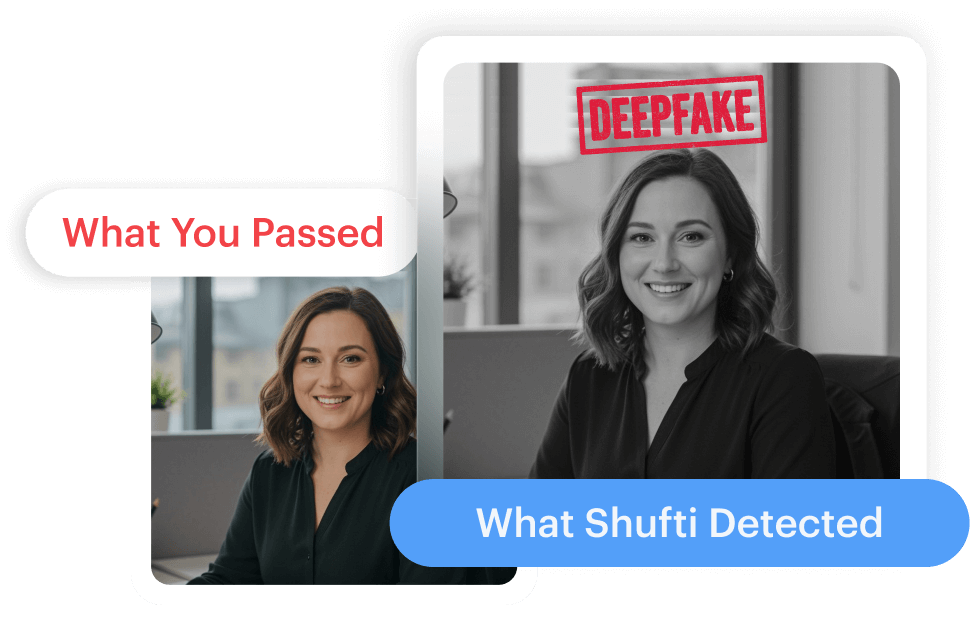

Shufti helps organizations reinforce identity assurance during remote onboarding and authentication through AI-based deepfake detection and liveness controls.

Request a demo to see how Shufti supports secure and compliant digital identity verification.
