How Much of What You’ve Verified Is Actually Real?

Shufti’s Deepfake Blindspot Audit now runs fully inside your AWS environment, auditing historical KYC records for manipulation signals without moving sensitive data out of your environment.

Did You Know Deepfake Detection Is Now an Arms Race?

Deepfakes do not stand still. Threat actors test your defenses and come back smarter.

Shufti runs inside your AWS environment so you can fight back where it matters most. Detect manipulation in real-world conditions, move faster as threats evolve, and keep sensitive data inside your cloud.


Runs entirely in your cloud

No PII ever leaves

No integration or coding

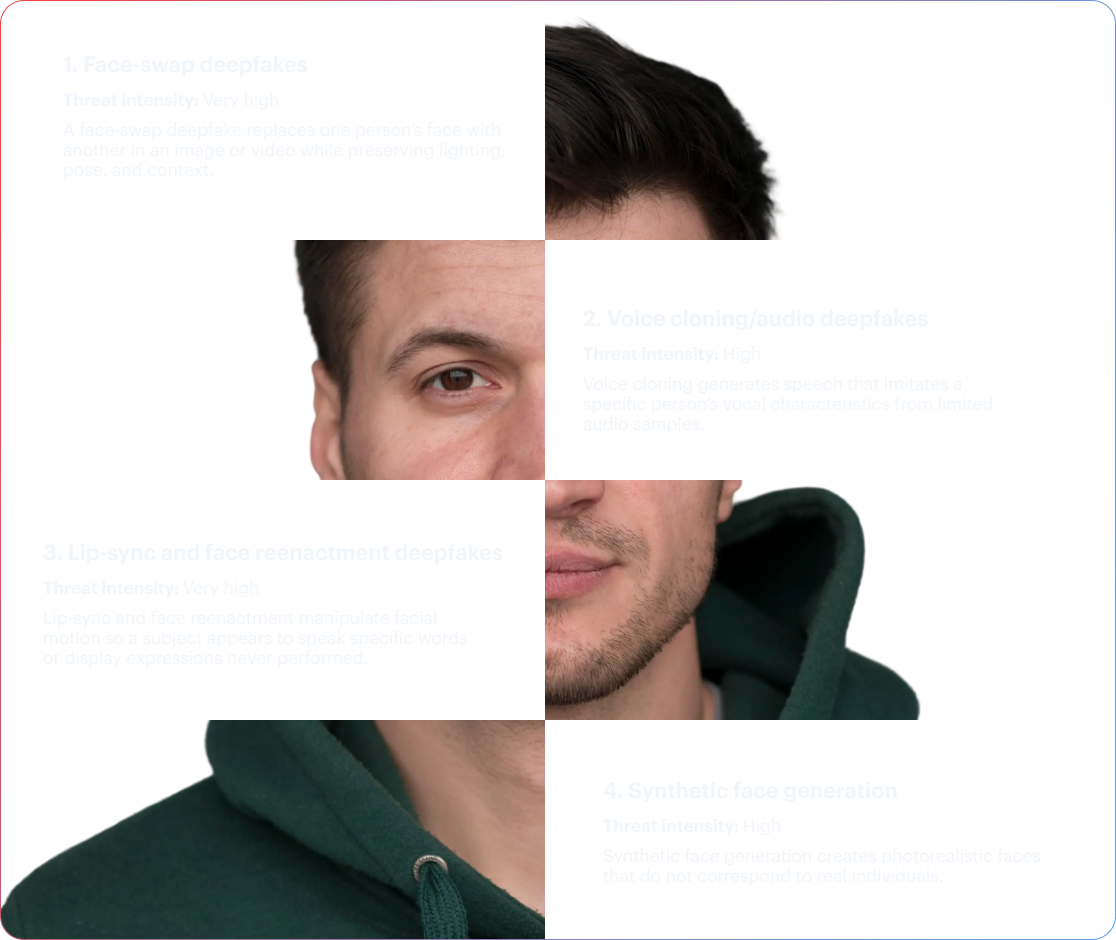

Synthetic Media Is Not Dangerous Until It Becomes a Deepfake

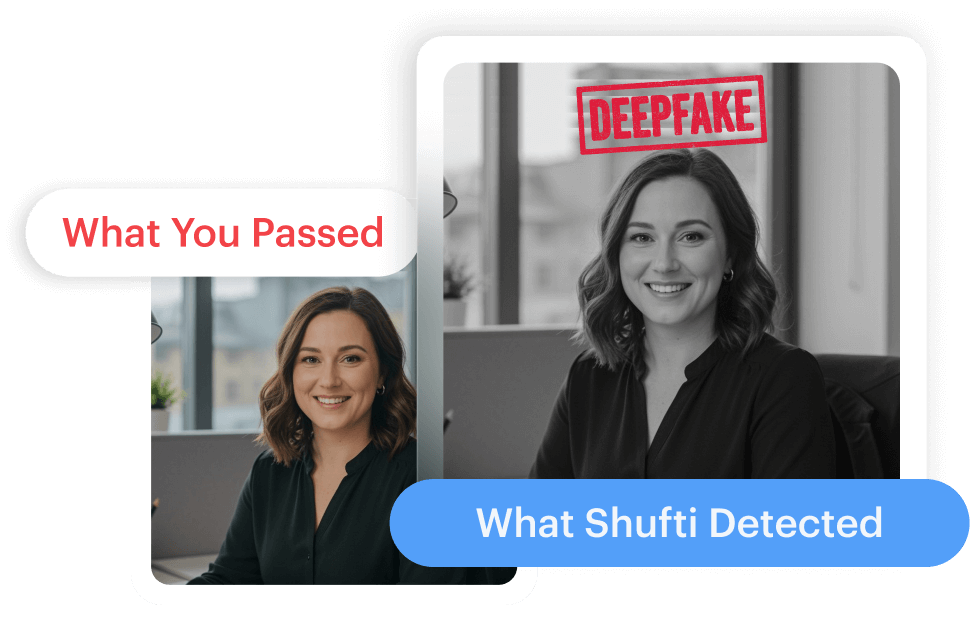

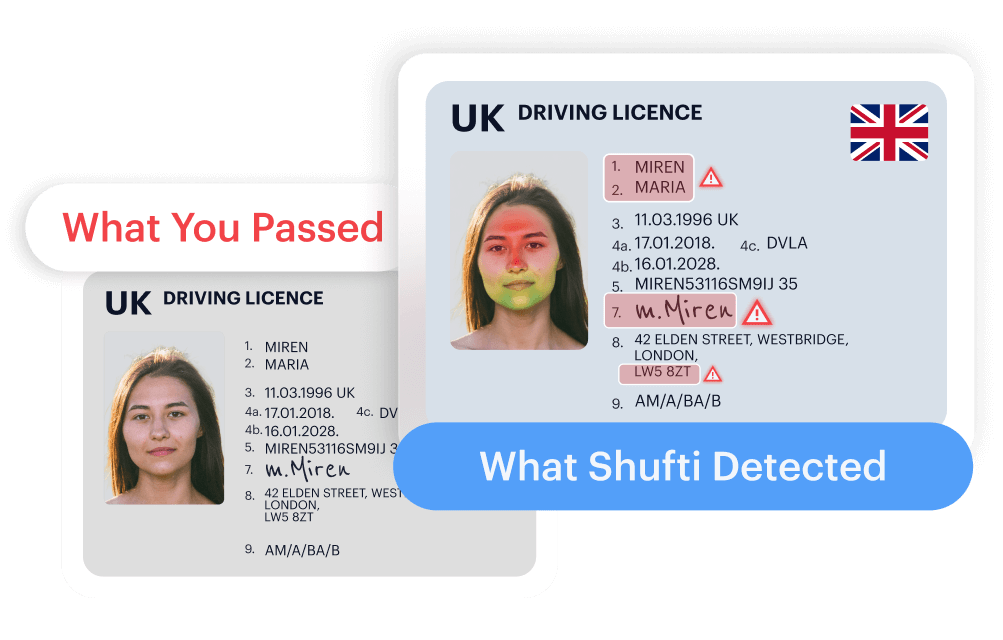

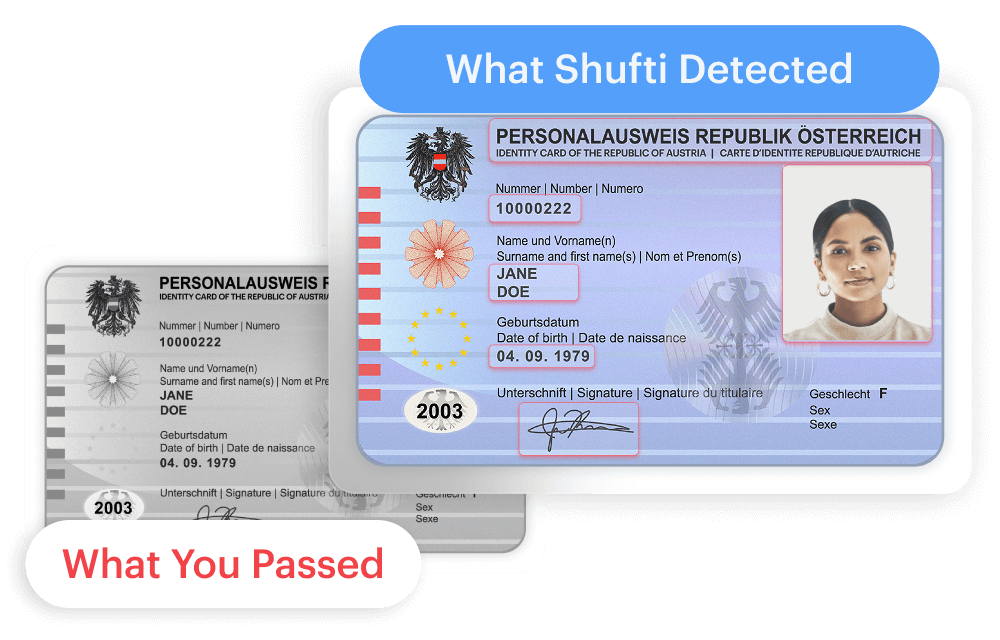

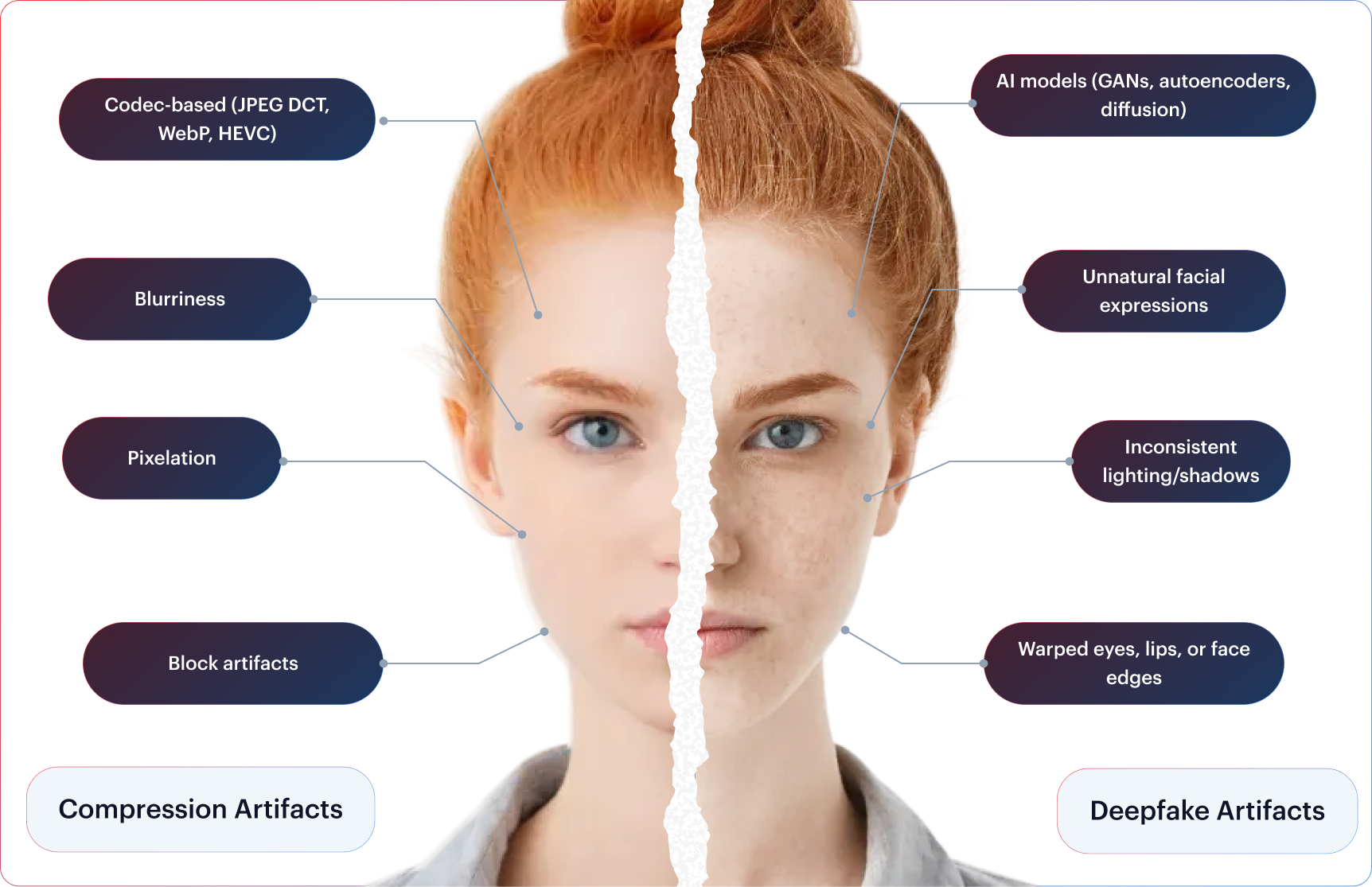

Synthetic media covers any digitally altered or generated content. Deepfakes are its high-risk subset: fully AI-generated media designed to replicate real identities with near-photorealistic accuracy.

Why Detection Starts with the Right Distinction

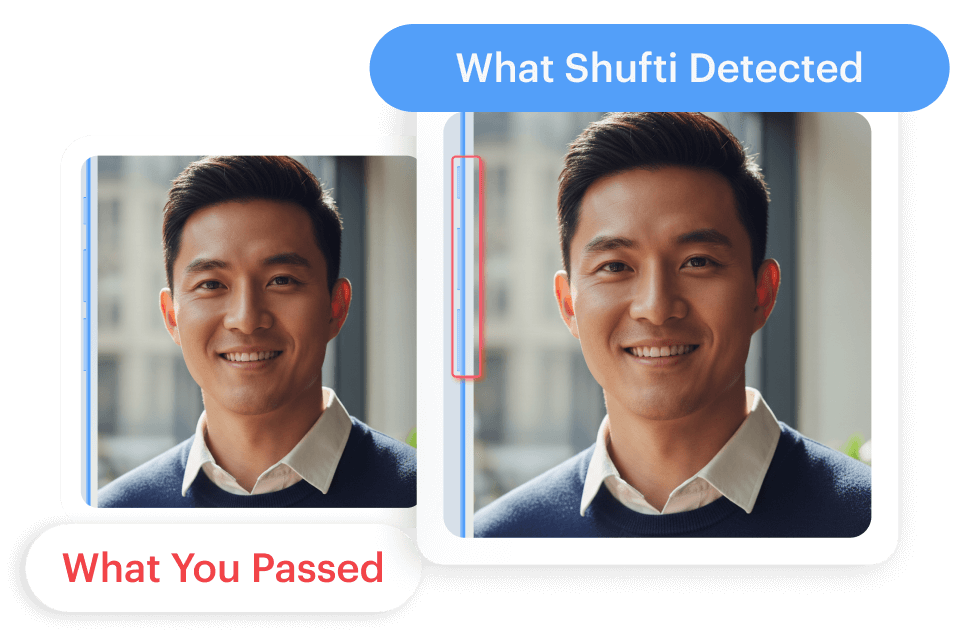

Different manipulation types leave different signals. These signals are evaluated directly within your AWS compute layer, reducing noise introduced by external pipelines or data transfers.

The Comfort of Simple Answers

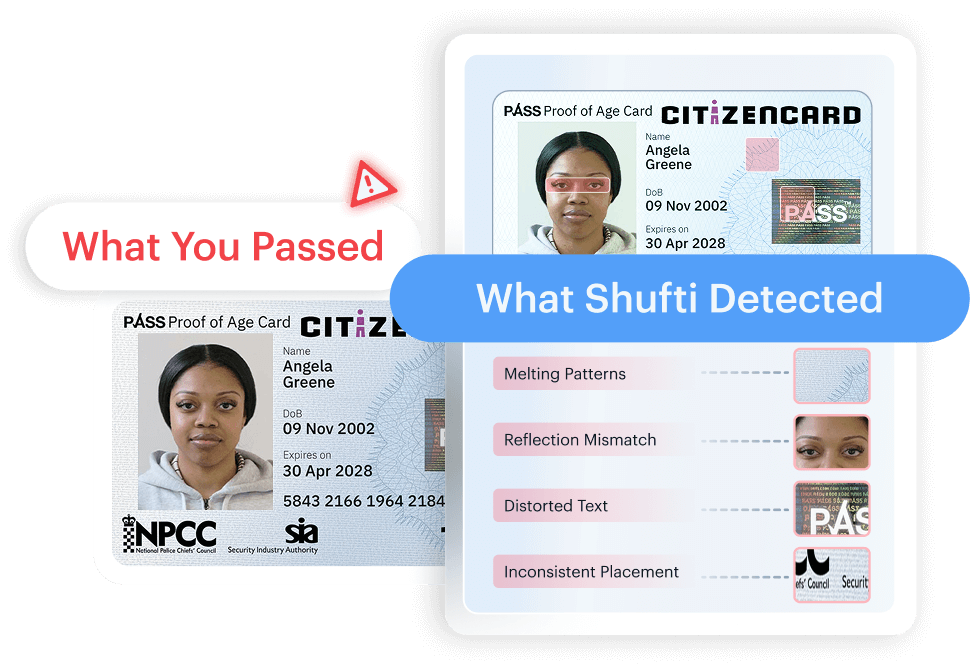

Many commercial deepfake detectors are trained on clean, controlled data rather than on deepfakes used in real attacks, such as attempts to spoof remote identity verification systems. Their performance claims often rest on threshold-based benchmarks that look impressive on paper but create a false sense of coverage against real-world threats.
