How the Use of AI in Fraud Prevention is Reshaping Financial Crime Detection

- 01 What Is AI Fraud Detection?

- 02 Generative AI and Fraud: The Current Reality

- 03 Defining Control Boundaries With AI Fraud Detection vs Identity Assurance

- 04 How AI Fraud Detection Complements KYC and IDV

- 05 Governing AI Fraud Detection Systems: From Black Boxes to Defensible Decisions

- 06 AI Fraud Detection in Banking: Unified Threat and Regulatory View

- 07 AI Governance, Explainability, and Human Oversight in Fraud Detection

- 08 Industry Applications Beyond Banking

- 09 Operational and Strategic Benefits for Businesses

- 10 The Future of AI in Fraud Prevention (2026–2030)

- 11 Shufti’s AI Fraud Detection as Trust Infrastructure

Artificial intelligence has become a central driver of fraud detection across digital finance, payments, and platform-based services. It now operates as an essential control layer in transaction processing, onboarding decisions, and account management. Detection accuracy, once the primary measure of success, is no longer the distinguishing factor in the industry. Speed, scalability, and pattern recognition have all advanced considerably.

Of more immediate concern are governance, accountability, and regulatory compliance, which now determine whether an AI fraud detection system is considered fit for use in regulated settings. These systems do not merely flag warning signs; they make automated determinations with significant financial, legal, and customer implications. They function as risk management infrastructure that affects access to services, triggers compliance obligations, and shapes audit outcomes. Regulators increasingly treat them as decision-making engines that require oversight, documentation, and scrutiny, rather than as isolated analytical tools.

What Is AI Fraud Detection?

AI fraud detection uses machine learning models and advanced analytics to identify patterns, anomalies, and behaviors indicative of fraudulent activity. Unlike traditional rule-based systems, which operate within a fixed set of rules, AI-driven approaches adapt over time as they learn from new data and emerging fraud methods.

Modern AI fraud detection systems typically combine several major elements:

- Trained machine learning models that are informed by historical and real-time data.

- Behavioral analysis that evaluates intent rather than just surface-level characteristics.

- Real-time scoring combined with automated response functionalities.

These systems are now embedded in transaction processing, account management, and digital interactions, and are considered essential for managing operational risk. The primary benefits are improved speed, greater scalability, and reduced dependence on manual review. For many organizations, AI fraud detection has already contributed to substantial reductions in fraud losses and false-positive rates.
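As a rough illustration of how real-time scoring and an automated response fit together, the sketch below scores a transaction with a small logistic model and maps the score to an action. The feature names, weights, and thresholds are all hypothetical; a production system would learn the weights from historical data rather than hard-coding them.

```python
import math
from dataclasses import dataclass

# Hypothetical weights and bias; a real model would be trained, not hand-set.
WEIGHTS = {"amount_zscore": 0.9, "new_device": 1.4, "geo_velocity_kmh": 0.002}
BIAS = -3.0

# Hypothetical action thresholds on the estimated fraud probability.
REVIEW_THRESHOLD = 0.30
BLOCK_THRESHOLD = 0.80


@dataclass
class Transaction:
    amount_zscore: float     # how unusual the amount is for this customer
    new_device: int          # 1 if the device has not been seen before, else 0
    geo_velocity_kmh: float  # implied travel speed since the previous login


def fraud_probability(tx: Transaction) -> float:
    """Logistic score: weighted sum of features squashed into [0, 1]."""
    z = BIAS + sum(weight * getattr(tx, name) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def respond(tx: Transaction) -> str:
    """Map the score to an automated response."""
    p = fraud_probability(tx)
    if p >= BLOCK_THRESHOLD:
        return "block"
    if p >= REVIEW_THRESHOLD:
        return "review"
    return "allow"


if __name__ == "__main__":
    routine = Transaction(amount_zscore=0.2, new_device=0, geo_velocity_kmh=10)
    unusual = Transaction(amount_zscore=3.0, new_device=1, geo_velocity_kmh=900)
    print(respond(routine), respond(unusual))
```

Keeping the thresholds separate from the model lets a risk team tune the balance between blocked fraud and customer friction without retraining.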

Generative AI and Fraud: The Current Reality

Generative AI has expanded capabilities on both sides of the fraud equation. Fraudsters now use generative tools to produce more convincing phishing messages, automate social engineering campaigns, and simulate genuine user behavior at scale. Defenders, meanwhile, use generative methods to enrich training data, establish behavioral baselines, and assist investigations.

These advances represent an intensification of existing tactics rather than a transformation in the underlying architecture of fraud.

Defining Control Boundaries With AI Fraud Detection vs Identity Assurance

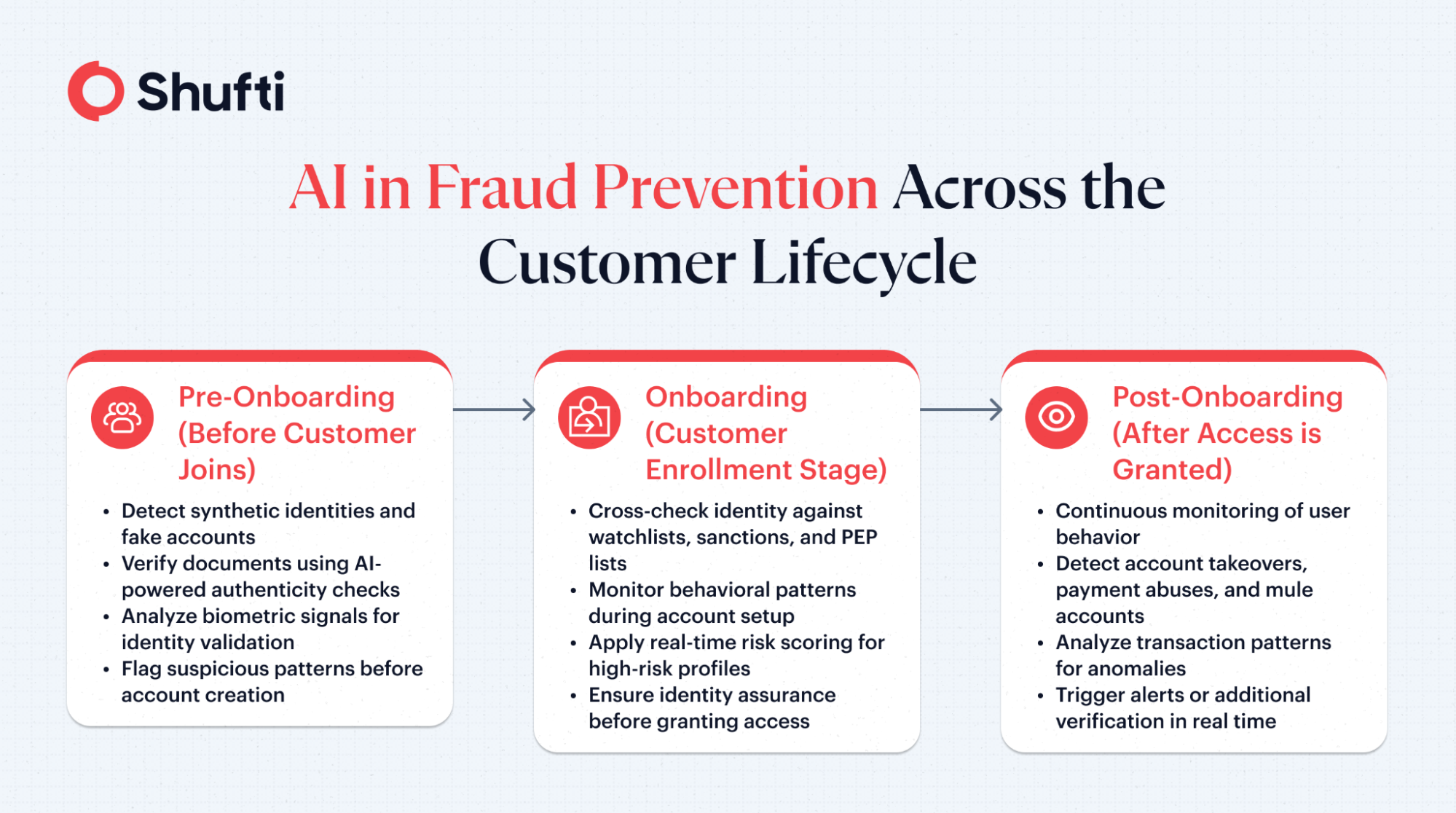

Fraud does not occur at a single point in time; it spans the entire customer lifecycle. For instance:

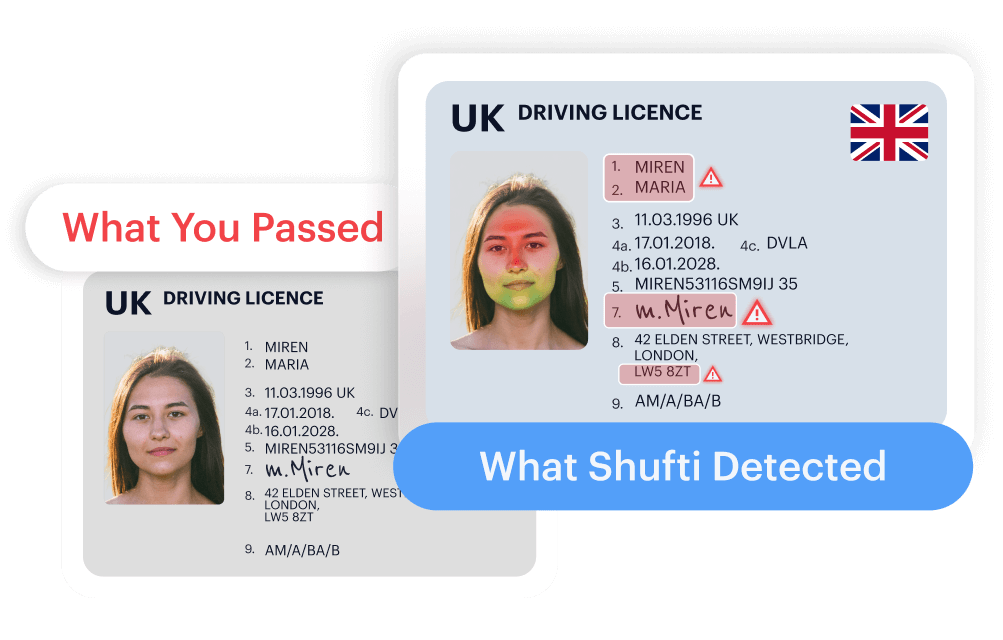

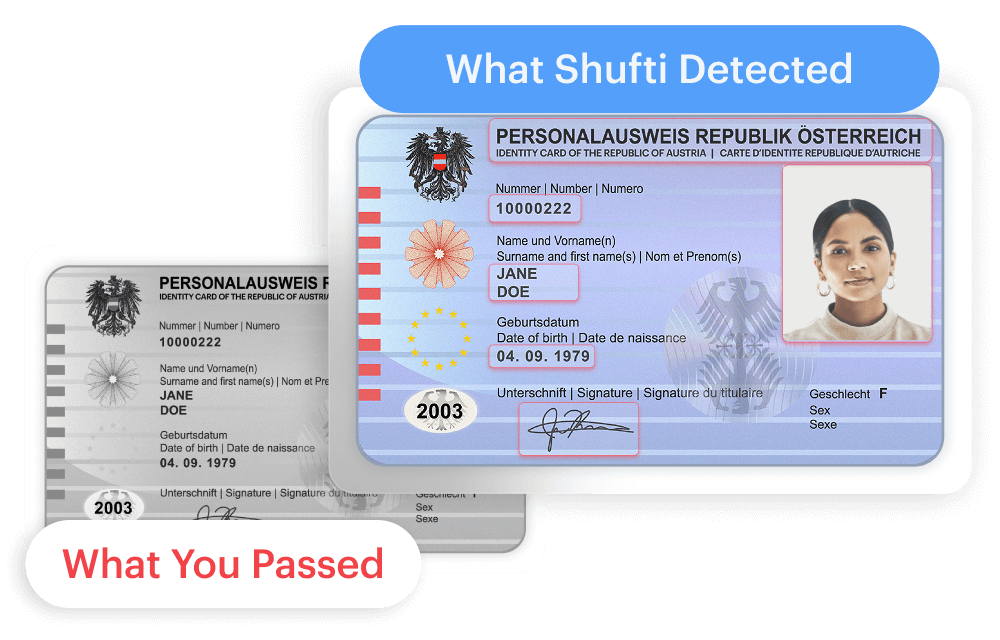

- Pre-onboarding fraud involves tactics such as creating synthetic identities, generating fake accounts, and manipulating documents.

- Post-onboarding fraud includes activities like account takeovers, payment abuses, and the operation of mule accounts.

AI fraud detection is most effective when it continues to monitor behavior after access is granted. However, even the most sophisticated behavioral models cannot eliminate the risk of compromised or fake identities if identity assurance during onboarding is weak.

How AI Fraud Detection Complements KYC and IDV

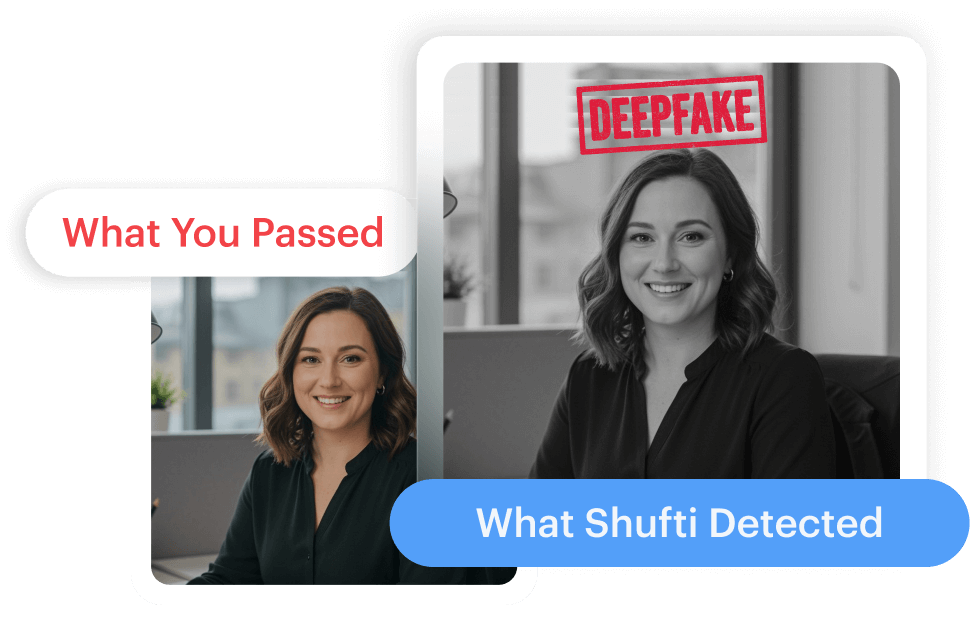

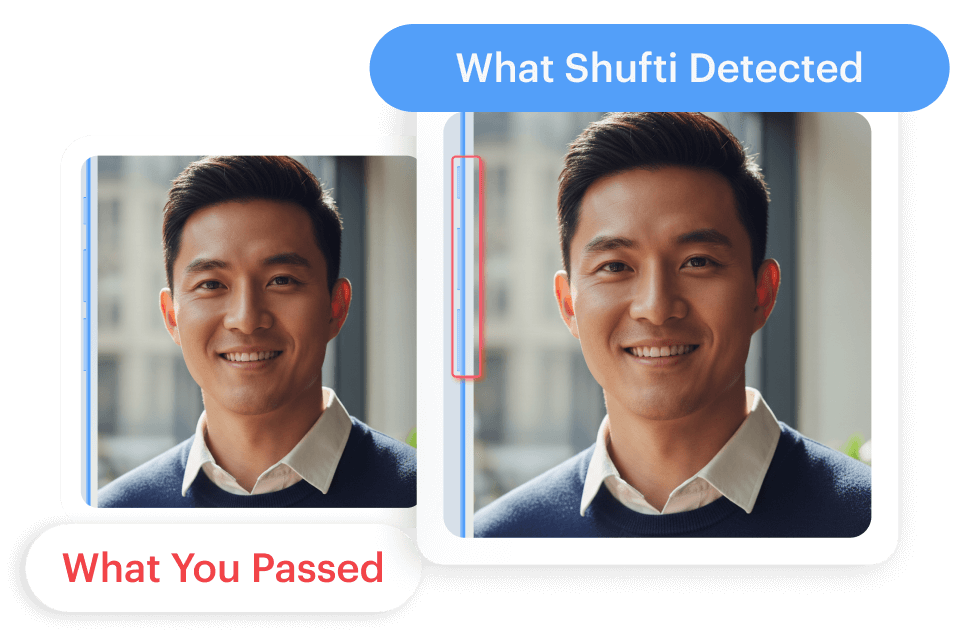

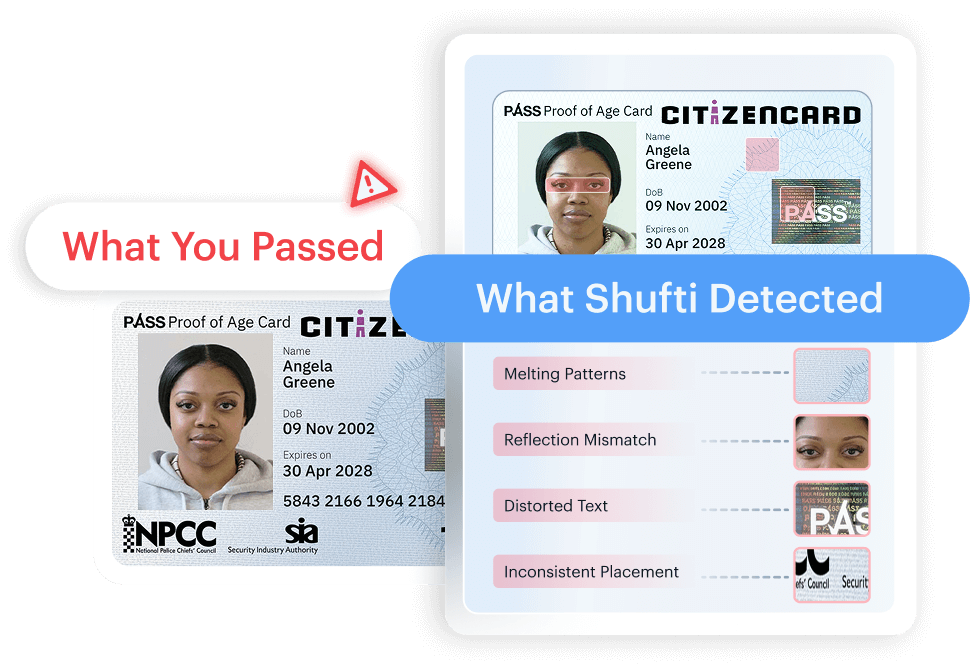

Identity verification lays the foundation for trust, particularly in regulated businesses where identity fraud represents a foundational risk. With the growing use of generative AI, identity-based attacks are becoming more sophisticated, making it critical to anchor fraud prevention strategies in strong identity controls. AI-based fraud detection continuously assesses risk by analyzing biometric signals, document authenticity markers, and behavioral consistency, allowing organizations to validate not just who the user is, but whether that identity remains credible over time.

Intent-based detection alone, without effective identity controls, leaves significant gaps, especially in environments that require customer due diligence. Conversely, Know Your Customer (KYC) procedures that rely solely on static checks do not evolve with emerging fraud patterns. When AI strengthens identity-centric fraud prevention, it enables early risk detection and reduces downstream exposure.

To prevent fraud effectively, these layers must interoperate rather than function independently. An identity-first approach supports both fraud prevention and AML compliance by improving the accuracy of transaction monitoring and reducing false positives. As a result, AI acts as a preventative control that enhances trust and compliance, rather than a reactive safeguard applied after fraud has already occurred.
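One way to picture the layers interoperating is to let the identity assurance level set the alert threshold that transaction monitoring applies to a behavioral score. The sketch below is illustrative only: the assurance levels, the threshold values, and the `should_alert` helper are assumptions, not a description of any particular product.

```python
from enum import Enum


class Assurance(Enum):
    """Hypothetical identity assurance levels established at onboarding."""
    UNVERIFIED = 0
    DOCUMENT_VERIFIED = 1
    DOCUMENT_AND_BIOMETRIC = 2


# Hypothetical thresholds: stronger identity assurance tolerates a higher
# behavioral score before an alert is raised, which reduces false positives.
ALERT_THRESHOLD = {
    Assurance.UNVERIFIED: 0.40,
    Assurance.DOCUMENT_VERIFIED: 0.55,
    Assurance.DOCUMENT_AND_BIOMETRIC: 0.70,
}


def should_alert(behavior_score: float, assurance: Assurance) -> bool:
    """Raise an alert when the behavioral score reaches the level's threshold."""
    return behavior_score >= ALERT_THRESHOLD[assurance]


if __name__ == "__main__":
    # The same behavioral score leads to different outcomes per identity level.
    for level in Assurance:
        print(level.name, should_alert(0.5, level))
```

The same behavioral signal is treated as more suspicious when little is known about who is behind the account.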

Governing AI Fraud Detection Systems: From Black Boxes to Defensible Decisions

Why Accuracy Alone Is No Longer Sufficient

Regulators and auditors expect organizations to demonstrate control over how automated decisions are made. This includes the ability to explain why a transaction was blocked, an account was restricted, or a customer was labeled high risk.

The fundamental change is that the question is no longer whether AI works, but whether its decisions can be justified.

The Black Box Problem in Regulated Environments

Many AI models operate as opaque systems that produce outcomes without transparent reasoning. In regulated industries, this opacity introduces serious risks:

- Inability to explain decisions during audits or investigations

- Difficulty resolving customer disputes and complaints

- Increased exposure to legal and regulatory penalties

What regulators require is decision transparency: clear, documented reasoning that can be communicated to non-technical stakeholders.

Building Governable AI Fraud Detection Systems

Governance-ready AI fraud detection systems incorporate:

- Documented model logic and training assumptions

- Clear reason codes attached to decisions

- Threshold management and version control

- Human-in-the-loop escalation for high-risk cases

These controls do not weaken fraud prevention. Instead, they ensure that automated systems can operate at scale without undermining accountability.
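The controls listed above can be sketched as a decision record that carries its own justification. This is a minimal sketch under stated assumptions: the reason codes, version strings, threshold values, and the rule that every non-allow outcome is escalated to a human reviewer are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ThresholdPolicy:
    """Versioned thresholds, so a past decision can be tied to the policy in force."""
    version: str
    review_at: float
    block_at: float


@dataclass
class Decision:
    transaction_id: str
    score: float
    action: str
    reason_codes: list
    model_version: str
    policy_version: str
    needs_human_review: bool
    decided_at: str


def decide(transaction_id, score, signals, model_version, policy):
    """Build a decision record; `signals` maps hypothetical reason codes to booleans."""
    reason_codes = sorted(code for code, fired in signals.items() if fired)
    if score >= policy.block_at:
        action = "block"
    elif score >= policy.review_at:
        action = "review"
    else:
        action = "allow"
    return Decision(
        transaction_id=transaction_id,
        score=score,
        action=action,
        reason_codes=reason_codes,
        model_version=model_version,
        policy_version=policy.version,
        # Assumption: anything at or above the review threshold goes to an analyst.
        needs_human_review=score >= policy.review_at,
        decided_at=datetime.now(timezone.utc).isoformat(),
    )


def to_audit_json(decision: Decision) -> str:
    """Serialize the record for an audit trail."""
    return json.dumps(asdict(decision), sort_keys=True)


if __name__ == "__main__":
    policy = ThresholdPolicy(version="policy-v3", review_at=0.30, block_at=0.80)
    signals = {"NEW_DEVICE": True, "AMOUNT_SPIKE": True, "GEO_MISMATCH": False}
    print(to_audit_json(decide("tx-1", 0.85, signals, "model-1.2.0", policy)))
```

Because each record names the model and policy versions that produced it, a reviewer can later reconstruct why a given transaction was blocked even after thresholds have changed.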

AI Fraud Detection in Banking: Unified Threat and Regulatory View

Banks face a wide range of fraud risks that include authorized push payment scams, wire fraud, real-time payment abuse, account takeovers, and vendor payment manipulation. These threats are becoming increasingly sophisticated, often blending social engineering tactics with technical exploits.

To counter these threats, banking fraud controls must satisfy a variety of overlapping regulatory obligations. These include Anti-Money Laundering (AML) and Counter-Terrorism Financing (CTF) requirements, the revised Payment Services Directive (PSD2) and its Strong Customer Authentication (SCA) requirements, the Payment Card Industry Data Security Standard (PCI DSS), and established model risk management frameworks.

During audits and examinations, regulators pay close attention to:

- Automated decision logic and override mechanisms

- False positive handling and customer impact

- Escalation workflows and human oversight

- Audit trails and documentation quality

Banks that treat AI fraud detection as a purely technical system often struggle to meet these expectations.

AI Governance, Explainability, and Human Oversight in Fraud Detection

Behavioral and transactional data play a major role in AI-driven fraud detection, but the real risk lies in how these systems are governed and overseen. As AI is increasingly used to automate fraud decisions, organizations must clearly define how models operate, how decisions are made, and where human intervention is required. Strong governance frameworks ensure accountability across the AI lifecycle, from model development to deployment and ongoing monitoring.

Regulators are placing growing emphasis on explainability, record-keeping, and human oversight in automated decision-making systems. Frameworks such as the EU AI Act require human oversight, logging, and transparency for high-risk AI systems so that decisions can be understood, challenged, and audited. The Act's high-risk category for creditworthiness assessment explicitly excepts systems used to detect financial fraud, so whether a given fraud detection system falls in scope depends on how it is used, but these controls remain a useful benchmark. Similarly, in the United States, supervisory guidance on model risk management (such as SR 11-7) highlights the need for model validation, documentation, and ongoing monitoring to prevent unintended outcomes.

Without effective governance and oversight, AI systems may amplify bias, produce opaque decisions, or operate beyond acceptable risk thresholds. Ethical controls, periodic reviews, explainable outputs, and controlled retraining are therefore essential to maintaining trust, regulatory alignment, and responsible use of AI in fraud detection.

Industry Applications Beyond Banking

AI fraud detection extends well beyond banking and provides an essential control in any digital ecosystem.

- Marketplaces and E-commerce: AI fraud detection helps mitigate chargeback abuse, promotion fraud, and account farming, where scale and velocity make manual controls impractical.

- Platforms, Gaming, and High-Velocity Environments: AI models can identify bot-driven abuse, bonus abuse, and coordinated fraud attacks in real time, even in high-traffic environments.

- Crypto and Emerging Financial Models: Crypto platforms use AI to detect wallet fraud and synthetic identities while adapting to an ever-changing regulatory environment.

Operational and Strategic Benefits for Businesses

Beyond fraud reduction, AI delivers measurable operational benefits. Automated risk assessment shortens onboarding timelines and reduces manual review costs. Compliance teams can focus on complex investigations rather than repetitive checks.

Strategically, AI enables scalability. As transaction volumes increase, AI-driven systems handle growth without proportional increases in compliance headcount. This scalability is particularly valuable for fintech firms expanding into new regions with varying regulatory requirements.

Moreover, data-driven insights generated by AI support continuous improvement. Fraud trends, customer behavior shifts, and control effectiveness become measurable, allowing informed decision-making at both operational and executive levels.
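To make "control effectiveness" concrete, one common approach is to compare what the system flagged against what later proved to be fraud. The sketch below computes precision, recall, and false positive rate from such outcomes; the `control_metrics` helper and the sample counts are hypothetical and purely illustrative.

```python
def control_metrics(outcomes):
    """Compute effectiveness metrics from (flagged, was_fraud) boolean pairs."""
    tp = fp = fn = tn = 0
    for flagged, was_fraud in outcomes:
        if flagged and was_fraud:
            tp += 1  # fraud correctly flagged
        elif flagged and not was_fraud:
            fp += 1  # legitimate activity flagged (false positive)
        elif not flagged and was_fraud:
            fn += 1  # fraud that was missed
        else:
            tn += 1  # legitimate activity left alone
    return {
        # Share of flagged cases that were actually fraud.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Share of actual fraud that was flagged.
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        # Share of legitimate activity that was wrongly flagged.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
    }


if __name__ == "__main__":
    # Illustrative sample: 100 reviewed cases, 10 of them confirmed fraud.
    sample = (
        [(True, True)] * 8
        + [(True, False)] * 2
        + [(False, True)] * 2
        + [(False, False)] * 88
    )
    print(control_metrics(sample))
```

Tracking these figures per model and threshold version over time shows whether a change actually improved the control or only shifted the cost from fraud losses to customer friction.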

The Future of AI in Fraud Prevention (2026–2030)

Going forward, fraud ecosystems will become more autonomous. AI-driven networks will be able to create synthetic identities, coordinate attacks, and adapt dynamically. Defensive systems will therefore be competing against AI-generated behaviors designed specifically to evade detection.

In such an environment, opaque automation may become a serious liability. Companies that prioritize well-governed, transparent, and regulator-aligned AI fraud detection systems will be better placed to navigate the escalating fraud prevention arms race.

AI fraud detection systems are no longer optional security tools. They are foundational components of digital trust. Their importance lies not only in preventing fraud but also in helping organizations continue operating within regulatory and ethical frameworks.

As fraud prevention technologies advance and systems become more automated, the organizations that thrive will be those that treat AI in fraud prevention not as a black box but as structured infrastructure that is transparent, accountable, and able to support long-term growth.

Shufti’s AI Fraud Detection as Trust Infrastructure

Fraud prevention challenges often stem from fragmented controls, manual processes, and limited visibility across the customer lifecycle. Businesses struggle to balance regulatory compliance, customer experience, and fraud risk as digital onboarding volumes grow.

Shufti addresses these challenges by embedding AI-driven identity verification and fraud prevention capabilities into a unified compliance framework. Intelligent automation supports real-time identity checks, risk assessment, and ongoing monitoring aligned with global AML and KYC requirements. This approach helps businesses reduce fraud exposure while maintaining operational efficiency and regulatory confidence.

A tailored demo allows organizations to explore how AI-powered verification and fraud controls can strengthen compliance programs without disrupting customer journeys.
