5 Key Takeaways from the FATF Horizon Scan Report on Deepfakes

- 1. Deepfake Technology is Becoming a Major Threat to AML Compliance

- 2. The Threat of Deepfakes Bypassing CDD Systems

- 3. Why Deepfake Detection Must Keep Evolving

- 4. How can Financial Institutions Address the Challenge of AI Deepfakes?

- 5. Best Practices for Detecting AI Deepfakes for Law Enforcement Agencies

- Strengthening Onboarding Resilience Against Deepfakes

The latest FATF Horizon Scan AI and Deepfakes Report, published on 22nd December, 2025, provides a future-focused analysis of the risk trends posed by AI, particularly deepfakes. The report highlights two prevailing narratives about AI in the financial world: AI can increase efficiency in compliance, preventive measures, and law enforcement, but it can also be weaponized by money launderers, terrorist financiers, and sanctions evaders to circumvent existing AML/CFT/CPF frameworks.

This blog summarizes five key takeaways from the report on how AI-enabled deepfakes exploit the financial system, and the preventive measures institutions must take to stay ahead of this growing threat.

1. Deepfake Technology is Becoming a Major Threat to AML Compliance

The evolution of deepfake technology has turned this threat from a rare occurrence into a concern that demands proactive effort to stay ahead. AI-enabled deepfakes can take multiple forms of synthetic media:

- Videos

- Images

- Audio

By reproducing a person's movements, voice, or likeness, this synthetic media is used to impersonate well-known individuals and to deceive victims in complex fraud schemes ranging from phishing attacks to romance scams. The key risk is that AI-enhanced deepfakes allow bad actors to bypass existing AML, CFT, and CPF controls, particularly Customer Due Diligence (CDD) measures.

The report emphasizes that, until recently, deepfake fraud in the financial sector was held in check by the technical expertise and vast resources needed to produce convincing deepfakes. The rapid evolution of AI has lowered that barrier to entry. Because leading technology companies now bear the burden of both the expertise (the AI models) and the resources (large AI data centres with high-density computing power), a smartphone and internet access are all cybercriminals need to create convincing deepfakes in minutes, for example by generating images with popular generative AI tools.

2. The Threat of Deepfakes Bypassing CDD Systems

Customer Due Diligence is a core step in the Know Your Customer (KYC) cycle. It helps financial institutions verify a customer's identity and understand who they are dealing with. FATF Recommendations 10 and 22 require authorities to ensure that Financial Institutions (FIs), Virtual Asset Service Providers (VASPs), and Designated Non-Financial Businesses and Professions (DNFBPs) identify their customers using reliable documents and information.

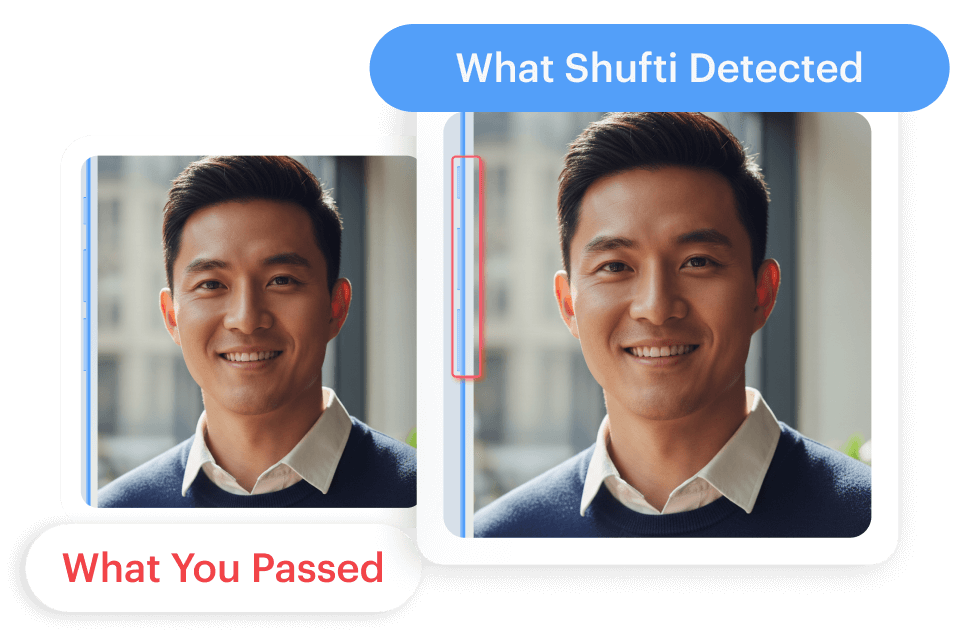

However, AI-enhanced deepfakes that can manipulate biometric authentication and mimic other individuals pose a direct challenge to the traditional CDD systems deployed across FIs, VASPs, and DNFBPs.

When bad actors create a convincing deepfake of an individual, they can bypass the biometric and liveness tests used by that individual's FI. This gives them access to the sensitive data and funds of the person they have impersonated.

Drawing on a roundtable discussion with law enforcement agencies from around the world, the report identifies three main issues that allow deepfakes to exploit traditional CDD systems:

a. High Reliance on Biometric Verification:

The growing reliance of FIs and other institutions on video KYC and facial recognition increases their exposure to deepfake exploitation.

To address the elevated risk of deepfake exploitation during remote onboarding, FATF recommends a risk-based approach tailored to digital ID systems.

This "informed risk-based approach" is set out in FATF's Guidance on Digital Identity.

b. Complex Cross-Border Co-operation:

Uneven progress across jurisdictions makes digital identification complex: some jurisdictions have strict identity verification (IDV) requirements, while others do not. This lack of uniformity gives bad actors weak points to exploit.

c. Limited Technology Adoption:

Despite the growing threat of modern deepfakes, many AML solution providers have not yet upgraded their tools to detect synthetic identities.

As FIs and other institutions move their operations online, these risks are amplified more than ever.

3. Why Deepfake Detection Must Keep Evolving

The rapid evolution of AI-driven deepfakes has put noticeable pressure on FIs and other institutions to keep up by continually enhancing their detection tools and investing in specialized expertise. FIs must also ensure that the preventive measures they deploy are scalable, secure, and compliant with regulatory requirements, without undermining the end-user experience.

Because deepfake technology advances so quickly, a new threat often emerges before the previous one has been identified and adequately addressed, forcing FIs and other institutions to restart the upgrade process, and the cycle continues. The report illustrates this 'arms race' with two real-world examples:

a. The Dual-Use of AI:

The European Union Agency for Law Enforcement Cooperation (Europol) has documented the weaponization of AI-enhanced deepfakes by both low-skilled offenders and highly skilled cybercriminals.

While low-skilled fraudsters use widely available general-purpose AI to generate deepfakes for illicit purposes (phishing, child sexual abuse, impersonation), highly skilled cybercriminals use AI to produce expert-level deepfakes that can replicate fingerprints, login credentials, and browser behaviour, and even bypass two-factor authentication.

Europol also identifies emerging threats such as "typosquatting", whereby bad actors upload malicious software packages to public repositories under names that closely resemble legitimate ones, in order to deceive developers.

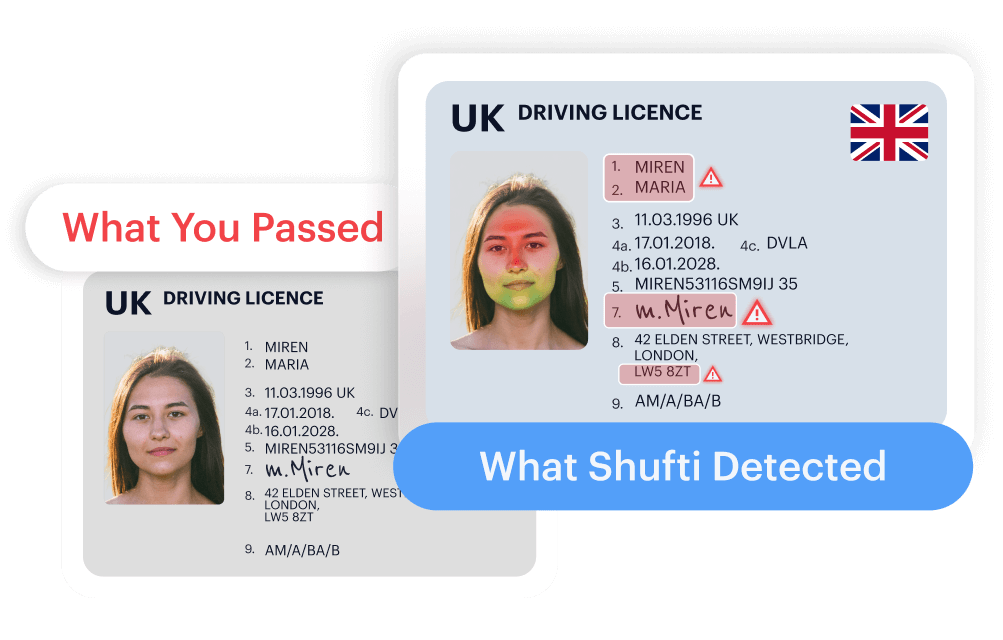

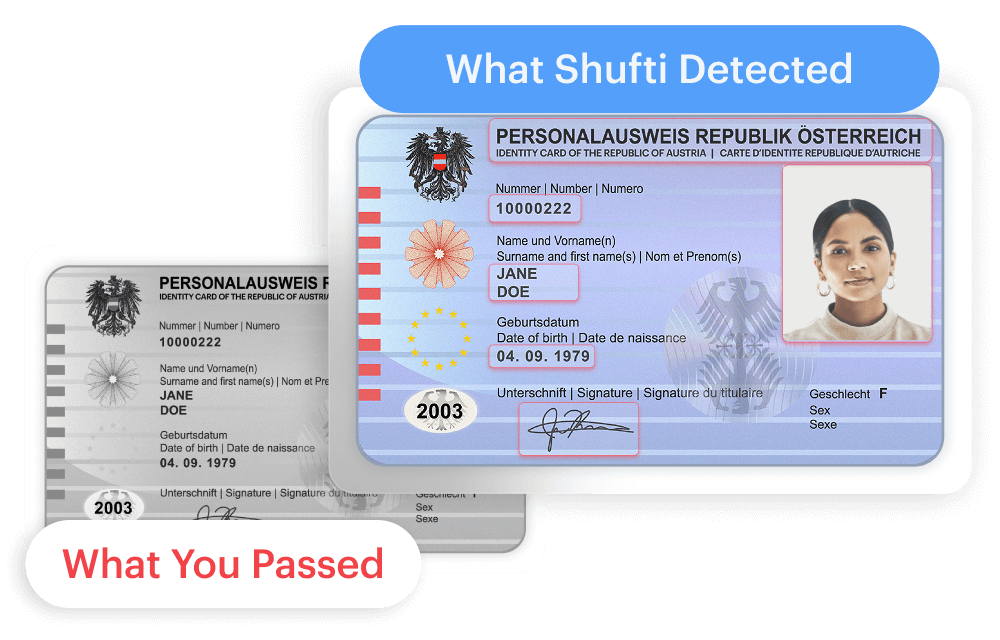

b. Hybrid Synthetic Identities:

One jurisdiction reported that stolen identity documents were used to create hybrid synthetic identities, merging the victims' ID details with the suspects' own information, to bypass CDD controls and open bank accounts.

The same jurisdiction also reported the use of AI-generated deepfakes impersonating senior executives of a company. The fraudsters deceived the firm's CFO into transferring USD 25 million to a fraudulent account. In another instance, police uncovered a deepfake scam centre that ran specialised romance scams.

Deepfake fraud is therefore no longer a one-time control gap; it is a moving target that demands continuous investment in detection, expertise, and stronger verification across the full onboarding and transaction lifecycle.

4. How can Financial Institutions Address the Challenge of AI Deepfakes?

The report frames deepfakes as an operational problem that sits squarely inside the AML/CFT/CPF framework, because they can break trust at the exact points where CDD measures, digital ID verification, and customer authentication are supposed to work.

FATF describes a fast-moving threat cycle where deepfake detection capabilities must keep evolving, which pushes FIs, VASPs, and DNFBPs to keep investing in advanced detection tools and specialised expertise, sometimes through external technology providers.

To counter the threat of AI-enhanced deepfakes, the report suggests a layered approach rather than a single control. That includes tools that spot inconsistencies in video and audio, stronger multi-layer verification, and training compliance teams to recognise AI-driven manipulation patterns.
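The layered approach can be illustrated with a simple decision rule. The following is a minimal, hypothetical Python sketch: the layer names, the 0-to-1 risk scores, and the thresholds are illustrative assumptions, not values from the FATF report or from any vendor's product. It shows why layering beats a single control: a strong signal from any one layer is enough to reject, and weak signals from independent layers corroborate each other instead of being averaged away.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    MANUAL_REVIEW = "manual_review"
    REJECT = "reject"


@dataclass(frozen=True)
class LayerResult:
    """Output of one hypothetical verification layer (e.g. liveness check)."""
    name: str
    risk: float  # assumed scale: 0.0 = no sign of manipulation, 1.0 = almost certainly synthetic


def decide(
    results: list[LayerResult],
    reject_at: float = 0.9,  # illustrative threshold (assumption)
    review_at: float = 0.5,  # illustrative threshold (assumption)
    weak_at: float = 0.3,    # illustrative threshold (assumption)
) -> Decision:
    """Combine independent layer results into an onboarding decision."""
    if not results:
        # No evidence at all: never auto-approve.
        return Decision.MANUAL_REVIEW
    for r in results:
        if not 0.0 <= r.risk <= 1.0:
            raise ValueError(f"risk for {r.name} must be in [0, 1], got {r.risk}")

    # A strong signal from any single layer rejects outright, so a deepfake
    # that fools one check cannot be "averaged out" by the clean layers.
    if any(r.risk >= reject_at for r in results):
        return Decision.REJECT

    # One moderate signal, or two or more weak signals from independent
    # layers that corroborate each other, routes the case to a human analyst.
    moderate = sum(r.risk >= review_at for r in results)
    weak = sum(r.risk >= weak_at for r in results)
    if moderate >= 1 or weak >= 2:
        return Decision.MANUAL_REVIEW

    return Decision.APPROVE


if __name__ == "__main__":
    checks = [
        LayerResult("document_authenticity", 0.05),
        LayerResult("liveness", 0.35),
        LayerResult("audio_video_consistency", 0.40),
    ]
    # Neither weak signal would trigger review alone; together they do.
    print(decide(checks).value)  # manual_review
```

In practice, each score would come from a separate control (document checks, liveness detection, audio and video inconsistency analysis), and the thresholds would be calibrated under the institution's own risk-based approach.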

Citing a real-world example, the report describes a deepfake securities fraud in which well-known news anchors appeared to report on the IPO of a fraudulent entity. To trace the funds and uncover the operation, authorities successfully used specialized content-verification technology and software trained to identify such deepfakes.

Just as AI can enhance deepfake creation, it can also help FIs and other institutions detect emerging threats.

5. Best Practices for Detecting AI Deepfakes for Law Enforcement Agencies

The report also examines AI-enhanced deepfakes from the perspective of law enforcement agencies, describing their response as a mix of capability building and operational modernisation. AI-driven fraud is often blended with traditional tactics such as phishing, social engineering, and digital deception, making investigations more complex and faster-moving.

In conclusion, the report suggests that financial institutions, other entities, and law enforcement agencies can counter the threat of AI-enabled deepfakes in two ways:

- Expanding their detection capabilities through technology

- Embracing AI for content verification, biometric analysis, and other tools that can help detect modern deepfakes.

Strengthening Onboarding Resilience Against Deepfakes

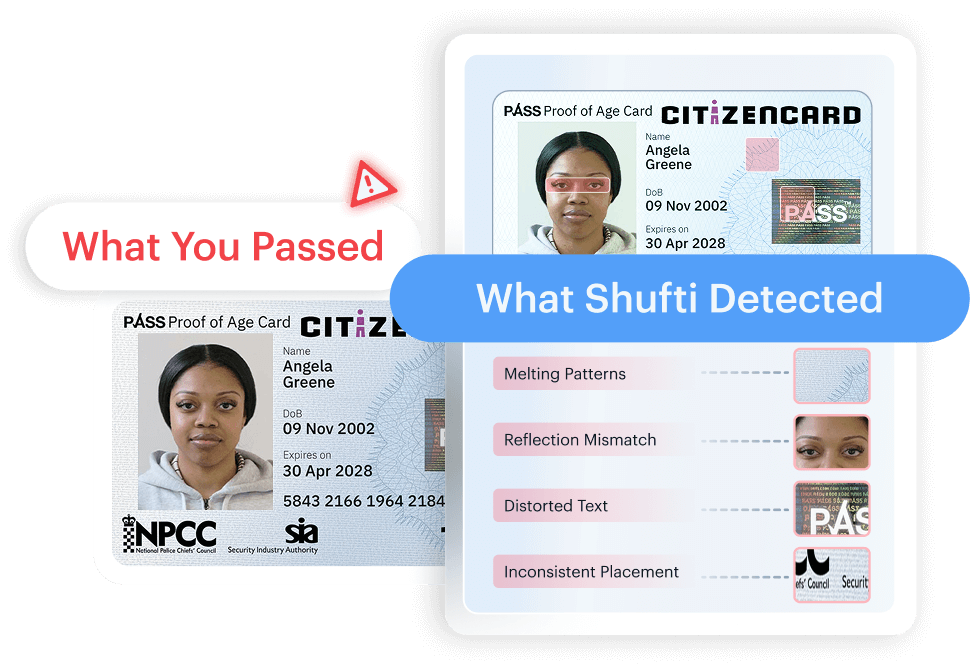

As the FATF Horizon Scan makes clear, deepfakes are putting pressure on the reliability of digital identity verification, biometric checks, and CDD processes across the financial system. Financial institutions need controls that can adapt as impersonation techniques evolve, without undermining regulatory obligations or customer experience.

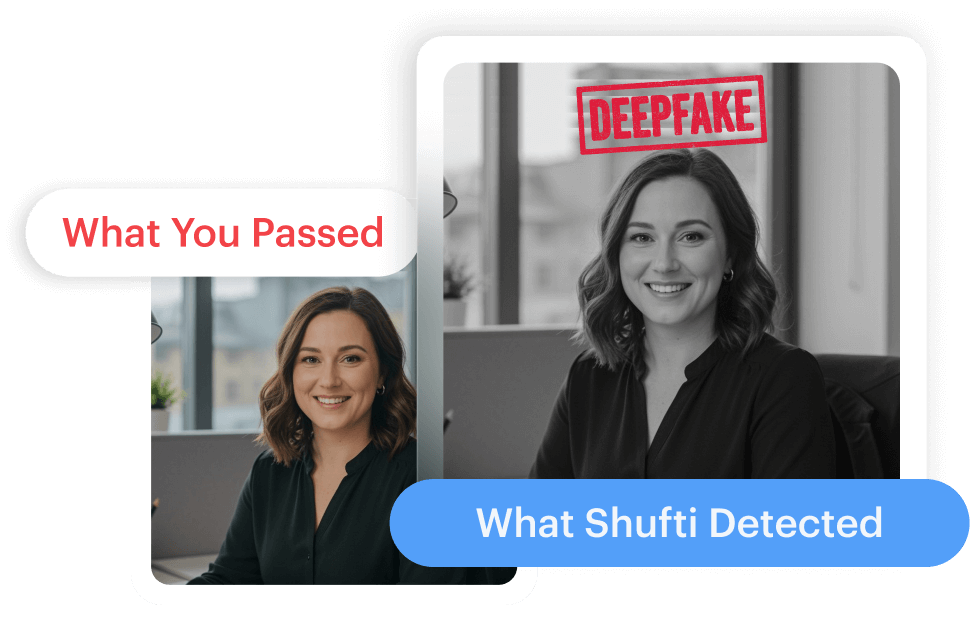

Shufti supports financial institutions and other reporting entities with identity verification and KYC workflows designed for remote onboarding, including layered verification and liveness checks to help reduce exposure to deepfake-enabled fraud.

Discover how Shufti’s regulator-grade deepfake detector can help you stay ahead of this evolving threat.
